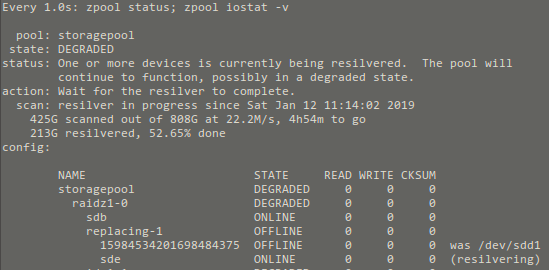

The last Ubuntu kernel update seemingly kicked two hard disks out of a ZFS raidz – sigh. With ZFS on Linux this poses an issue:

Two hard drives that were previously in this ZFS pool, named “storagepool”, were assigned completely different device names by Linux. So /dev/sdd became /dev/sdf and so on.

ZFS uses a specific metadata structure to encode information about each hard drive and its relationship to storage pools. When Linux assigned new names to the drives, apparently some of ZFS’ internal structures and mappings got shaken up.

The solution was these steps:

- export the ZFS storage pool (= take it offline)

- use the zpool subcommand “labelclear” to clear the ZFS label information from the hard drives that had become “unavailable” to the storage pool

- import the ZFS storage pool again (= bring it back online)

- use the zpool subcommand “replace” to replace the old drive name with the new drive name
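The four steps above could look roughly like the following sketch. The pool name and the sdd to sdf rename come from this post; the helper names `run` and `recover_pool` and the dry-run switch are made up for illustration. Note that `zpool labelclear` is destructive, so the sketch only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch of the recovery steps; not a tested recovery tool.
# DRY_RUN=1 (the default here) prints each command instead of running it.

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

recover_pool() {
    pool=$1      # pool name, e.g. storagepool
    old_dev=$2   # name the pool still remembers, e.g. /dev/sdd
    new_dev=$3   # name Linux assigned after the update, e.g. /dev/sdf

    run zpool export "$pool"                         # take the pool offline
    run zpool labelclear -f "$new_dev"               # clear the stale ZFS label
    run zpool import "$pool"                         # bring the pool back online
    run zpool replace "$pool" "$old_dev" "$new_dev"  # kicks off the resilver
}
```

Calling `recover_pool storagepool /dev/sdd /dev/sdf` prints the four commands in order; `zpool status storagepool` afterwards shows the resilver progress. Depending on the setup, `zpool status` may list the missing drive by a numeric ID instead of its old path, in which case that ID would be the second argument.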

After about two hours of poking around, the above strategy got the storage pool to start rebuilding (resilvering in ZFS speak). Well – learning something every day.

Bonus: I was not immediately informed of the DEGRADED state of the storage pool. That needs to change. A simple command is now run by cron every hour:

zpool status -x | grep state: | tr --delete state: | mosquitto_pub -t house/stappenbach/server/poppyseeds/zpool -l

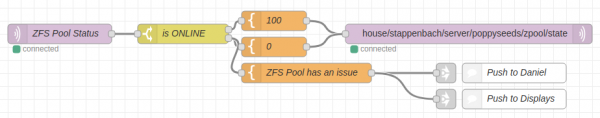

This pushes the ZFS storage pool state to MQTT, where it gets processed by a small Node-RED flow.
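Two details of the hourly one-liner are worth knowing. The `tr` step deletes the individual characters s, t, a, e and : rather than the word “state:”, which works only because pool states are written in upper case. And when everything is fine, `zpool status -x` prints “all pools are healthy” without a state line, so nothing gets published. A possible variant, as a sketch: the function names `extract_state` and `publish_state` are made up here, and reporting ONLINE for the healthy case is my own choice, not something the original command does.

```shell
#!/bin/sh
# Sketch of a cron-friendly variant of the hourly one-liner.
# Assumption: "zpool status -x" prints a "state: <STATE>" line per unhealthy
# pool and the line "all pools are healthy" otherwise.

# Reads "zpool status -x" output on stdin and prints one state per line.
# Prints nothing if the input contains neither pattern (e.g. zpool failed).
extract_state() {
    awk '$1 == "state:"            { print $2 }
         /^all pools are healthy/  { print "ONLINE" }'
}

# Topic copied from the original command.
TOPIC="house/stappenbach/server/poppyseeds/zpool"

publish_state() {
    zpool status -x | extract_state | mosquitto_pub -t "$TOPIC" -l
}
```

Saved as a script that ends with a call to `publish_state`, this could replace the pipeline in the hourly cron entry. The Node-RED flow would then also see a message when the pool is healthy, which makes it possible to notice when the server stops reporting at all.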