In part 1 I wrote a bit about this great game and shared some screenshots. By now I’ve finished the story and almost all side quests, and I still consider it the best game in years.

Anyway, here are more pictures taken in-game (sometimes stitched):

For the first time in about ten years I am playing a game that really impresses me. The story, the world and the technology of Cyberpunk 2077 really are a step forward.

It’s a first for me in many respects. I do not own a PC capable of running Cyberpunk 2077 at any quality level. Usually I play games on consoles like the PlayStation. But for this one I have chosen the PC platform. But how?

I am using game streaming. The game is rendered in a datacenter on a PC and graphics card I am renting for the purpose of playing the game. And it simply works great!

So I am playing a next-generation open-world game with technical breakthroughs like ray tracing, which produces really great graphics. The picture is streamed over the internet to my big-screen TV, and my keyboard and mouse input is forwarded to that datacenter, without any lag or quality issues that I can notice.

The only downside I can see so far is that so many people want to play this way that there are not enough machines (gaming rigs) available for everyone – so there’s a queue in the evening.

But I am doing what I am always doing when I play games. I take screenshots. And if the graphics are great I am even trying to make panoramic views of the in-game graphics. Remember my GTA V and BioShock Infinite pictures?

So here is the first batch of pictures – some stitched together from 16 or more single screenshots. Look at the detail! Again – these are in-game screenshots. Click on them to make them bigger – and right-click to open the source to really zoom into them.

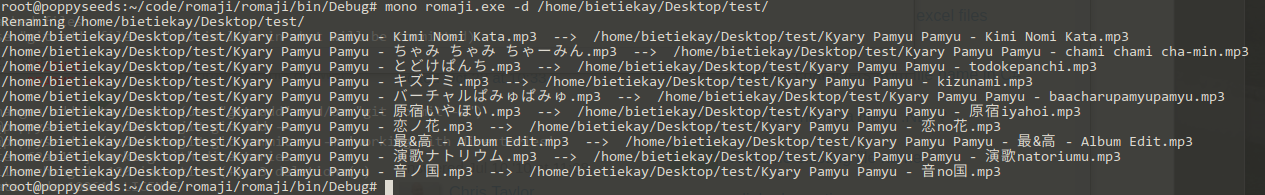

I had this strange problem that my car was not able to display Japanese characters when confronted with them. Oh, the marvels of inserting a USB stick into a car from 2009.

As far as I know there’s no real option to get it to display the characters correctly without risking bricking the car’s entertainment system.

Needless to say, my wife’s car does the trick easily – of course, it’s an Asian car!

Anyway. I wrote a command-line tool, using some awesome pre-made libraries, that converts Hiragana and Katakana characters to their romaji counterparts.
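To give an idea of what such a conversion involves, here is a minimal sketch in Python. It is not the code of the tool linked below, which relies on existing libraries. The lookup table is deliberately partial and the function names are made up for this illustration. The one firm fact it uses is that the Katakana block in Unicode sits exactly 0x60 code points above the matching Hiragana.

```python
# Partial table for illustration only. A real converter needs the full kana
# set plus digraphs, the small tsu and long vowels.
HIRAGANA_TO_ROMAJI = {
    "あ": "a", "い": "i", "う": "u", "え": "e", "お": "o",
    "か": "ka", "き": "ki", "く": "ku", "け": "ke", "こ": "ko",
    "さ": "sa", "し": "shi", "す": "su", "せ": "se", "そ": "so",
    "め": "me", "ら": "ra", "ん": "n",
}


def katakana_to_hiragana(text):
    """Shift Katakana (U+30A1..U+30F6) down by 0x60 to the Hiragana twins."""
    return "".join(
        chr(ord(c) - 0x60) if "\u30a1" <= c <= "\u30f6" else c for c in text
    )


def to_romaji(text):
    """Convert kana to romaji; unknown characters pass through unchanged."""
    return "".join(HIRAGANA_TO_ROMAJI.get(c, c) for c in katakana_to_hiragana(text))


print(to_romaji("すし"))
print(to_romaji("カメラ"))
```

Normalising Katakana to Hiragana first keeps the table half as large, at the price of losing the distinction between the two scripts.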

You can find it on github: https://github.com/bietiekay/romaji

To all techies reading this:

GIST: I am looking for interested hackers who want to help me implement a neural network that improves the accuracy of bluetooth low energy based indoor location tracking.

Longer version:

I am currently applying the finishing touches to a house-wide Bluetooth Low Energy based location tracking system. (All of which will be open-sourced.)

The system consists of 10+ ESP32 Arduino-compatible WiFi/Bluetooth system-on-a-chip modules, at least one per room of the house.

These modules are very low-powered and have one task: they scan for BLE advertisements and send the MAC address and manufacturer data plus the RSSI (signal strength) over WiFi into specific MQTT topics.

There is currently a server component that takes this data and calculates the probable location of a detected Bluetooth Low Energy device (like the Apple Watch I am wearing…). It currently uses a calibration phase to settle on a minimum accuracy, and then simple calculation matrices to identify the most probable location.

This is all very nice, but since I got interested in neural networks and AI development – and I think many others might be as well – I am asking other interested parties to join the effort.
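As a rough illustration of the idea (not the actual server component), the simplest estimate is to average the RSSI each room's scanner reports for a device and pick the strongest. The sketch below assumes readings arrive as `(room, rssi)` pairs. The function name and the optional per-room calibration offsets are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def estimate_room(readings, offsets=None):
    """Return the room whose scanner sees the device with the strongest mean RSSI.

    readings: iterable of (room, rssi_dbm) tuples for one device.
    offsets:  optional dict room -> calibration offset in dB (hypothetical).
    """
    offsets = offsets or {}
    per_room = defaultdict(list)
    for room, rssi in readings:
        per_room[room].append(rssi + offsets.get(room, 0.0))
    if not per_room:
        return None
    # RSSI is negative; values closer to zero mean a stronger signal.
    return max(per_room, key=lambda room: mean(per_room[room]))


readings = [
    ("kitchen", -78), ("kitchen", -80),
    ("office", -61), ("office", -65),
    ("hall", -90),
]
print(estimate_room(readings))  # office
```

A neural network would replace the `max` over averages with a learned mapping from the full RSSI vector to a room, which is where the logged data comes in.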

I do have an existing set-up as well as gigabytes of log data.

I know about previous work like “Indoor location tracking system using neural network based on bluetooth”.

Now, I am totally new to the overall concepts and tooling, and I am just starting to play with TensorFlow.

If you want to join, let me know by commenting!

Did you know how dangerous lithium-polymer batteries can be? If not treated well, they can literally burst into flames spontaneously.

So it’s quite important to follow a couple of guidelines to not burn down the house.

Since I am just about to get into the hobby of FPV quadcopter racing, I’ve tried to follow those guidelines and found that the smart house can help me keep track of things.

Unfortunately there are not a lot of reasonably priced LiPo chargers with computer interfaces that allow monitoring while charging or discharging the batteries. But there are a couple of workarounds I’ve found useful.

There are a couple more things to it, like keeping track of charging processes in a calendar, as you can see in the flowchart behind all of the above.

There are a lot of things that happen in the smart house that are connected somehow.

And the smart house knows about those events happening and might suggest, or even act upon the knowledge of them.

A simple example:

In our living room we’ve got a nice big aquarium which, depending on the time of day and season, simulates its very own little dusk-till-dawn light show for the pleasure of its inhabitants.

Additionally the water quality is improved by an air pump that generates nice bubbles and enriches the water with oxygen. But that comes at a cost: when you are in the room, those bubbles and the hissing of the inverter for the “sun” produce sounds that are distracting and disturbing in the otherwise quiet room.

Now the smart home comes to the rescue:

It detects whenever someone enters the room and stays for longer, powers up the TV or listens to music. It also logs that, whenever these things happen, the aquarium air pump and maybe the lights are regularly turned off, and that they are turned back on again when the person leaves.

These correlations are what the smart house uses to identify groups of switches, events and actions that are somehow tied together. It’ll prepare a report and recommend actions which, at the push of a button, can become a routine task that is always executed when certain characteristics line up.

And since the smart house is a machine, it can look for correlations in a lot more dimensions than a human could: date, time, location, duration, sensor and actuator values (power up TV, temperature in room < 22, calendar = November, windows closed => turn on the heating).
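One simple way to surface such correlations is to count how often one event follows another within a short time window. This is only a sketch of that idea, not a description of what the house actually runs. The event names, the 5-minute window and the function name are assumptions for illustration.

```python
from collections import Counter


def follow_counts(events, window_s=300):
    """Count how often event b occurs within window_s seconds after event a.

    events: list of (timestamp_seconds, name) tuples, sorted by timestamp.
    Returns a Counter keyed by (a, b).
    """
    counts = Counter()
    for i, (t_a, a) in enumerate(events):
        for t_b, b in events[i + 1:]:
            if t_b - t_a > window_s:
                break  # events are sorted, so everything later is too far away
            if a != b:
                counts[(a, b)] += 1
    return counts


# Hypothetical event log: the air pump is switched off shortly after the TV
# is powered up, twice.
events = [
    (0, "tv_on"), (40, "air_pump_off"),
    (5000, "tv_on"), (5060, "air_pump_off"),
    (9000, "tv_on"), (9900, "light_on"),
]
print(follow_counts(events).most_common(1))
```

Pairs with a high count are candidates for a recommended routine. Adding dimensions such as weekday or room temperature would mean extending the key beyond the two event names.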

Did you notice that most calendars and timers are missing an important feature? It’s a piece of information that I personally find most interesting to have readily available.

It’s the information about how much time is left until the next appointment comes up. Even smartwatches, which should be jacks-of-all-trades with regard to time and schedule, do not display the “time until the next event”.

I came across this shortcoming when I started to look for this information. No digital assistant can tell me right away how much time is left until a certain event.

But the connected house is based on open technologies, so we can easily add this kind of feature ourselves. My major use cases for this are focused work, planning quick work-out breaks and, of course, making sure there’s enough time left to actually get enough sleep.

As you can see in the picture attached my watch will always show me the hours (or minutes) left until the next event. I use separate calendars for separate displays – so there’s actually one for when I plan to get up and do work-outs.

Having the hours left until something is supposed to happen at a glance – and of course being able to verbally ask through chat or voice in any room of the house how long until the next appointment gives peace of mind :-).
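The calculation itself is small. Here is a minimal sketch, assuming the calendar entries are already available as `(start, title)` pairs. How they are fetched from the calendar is left out, and the function names are made up for this example.

```python
from datetime import datetime, timedelta


def time_until_next(events, now):
    """Return (timedelta, title) for the next upcoming event, or None.

    events: iterable of (start: datetime, title: str) tuples.
    """
    upcoming = [(start, title) for start, title in events if start > now]
    if not upcoming:
        return None
    start, title = min(upcoming, key=lambda event: event[0])
    return start - now, title


def format_remaining(delta):
    """Format as hours and minutes, or minutes only when under an hour."""
    minutes = int(delta.total_seconds() // 60)
    if minutes >= 60:
        return f"{minutes // 60}h {minutes % 60}m"
    return f"{minutes}m"


now = datetime(2020, 1, 1, 8, 0)
events = [
    (datetime(2020, 1, 1, 7, 0), "already over"),
    (datetime(2020, 1, 1, 9, 30), "standup"),
    (datetime(2020, 1, 1, 12, 0), "lunch"),
]
remaining, title = time_until_next(events, now)
print(f"{format_remaining(remaining)} until {title}")  # 1h 30m until standup
```

Using one calendar per display then just means calling `time_until_next` with a different event list for each display.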

The Internet of Things might as well become your Internet of Money. Some feel the future lies with blockchain-related things like Bitcoin or Ethereum, and they might be right. Until then there’s also this huge field of personal finances that impacts our lives all day, every day.

And if you think about it, money has a lot of touch points with all situations of our lives, and so it also impacts the smart home.

Lots of sources of information can be accessed today and can help you stay on top of the things going on as well as make conscious decisions and plans for the future. To a large extent the information is even available in real time.

– cost tracking and reporting

– alerting and goal setting

– consumption and resource management

– like fuel oil (get alerted on price changes, …)

– stock monitoring and alerting

– and more advanced even automated trading

– bank account monitoring, in- and outbound transactions

– expectations and planning

– budgeting

After all, this is about getting away from lock-in applications, freeing your personal financial data and having an all-over dashboard of transactions, plans and status.

Water! Fire! Whenever one of those is released uncontrolled inside the house, it might mean danger to life and health.

With a couple of fish and turtle tanks spread out in the house, plus a server rack in the basement, it’s important to know at a moment’s notice when there’s a water leak.

As the server room also houses some water pumps for a well, you get all sorts of dangers mixed in one location: water and fire hazards.

To detect water leaks all tanks and the pumps for the well are equipped with water sensors which send out an alerting signal as soon as water is detected. This signal is picked up and pushed to MQTT topics and from there centrally consumed and reacted upon.

Of course the server rack is above the water level, so at least there is time to send out alerts even while power is out for the rest of the house (all necessary network and uplink equipment runs on its own batteries).

For alerting when there is smoke or a fire, the same logic applies. But for this some loud-as-hell smoke detectors are used. The smoke detectors interconnect with each other and form a mesh for alerting. If one goes off, all go off. I’ve connected one of them to its very own ESP8266, which sends the detected signal to another MQTT topic, effectively alerting in the event of a fire.

In one of the pictures you can see what happened when the basement water detector detected water while the pump was being replaced.

A lot of things in a household have weight, and knowing their weight might be crucial to health and safety.

Some of those weight applications might tie into this:

– your own body weight over a longer timespan

– the weight of your pets, weighed automatically (like on a kitty litter box)

– the weight of food and ingredients for recipes as well as their caloric and nutrition values

– keeping track of fill-levels on the base of weights

All those things are easily done with connected devices measuring weights. Like the kitty litter box at our house weighing our cat every time. Or the connected kitchen scale sending its gram measurements into an internal MQTT topic; an app on the kitchen iPad consumes those MQTT messages, displays them and adds more smarts, such as recipe weigh-in functions.

It’s not only surveillance but proactive use. There are beekeepers who monitor the weight of their beehives to see what’s what. You can monitor all sorts of things in the garden to get more information about their wellbeing (any plants, really).

We love music. We love it playing loud across the house. And when we did that in the past we missed some things happening around.

Like that delivery guy ringing the front doorbell and us missing an important delivery.

This happened a lot. UNTIL we retrofitted a little PCB to our doorbell circuit to make the house aware of ringing doorbells.

Now every time the doorbell rings, a couple of things can take place.

– push notifications to all devices, screens, watches – that wakes you up even while doing workouts

– pause all audio and video playback in the house

– take a camera shot of who is in front of the door pushing the doorbell

And: it’s easy to wire up more things, whatever those may be in the future.

So how do you manage all these sensors and switches, and lights, and displays and speakers…

One way has proven to be very useful and that is by using a standard calendar.

Yes, the one you got right on your smartphone or desktop.

A calendar is a simple manifestation of events in time, and thus it can be used to either log or schedule events.

So the smart house uses calendars to:

So what am I using these calendars for? Simple. They are there to track travelling, since I know when I was where by simply searching the calendar (screenshot). It’s easy to make out patterns and times of things happening since a calendar/timeline view feels natural. Setting on/off times and such is just bliss if you can do it from your phone in an actual calendar rather than in a tedious additional app or interface.

And of course: the house can only be smart about things when it has a way to gather and access that data. Reacting to its inhabitants’ upcoming and previous events adds several levels of smartness.

We all know it: After a long day of work you chilled out on your bean bag and fell asleep early. You gotta get up and into your bed upstairs. So usually light goes on, you go upstairs, into bed. And there you have it: You’re not sleepy anymore.

Partially this is caused by the light you turned on. If that light is bright enough and has the right color it will wake you up no matter what.

To fight this companies like Apple introduced things like “NightShift” into iPhones, iPads and Macs.

“Night Shift uses your computer’s clock and geolocation to determine when it’s sunset in your location. It then automatically shifts the colors in your display to the warmer end of the spectrum.”

Simple, eh? Now why does your house not do that, to prevent you from being ripped out of your sleepy state while tiptoeing upstairs?

Right! This is where the smart house will be smart.

Nowadays we’ve got all those funky LED bulbs that can be dimmed and even have their colours set. Why none of those market offerings come with this simple feature is beyond me:

After sunset, when turned on, default dim to something warmer and not so bright in general.

I did implement it, and it’s appropriately called the “U-Boot light”. Whenever we roam around the upper floor at night, the light that follows our steps (it’s smart enough to do that) will not go full blast but light up dimly in a reddish color to prevent wake-up calls.

The smart part being that it will take into account:

– movement in the house

– sunset and dawn depending on the current geographic location of the house (more on that later, no it does not fly! (yet))

– it’ll turn the light on and off according to the path you’re walking, using the various sensors that are around anyway
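The core decision can be sketched in a few lines. This assumes sunrise and sunset for the house's location are already known as local times, since computing them from the geographic position is a separate step. The 15 % brightness, the color names and the function name are placeholders, not the values the house uses.

```python
from datetime import time


def light_settings(now, sunrise, sunset):
    """Return (brightness_percent, color) for a lamp switched on at `now`.

    All three arguments are datetime.time values in local time.
    """
    is_night = now >= sunset or now < sunrise
    if is_night:
        return 15, "red"  # dim and warm, so nobody gets woken up
    return 100, "white"


sunrise, sunset = time(6, 30), time(20, 15)
print(light_settings(time(23, 0), sunrise, sunset))  # (15, 'red')
print(light_settings(time(12, 0), sunrise, sunset))  # (100, 'white')
```

The motion sensors then only decide which lamp to switch; the settings for that lamp come from this one function.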

Now that you got your home entertainment reacting to you making a phone call (use case #1) as well as your current position in the played audiobook (use case #3) you might want to add some more location awareness to your house.

If your house is smart enough to know where you are, outside, inside, in what room, etc. – it might as well react on the spot.

So when you leave/enter the house:

– turn off music playing – pause it and resume when you come back

– shut down unnecessary equipment to limit power consumption when not in use, and start it back up to the previous state (TVs, media centers, lights, heating) when you are back

– arm the cameras and motion sensors

– start running bandwidth-intensive tasks when nobody is using resources inside the house (like backing up machines, running updates)

– let the Roomba do its thing

– switch communication coming from the house into different states since it’s different for notifications, managing lists and spoken commands and so on.

There are a lot of things that benefit from location awareness.

Bonus points for outside house awareness and representing that like a “Weasley clock”…“xxx is currently at work”.

Bonus points combo breaker for using an open-source service like Miataru (http://miataru.com/#tabr3) for location tracking outside the house.

So you’re listening to this audio book for a while now, it’s quite long but really thrilling. In fact it’s too long for you to go through in one sitting. So you pause it and eventually listen to it on multiple devices.

We’ve got SONOS in our house and we’re using it extensively. Nice thing, all that connected goodness. It’s just short of some smart features. Like remembering where you paused and resuming a long audio book at the exact position you stopped the last time. Every time you play a different title it resets the play position and does not remember where you were.

With some simple steps the house will know the state of all players it has. Not only SONOS but maybe also your VCR or Mediacenter (later use-case coming up!).

Put the pieces together and you get this:

Whenever there’s a title being played longer than 10 minutes and it’s paused or stopped the smart house will remember who, where and what has been played and the position you’ve been at.

Whenever that person then resumes playback, the house will know where to seek to. It’ll resume playback at that exact position, on any supported system.
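The bookkeeping behind this can be sketched as a small store keyed by listener and title. This is an illustration only, not the code of the project linked below. The class and method names are made up, and reading the 10-minute rule as "time played so far" is my interpretation for this example.

```python
MIN_PLAYED_SECONDS = 10 * 60  # only bookmark titles played longer than 10 minutes


class BookmarkStore:
    """Remembers the last position per (listener, title)."""

    def __init__(self):
        self._bookmarks = {}  # (listener, title) -> position in seconds

    def on_pause(self, listener, title, position_s, played_s):
        """Call when playback is paused or stopped on any player."""
        if played_s >= MIN_PLAYED_SECONDS:
            self._bookmarks[(listener, title)] = position_s

    def resume_position(self, listener, title):
        """Position to seek to when playback resumes; 0 if nothing is stored."""
        return self._bookmarks.get((listener, title), 0)


store = BookmarkStore()
store.on_pause("anna", "Long Audiobook", position_s=1234, played_s=3600)
store.on_pause("anna", "Short Song", position_s=30, played_s=200)
print(store.resume_position("anna", "Long Audiobook"))  # 1234
print(store.resume_position("anna", "Short Song"))      # 0
```

Because the key does not include the player, the same bookmark applies no matter which room or device playback resumes on.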

Makes listening to these things just so much easier.

Bonus points for a mobile app that does the same thing but just on your phone. Park the car, go into the house, audiobook will continue playback, just now in the house instead of the car. The data is there, why not make use of it?

p.s.: I open-sourced a big part of that years ago: https://github.com/bietiekay/sonos-auto-bookmarker

I frequently use the xenim streaming network service, but I was missing the functionality to replay recent shows. With the wonderful Re-Live functionality made available through ReliveBot, I have now added this replay feature, and I have been using it a lot since.

Within the SONOS controller app it looks like this:

To set-up this service with your SONOS set-up just follow the instructions shown here: a new Music Service for SONOS

Source 1: xenim streaming network

Source 2: ReliveBot

Source 3: Download the Custom Service

Source 4: a new Music Service for SONOS

I have been using this for several years now. Even though all my workflow happens on Macintosh computers these days, I’ve kept this tool in my toolbox: Microsoft Image Composite Editor.

Now, after a long while with the 1.0 version, Microsoft Research decided to release a new version of the free tool with even more features and a new streamlined user interface. This is so much better than before.

[youtube]https://www.youtube.com/watch?v=zhdXLH2GYPA[/youtube]

“Image Composite Editor (ICE) is an advanced panoramic image stitcher created by the Microsoft Research Computational Photography Group. Given a set of overlapping photographs of a scene shot from a single camera location, the app creates a high-resolution panorama that seamlessly combines the original images. ICE can also create a panorama from a panning video, including stop-motion action overlaid on the background. Finished panoramas can be shared with friends and viewed in 3D by uploading them to the Photosynth web site. Panoramas can also be saved in a wide variety of image formats, including JPEG, TIFF, and Photoshop’s PSD/PSB format, as well as the multiresolution tiled format used by HD View and Deep Zoom.”

Source 1: http://research.microsoft.com/en-us/um/redmond/projects/ice/

I was on a business trip the other day and the office space of that company was very very nice. So nice that they had all sorts of automation going on to help the people.

For example when you would run into a room where there’s no light the system would light up the room for you when it senses your presence. Very nice!

There was some lag between me entering the room, being detected and the light powering up. So while running into a dark room, knowing I would be detected and soon there would be light, I shouted “Computer! Light!” while running in.

That Star Trek reference brought back an old idea: it would be so nice to be able to control things through omnipresent speech recognition.

I am aware that there’s Siri, Cortana, Google Now. But those things are creepy because they involve external companies. If there are things listening to me all day every day, I want them to be within the premises of the house. I want to know exactly, down to the data flow, what is going on and what is sent where. I do not want this stuff to leave the house at any time. Apart from that, those services are working okayish but well…

Let alone the hardware. Usually the existing assistants are carried around in smart phones and such. Very nice if you want to touch things prior to talking to them. I don’t want to. And no, “Hey Siri!” or “OK Google” is not really what I mean. Those things are not sophisticated enough yet. I was using “Hey Siri!” for less than 24 hours. Because in the first night it seemed to have picked up something going on while I was sleeping which made it go full volume “How can I help!” on me. Yes, there’s no “don’t listen when I am sleeping” thing. Oh it does not know when I am sleeping. Well, you see: Why not?

Anyway. What I wish there was:

And all that should be working on a basic level without internet access. Just like that.

So? Any volunteers?

Like every year the Chaos Communication Congress gathered thousands of people in one place between Christmas and New Year’s.

Since I was unable to attend in person this year, I’ve opted for the attending-by-stream option. All lectures are live-streamed by the awesome CCC Video Operations Center (C3VOC) and made available as recordings afterwards.

Since the choice of topics is enormous here are some I can recommend:

Source 1: http://events.ccc.de/congress/2014/wiki/Static:Main_Page

Source 2: http://en.wikipedia.org/wiki/Chaos_Communication_Congress

Source 3: http://c3voc.de/

Source 4: http://media.ccc.de/browse/congress/2014/

Racing cars with petrol engines are getting more and more uninteresting for the masses, and even Formula 1 faces competition from Formula E.

Now having humans inside cars racing a wide track is one thing, but using relatively cheap but extremely high-tech multi-copters with first-person-view cameras mounted on them and flown by crazy guys sitting next to the “racing track” is the next big thing!

[youtube]https://www.youtube.com/watch?v=6zDDsX5xYcA[/youtube]

As you can see it basically looks like the Endor-scenes from Star Wars. In fact it does look so interesting that I am tempted to try it myself…

“Console framework is cross-platform toolkit that allows to develop TUI applications using C# and based on WPF-like concepts.”

Source 1: http://en.wikipedia.org/wiki/Text-based_user_interface

Source 2: https://github.com/elw00d/consoleframework

Source 3: http://elw00d.github.io/consoleframework/

“Ever notice how people texting at night have that eerie blue glow?

Or wake up ready to write down the Next Great Idea, and get blinded by your computer screen?

During the day, computer screens look good—they’re designed to look like the sun. But, at 9PM, 10PM, or 3AM, you probably shouldn’t be looking at the sun.

f.lux fixes this: it makes the color of your computer’s display adapt to the time of day, warm at night and like sunlight during the day.

It’s even possible that you’re staying up too late because of your computer. You could use f.lux because it makes you sleep better, or you could just use it just because it makes your computer look better.”

Source: https://justgetflux.com/

The next time you stumble across a PDF file whose security settings do not allow you to print or copy/paste, do this (input.pdf and output.pdf are placeholder file names):

qpdf --decrypt input.pdf output.pdf

“QPDF is a command-line program that does structural, content-preserving transformations on PDF files. It could have been called something like pdf-to-pdf. It also provides many useful capabilities to developers of PDF-producing software or for people who just want to look at the innards of a PDF file to learn more about how they work.

QPDF is capable of creating linearized (also known as web-optimized) files and encrypted files. It is also capable of converting PDF files with object streams (also known as compressed objects) to files with no compressed objects or to generate object streams from files that don’t have them (or even those that already do). QPDF also supports a special mode designed to allow you to edit the content of PDF files in a text editor. For more details, please see the documentation links below.

QPDF includes support for merging and splitting PDFs through the ability to copy objects from one PDF file into another and to manipulate the list of pages in a PDF file. The QPDF library also makes it possible for you to create PDF files from scratch. In this mode, you are responsible for supplying all the contents of the file, while the QPDF library takes care of all the syntactical representation of the objects, creation of cross references tables and, if you use them, object streams, encryption, linearization, and other syntactic details.

QPDF is not a PDF content creation library, a PDF viewer, or a program capable of converting PDF into other formats. In particular, QPDF knows nothing about the semantics of PDF content streams. If you are looking for something that can do that, you should look elsewhere. However, once you have a valid PDF file, QPDF can be used to transform that file in ways perhaps your original PDF creation can’t handle. For example, programs generate simple PDF files but can’t password-protect them, web-optimize them, or perform other transformations of that type.”

Source 1: http://qpdf.sourceforge.net/

Source 2: https://github.com/qpdf/qpdf

“Shadertoy is the first application to allow developers all over the globe to push pixels from code to screen using WebGL since 2009.

This website is the natural evolution of that original idea. On one hand, it has been rebuilt in order to provide the computer graphics developers and hobbyists with a great platform to prototype, experiment, teach, learn, inspire and share their creations with the community. On the other, the expressiveness of the shaders has arisen by allowing different types of inputs such as video, webcam or sound.”

Source 1: https://www.shadertoy.com

So it happened to one of the VU+ Duos in the house. After a clean shutdown it did not boot up as expected but instead just showed the red light. It still blinked on remote keypresses and the harddisk spun up. Nothing else happened with it.

So it was bricked.

Reading the forums pointed to a capacitor on the board that seems to fail quite regularly. C807 is its name, and it’s located near the hard disk and the power-supply part of the VU+ Duo.

When I looked at the capacitor it did not seem to be faulty or anything. So, without the right tools to measure it, I decided to just give it a shot and replace the original 16V 220uF 85 degrees Celsius capacitor with a 105 degrees Celsius 16V 330uF one.

In my case I took out the board, to have a little bit of extra space, and cut off the old capacitor. Desoldering would be nicer looking but, well …

Soldering the new one onto the left-over pins of the old capacitor was a matter of seconds.

After putting the board back in, the VU+ Duo powered up and booted like new. Brilliant!

“Odd patterns of I/O latency can be hidden by line graphs and summary statistics, and revealed by histograms and heat maps. In my previous post I showed my Linux iosnoop tool, which can trace block device I/O along with timestamps and latency. This information can be visualized, revealing any odd patterns.”

Source: http://www.brendangregg.com/blog/2014-07-23/linux-iosnoop-latency-heat-maps.html

“The Infinadeck is the world’s first affordable omnidirectional treadmill that is designed to work both in augmented and virtual reality. This revolutionary device provides the missing link making it now possible to have a true Holodeck experience. You might say, “Reality just got bigger”.”

[youtube]https://www.youtube.com/watch?v=GoVAOfU8UJQ[/youtube]

Source: http://www.infinadeck.com/

“Nitrous is a backend development platform which helps software developers save time by cutting out the repetitive parts of creating development environments and automating them.

Once you create your first development environment, there are many features which will make development easier.”

So what you’re getting is:

Here are some screenshots to get you a feel for it:

Source: https://www.nitrous.io/

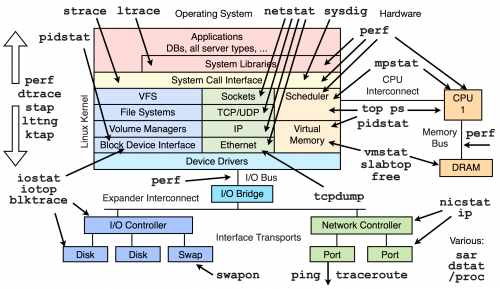

If you have ever experienced a mismatch between the performance you expected and the performance you got from a server or application running on Linux, you probably started to debug your way into why the application’s performance was not at the expected level.

With Linux being very mature, you get an enormous number of helpers and interfaces to debug the performance aspects of the operating system and the applications.

Want to see proof? Here – a map of almost all the thingies and interfaces you got:

Thankfully Brendan Gregg put together a page with videos and further links to drill into those interfaces and methods above.

I am using an external podcast download tool to stay updated on all podcasts I subscribed to. For this purpose SubSonic is a good choice – actually for a lot more also.

One of the quirks of the SONOS products is that Podcasts are not really well supported. In fact there is no support at all.

So I wrote a tool that extends the SONOS players with the functionality to “remember” play positions within audiobooks and podcasts. Now what’s left to properly have podcasts supported is a view of the most recently updated podcasts. Wouldn’t it be nice to have a “Folder View” in the SONOS controller of what’s new across all the different podcasts you are subscribed to?

Now here’s the trick:

Use a small script on any Raspberry Pi in the house to dynamically create hard links to the podcast files in a “Recently Updated Podcasts” folder.

The script is something like this:

find /where-your-podcasts-are/ -type f -printf '%TY-%Tm-%Td %TT %p\n' | sort | tail -n 25 | cut -c 32- | sed -e "s/^/ln \"/" -e "s/$/\"/" -e "s/$/ \"\/recentPodcasts\/\"/" | sh

This short line will go through all folders and subfolders in /where-your-podcasts-are/ and then create hard links in /recentPodcasts/ to the 25 most recent files.
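If you prefer something easier to read and extend than a shell pipeline, the same idea can be sketched in Python. This is an alternative illustration, not the script I run. The function name is made up, and it assumes the target folder lies outside the podcast tree and on the same filesystem, which hard links require.

```python
import os
from pathlib import Path


def link_recent(source_dir, target_dir, count=25):
    """Hard-link the `count` most recently modified files into target_dir.

    Returns the list of link paths, oldest first.
    """
    source, target = Path(source_dir), Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    files = [p for p in source.rglob("*") if p.is_file()]
    newest = sorted(files, key=lambda p: p.stat().st_mtime)[-count:]
    linked = []
    for path in newest:
        link = target / path.name
        if not link.exists():  # keep links created by earlier runs
            os.link(path, link)
        linked.append(link)
    return linked


# Example call with the placeholder paths used in this post:
# link_recent("/where-your-podcasts-are/", "/recentPodcasts/")
```

Like the one-liner, this never removes older links, so the folder grows over time unless it is cleaned up separately.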

That way, and when /recentPodcasts/ is made accessible to your SONOS controllers, you’ll have something like this:

Source 1: http://www.subsonic.org

Source 2: play position bookmarker