I like playing arcade games. There was an “arcade” in my home town and I used to go there after school quite frequently. It was a small place: maybe 5 arcade machines and some pinball machines.

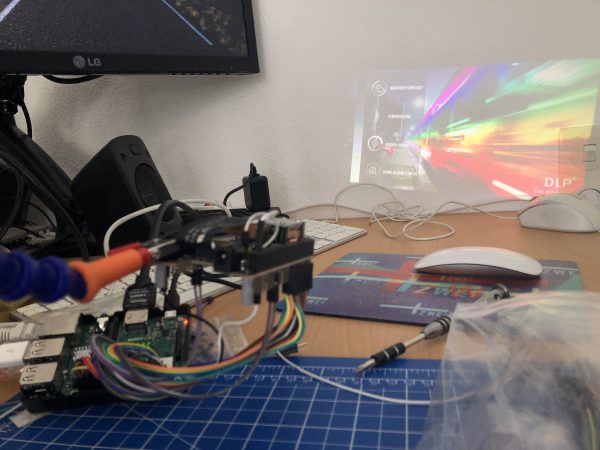

In February this year it occurred to me that with the power of the Raspberry Pi and a distribution called RetroPie I could build something that would bring back those games and let me play the ones I never could, because my arcade was so small back in the day.

To get a better idea of how to approach this I started to search around and found the build log of Holbrook Tech, where they built a “Bigger Bartop Arcade”.

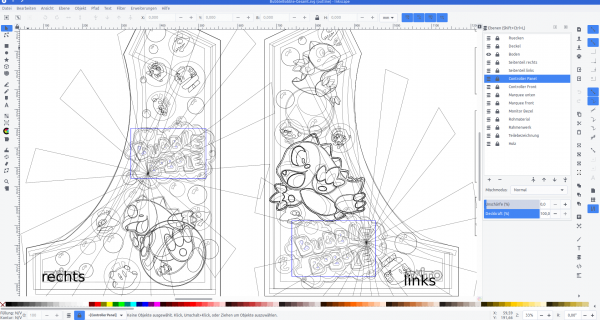

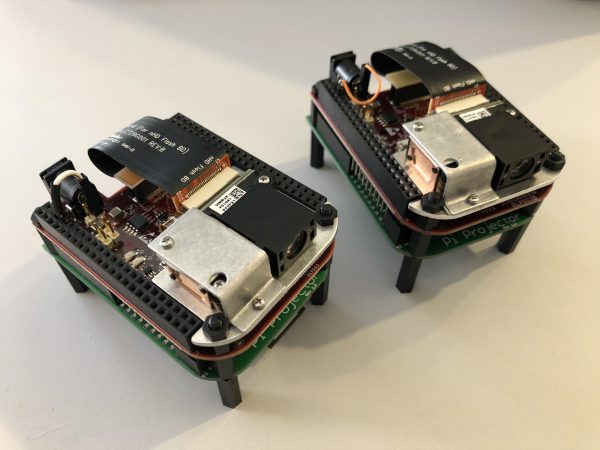

With their basic plans I started drawing in Inkscape and told my father about the plan. He was immediately in, as the plan was now to build not one but two bartop arcade machines. He would take on the woodwork and I would do the rest: procurement, electronics, wiring, design and “painting”.

While I took the Holbrook Tech schematics as a base, it quickly became apparent that I had to build and measure around the one big fixed thing in the middle: the screen.

screen

I wanted something decently sized that the Raspberry Pi would be able to drive and that would require no maintenance or further action once installed.

To find something that fits I fixed my requirements:

- between 24″ and 32″

- wide viewing angle without colour shift

- 1080p

- takes audio over HDMI and is able to push it out through a headphone jack

I eventually settled on a BenQ GW2780 27″ monitor, which ticked all the boxes for a reasonable price.
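Since everything else gets measured around the screen, it helps to know roughly how big a 27″ panel is before it arrives. The following is a minimal sketch, assuming a 16:9 panel and the nominal diagonal; the function name `panel_size_cm` is made up for this example, and the real cut-out still has to be measured on the actual monitor, bezel included.

```python
import math

CM_PER_INCH = 2.54

def panel_size_cm(diagonal_in, aspect=(16, 9)):
    """Return (width, height) in cm of the visible panel area.

    Assumes the nominal diagonal and an exact aspect ratio; the
    monitor's housing and bezel are NOT included.
    """
    ax, ay = aspect
    diag_units = math.hypot(ax, ay)          # length of the diagonal in aspect units
    width_in = diagonal_in * ax / diag_units
    height_in = diagonal_in * ay / diag_units
    return width_in * CM_PER_INCH, height_in * CM_PER_INCH

width_cm, height_cm = panel_size_cm(27)
print(f"visible area: {width_cm:.1f} cm x {height_cm:.1f} cm")
print(f"pixel density at 1080p: {1920 / (width_cm / CM_PER_INCH):.0f} ppi")
```

For a 27″ panel this comes out at a visible area of roughly 60 cm by 34 cm.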

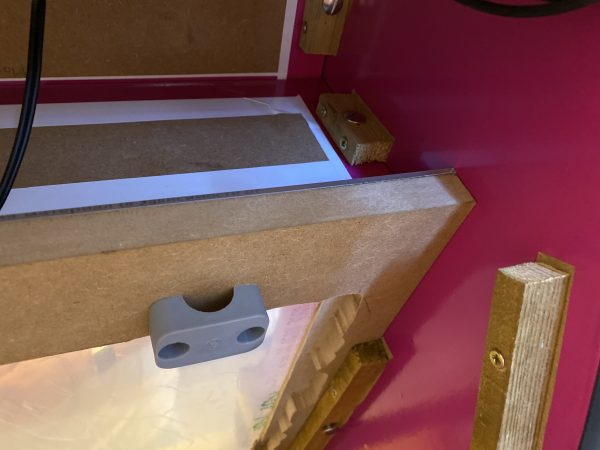

After the monitor arrived I carried it to my father’s house and we started to cut the bezel as a first try.

After some testing with plywood we went for MDF, as others on the internet had recommended. This made the cutting so much easier.

woodwork

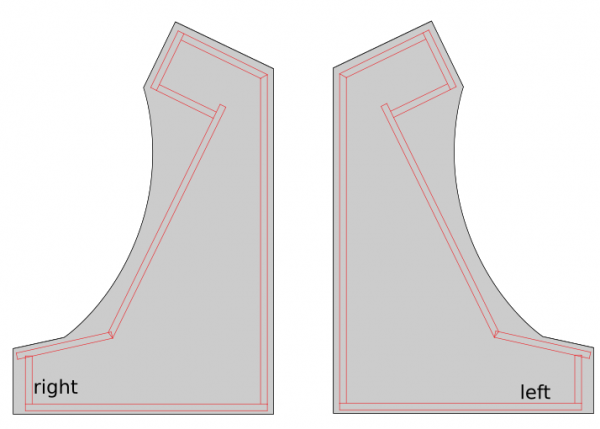

We went with standard 2cm MDF sheets and my father cut them to size with the measurements derived from the monitor bezel centerpiece.

Big thanks to my father for cutting so much wood so diligently! Over the next days he sent me pictures of what he’d made:

The side panels got a slot cut around the edge for the black T-molding to be added later.

electronics and wiring

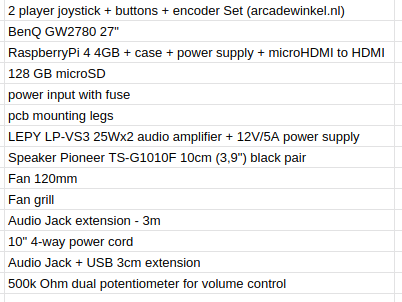

After about 2 weeks my father had built the first arcade out of sheets of MDF and I had taken delivery of the remaining pieces of hardware I had ordered after making a long list.

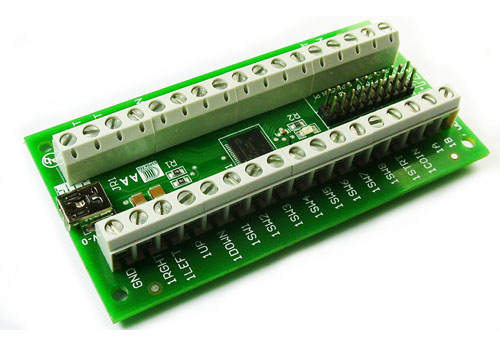

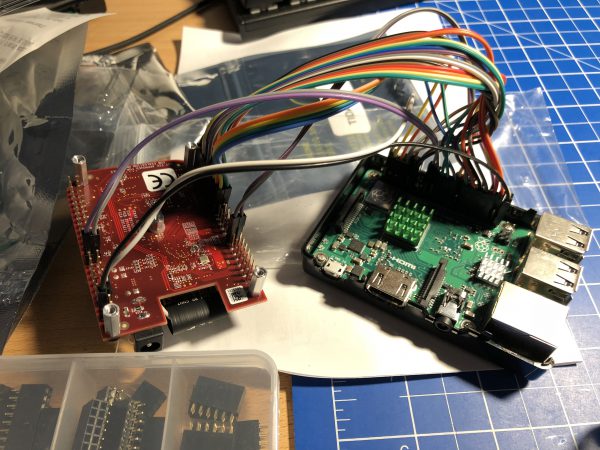

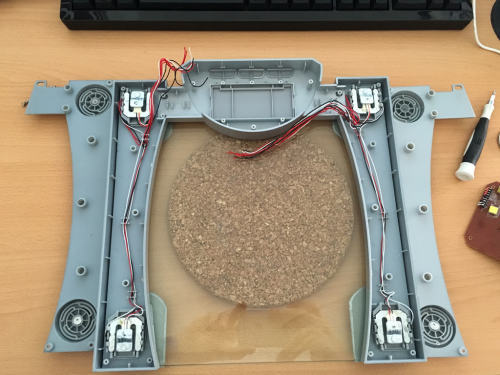

The most interesting part of the above list might be the 2-player joystick + buttons + encoder set.

It contains 2 standard 4/8-way switchable arcade joysticks, 10 buttons, all microswitches required and the Ultimarc I-PAC-2 joystick encoder.

So when I got the first arcade from my father I started to put in the electronics immediately.

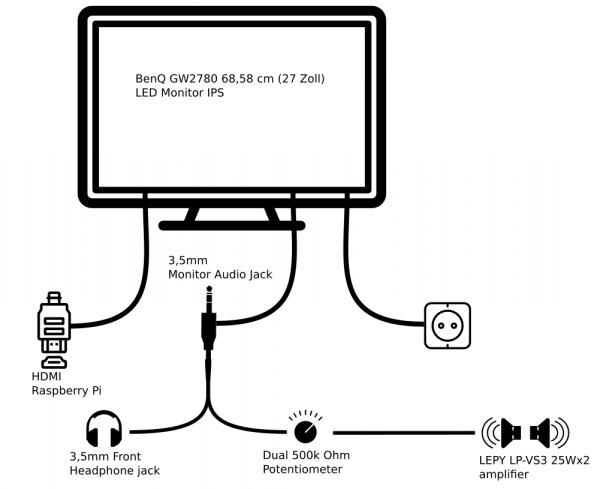

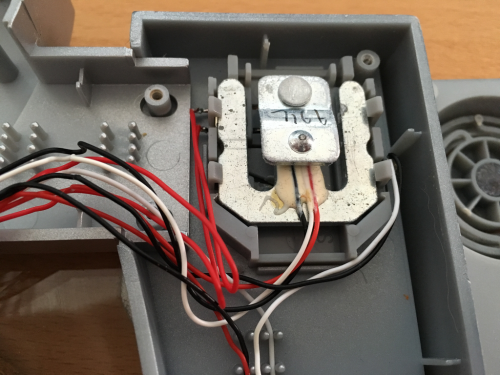

The sound was a bit more complicated. I wanted a volume control knob on the outside but also did not want to disassemble any audio amplifier.

I went with the simplest solution: a 500k Ohm dual potentiometer soldered into the headphone extension cable going to the amplifier. The potentiometer then got put into a pot, and a hole let it stick out so that a knob could be attached.

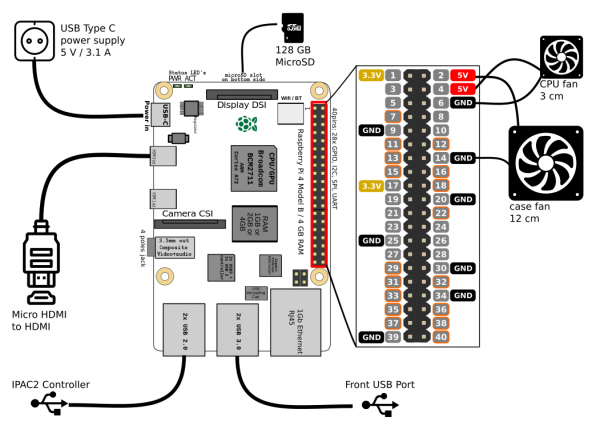

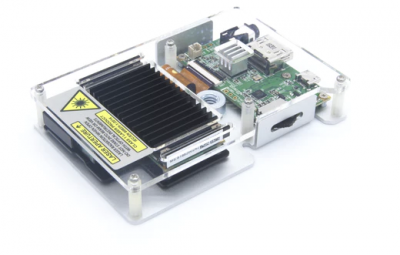

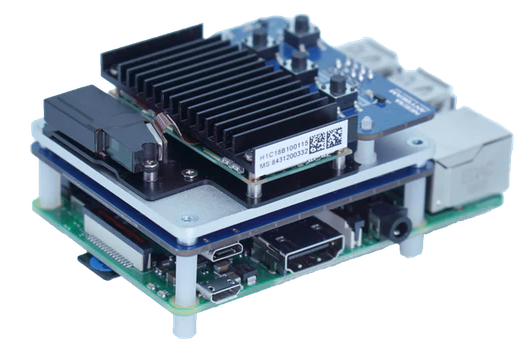

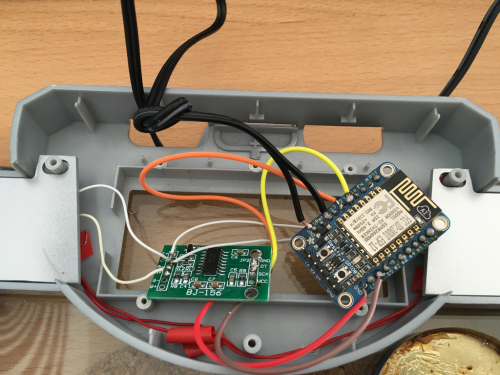

The Raspberry Pi set-up then only lacked cooling. The plan was to use a 120mm case fan to pull in air from the bottom and vent it out through another 120mm hole at the upper back. Additionally the Raspberry Pi would get its own small 30mm fan on top of its heatsink case.

I attached both fans directly to the Raspberry Pi, so I saved myself another power supply.

software

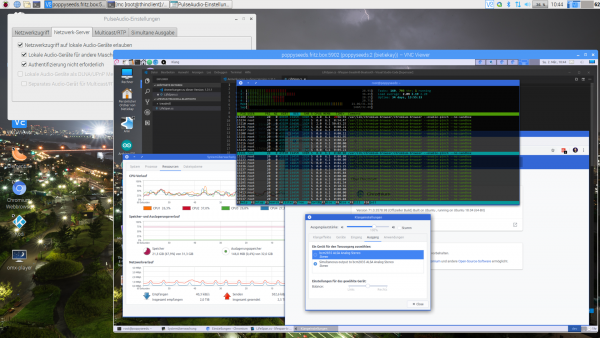

Now I had to make it all work together. As I wanted to use RetroPie in the newest 4.6 release, I set that up and hooked it all up.

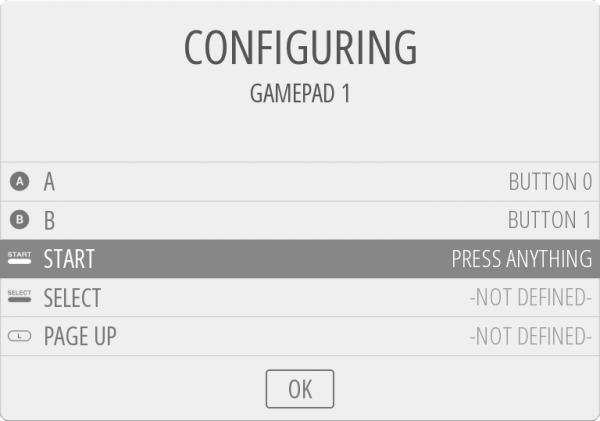

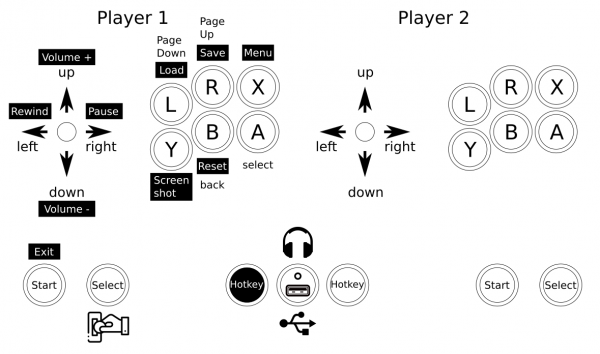

On first start-up EmulationStation asked me to configure the inputs. It had detected 2 gamepads as I had put the I-PAC-2 into gamepad mode before. You can do this with a simple mode-switch key combination that you need to hold for 10 seconds.

The configuration of the buttons of the two players went without any issue. First I set up the player 1 inputs. Then I re-ran the input configuration for the player 2 inputs.

The controls were straightforward. I wanted mainly 4-way games but with enough buttons to switch to some beat-em-ups at will.

So I configured a simple layout in RetroArch with some additional hotkeys.
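RetroArch keeps joypad hotkeys as plain `key = "value"` lines in its configuration files. As a rough sketch of what such a set of hotkeys can look like: the option names below are regular RetroArch settings, but the button numbers are made up for illustration and are not the layout used on this build, and `render_cfg` is just a hypothetical helper.

```python
# Hypothetical button numbers: the real ones depend on how the
# buttons are wired to the encoder.
HOTKEYS = {
    "input_enable_hotkey_btn": 8,   # must be held for any other hotkey to work
    "input_exit_emulator_btn": 9,   # hotkey + this button: quit the running game
    "input_menu_toggle_btn": 0,     # hotkey + this button: open the RetroArch menu
    "input_save_state_btn": 5,      # hotkey + this button: save state
    "input_load_state_btn": 4,      # hotkey + this button: load state
    "input_reset_btn": 1,           # hotkey + this button: reset the game
}

def render_cfg(bindings):
    """Render bindings as RetroArch-style 'key = "value"' lines."""
    return "\n".join(f'{key} = "{value}"' for key, value in bindings.items())

print(render_cfg(HOTKEYS))
```

Requiring a dedicated hotkey-enable button means a player cannot quit or reset a game by accident in the middle of a round.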

vinyl design

I tossed around several design ideas, obviously derived from the games I wanted to play and was looking forward to.

I thought of some Metal Slug or Cave shooter related designs. But then my wife had the best idea of them all: Bubble Bobble!

So I went and looked for inspiration on Bubble Bobble and found some, but none that stuck.

There was one good inspiration, and I based my design on it, just with a more intense purple color scheme.

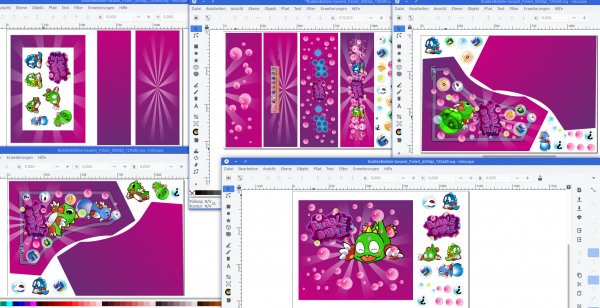

I used Inkscape to pull in bitmap graphics from Bubble Bobble and to vectorize them one by one, eventually ending up with a lot of layers of nice scalable vector graphics.

With the design finished I sliced it up and found a company that would print it on vinyl.

With the final arcade woodwork accessible to me I could take actual measurements and add 4cm of margin to each element. This way putting it on would hopefully be easier (it was!).

Originally I wanted to have it printed on a 4m by 1.2m sheet of vinyl. It all would have fit there.

But I found out that Inkscape could not export pixel data at this size with a pixel density of 600dpi. It was just too large for it to output.

So I eventually had to cut it all down into 5 pieces of 1.2m by 80cm each.
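To get a feel for why the full sheet was a problem, here is a small back-of-the-envelope calculation. It assumes an uncompressed raster with 4 bytes per pixel (RGBA); that figure and the helper names are assumptions for this sketch, and the exact limit Inkscape ran into is not known.

```python
MM_PER_INCH = 25.4

def pixels(length_m, dpi):
    """Number of pixels needed to cover length_m metres at the given dpi."""
    return round(length_m * 1000 / MM_PER_INCH * dpi)

def raster_size(width_m, height_m, dpi=600, bytes_per_pixel=4):
    """Return (width_px, height_px, uncompressed size in bytes).

    bytes_per_pixel=4 assumes 8-bit RGBA, which is an assumption here.
    """
    w = pixels(width_m, dpi)
    h = pixels(height_m, dpi)
    return w, h, w * h * bytes_per_pixel

sheets = {"full sheet": (4.0, 1.2), "one piece": (1.2, 0.8)}
for label, (w_m, h_m) in sheets.items():
    w, h, nbytes = raster_size(w_m, h_m)
    print(f"{label}: {w} x {h} px, about {nbytes / 1e9:.1f} GB uncompressed")
```

Under these assumptions the full 4m by 1.2m sheet is about 94,488 by 28,346 pixels, or more than 10 GB of raw pixel data, while a single 1.2m by 80cm piece is about 28,346 by 18,898 pixels and roughly 2 GB.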

After about 7 days everything arrived at my house, printed on vinyl. I immediately laid everything out and checked whether it would fit. It did!

Now everything had to go onto the wood. I had done a test run before ordering to check whether it would stick securely to the wood. It did stick very nicely. Putting it on took some intense fiddling, but it eventually worked out really, really great.

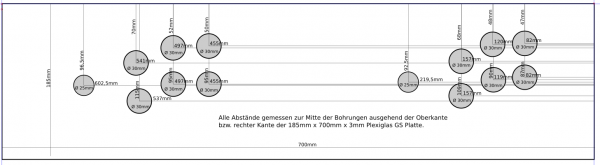

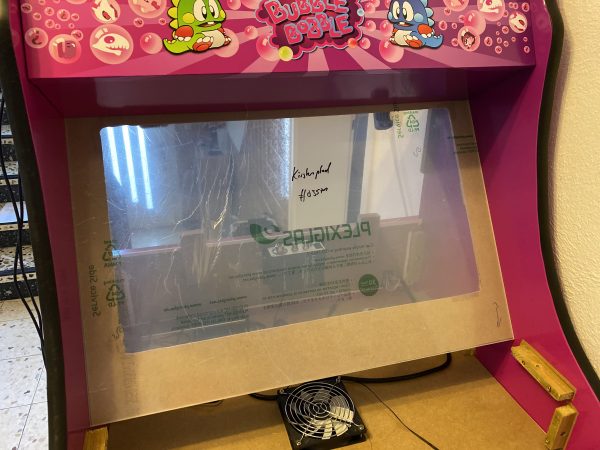

Now it was time for some acrylic. I wanted a good bezel and cover for the monitor as well as for the handrest and the front buttons.

Cutting acrylic myself was out of the question, so I went with a local company that would laser-cut acrylic to my specification.

I sent them the schematics, the measurements and the panels for reference, and 4 days later the acrylic arrived. We could then put the last bits together for completion!

Result

I am really happy with how this turned out, especially since I am a hopeless case at anything that requires actual work with my hands. Somehow everything worked out with this one.

I still entertain the idea of a version centered on vertical shoot-em-ups… but maybe some day.

If you have any questions or feedback let me know!

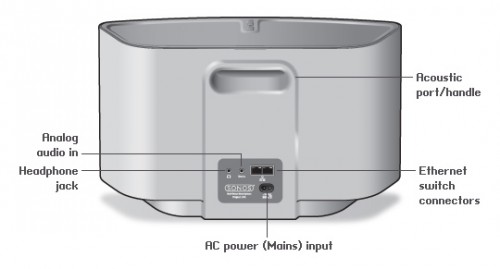

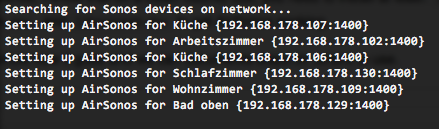

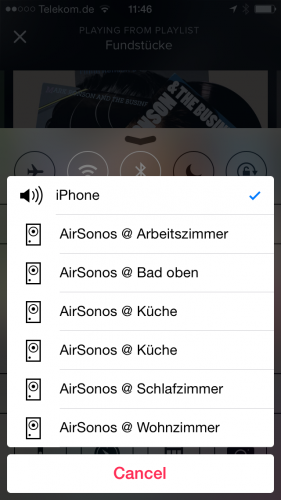

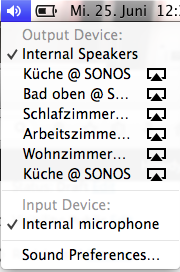

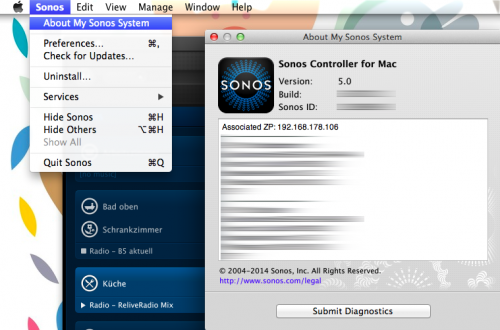
