Working in the IT industry requires us to spend copious amounts of time focused on our screens mostly sitting at our desks. But this does not have to be that way.

For me sitting down for long times creates a lot of unwanted effects and essentially leads to me not being able to focus anymore properly.

In 2015 my wife and I attacked that “health problem” as a team. And in the 12 months until 2016 we both lost 120 kg / 260lbs added up together in body weight and completely changed the way we deal with food and sport.

With that I also changed the way I work. Sitting down was from now on the exception.

Coincident with this lifestyle change my then-employer Rakuten rolled out it’s then new workplace concept and everyone got great electric stand-up desks that allowed you to change the height up and down effortlessly.

When I started with SIEMENS of course their workplace concept included standing desks as well!

For those times I am working from home one of the desks is equipped with a standing desk with an additional twist.

So this desk let’s you work while standing. But it also allows you to walk while you work. You can set the speed from 0 to 6.4 km/h.

Given a good headset I personally can attend conference calls without anyone noticing I am walking with about 4 km/h paces.

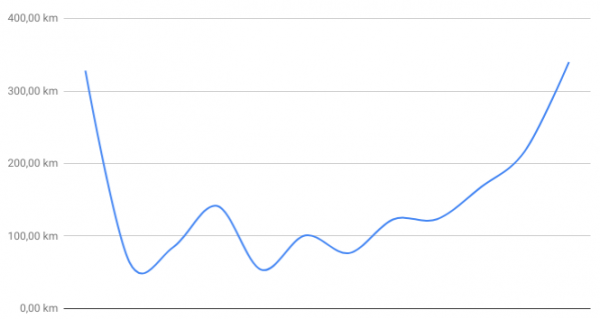

When I am spending a whole day working from this desk it is not uncommon to accumulate 25-40 km of total distance without really noticing it while doing so. Of course: later the day you’ll feel 40km in one way or the other

It took a bit of getting used to as your feet are doing something entirely different from what the rest of the body is doing. But at least for me it started to feel natural very quickly.

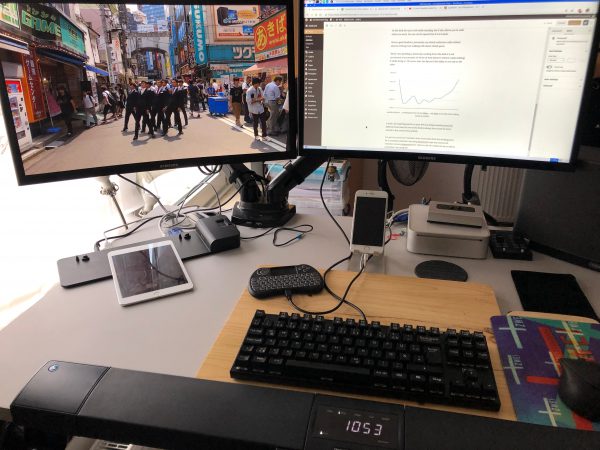

I’ve put two curved 24″ monitors onto it and aside from the docking ports for a company notebook I am using thinclients to get my usual work machines screens teleported there. There’s a bit of a media set-up as well as sometimes I am using one of the screens for watching videos.

For those now interested in the purchase of such a great walking desk: I can only recommend doing so! But be aware of some thoughts:

There are not a lot of vendors of such appliances. And those vendors are not selling a lot of them. This means: be ready for a € 1000+ purchase and be ready to shell out some good money on extended warranties.

My first desk + treadmill was replaced 3 times before. It was LifeSpans first generation of treadmill desks and it just kept exploding. I actually had glowing sparks of fire spitting out of the first generation treadmill.

I’ve returned it for no money loss and waited for the second generation. This current, second generation of LifeSpan treadmill desks is really doing it for me for longer than the first generation ever had without breaking. Looking at the use of the device I would see it as a purchase over 5 years. After 5 years of actual and consistent use I wouldn’t be overly annoyed if the mechanical parts of the appliance would stop working. I am not expecting such a device to live much longer anyhow.

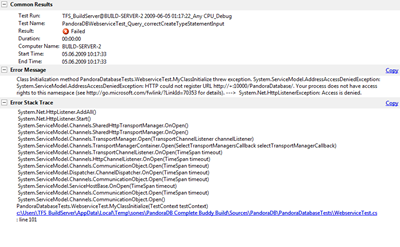

Energy consumption wise it’s quite impressive how much energy this thing consumes. I wasn’t quite expecting those levels. So here’s for you to know:

So just around 500 Watts when in use. The 65W base load is the monitors and computers on top.

I can only recommend to try something like this out. Unfortunately it’s quite hard to find a place to try it out. At least I was not able to try before buy.

But then again I could answer your questions if you had any.

click on it to see it big

click on it to see it big 東京

東京