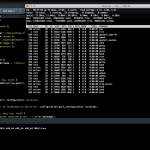

Termshark is a terminal based UI that you can use to debug network traffic when you do not have a GUI available to you.

Podcast Search Engine

If you need something new to listen to, podcasts might be what is missing in your life.

A good place to start is a podcast directory that offers categories and search functionality, such as fyyd:

Of course, since you can find all sorts of podcasts there, you can also find ours.

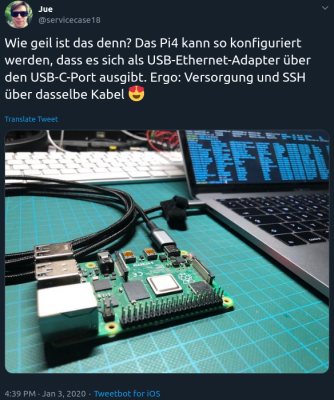

TIL: Pi Zero and Pi4 can be used as USB ethernet adapters

And if you want it too, there is the how-to available on the RaspberryPi forum.

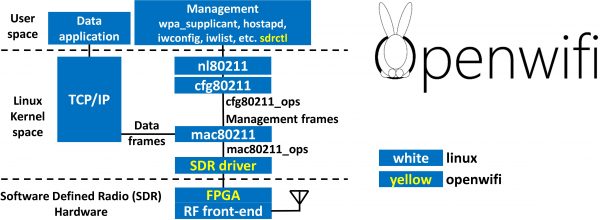

Linux mac80211 compatible full-stack Wi-Fi design based on SDR

In a tweet we were given an early Christmas present: open-sdr released an open source Wi-Fi stack that uses software-defined radio (SDR) technology to implement actual working Wi-Fi.

Features:

- 802.11a/g; 802.11n MCS 0~7; 20MHz

- Mode tested: Ad-hoc; Station; AP

- DCF (CSMA/CA) low MAC layer in FPGA

- Configurable channel access priority parameters:

  - duration of RTS/CTS, CTS-to-self

  - SIFS/DIFS/xIFS/slot-time/CW/etc

- Time slicing based on MAC address

- Easy to change bandwidth and frequency:

  - 2MHz for 802.11ah in sub-GHz

  - 10MHz for 802.11p/vehicle in 5.9GHz

- On roadmap: 802.11ax

See this demonstration:

RaspberryPis to Access Points!

Current generations of RaspberryPi single board computers (from the 3 up) come with WiFi on board. That is great, because it can be combined with the internal ethernet port or additional USB network interfaces to create a nice wired/wireless router. This is what the RaspAP project is about:

This project was inspired by a blog post by SirLagz about using a web page rather than ssh to configure wifi and hostapd settings on the Raspberry Pi. I began by prettifying the UI by wrapping it in SB Admin 2, a Bootstrap based admin theme. Since then, the project has evolved to include greater control over many aspects of a networked RPi, better security, authentication, a Quick Installer, support for themes and more. RaspAP has been featured on sites such as Instructables, Adafruit, Raspberry Pi Weekly and Awesome Raspberry Pi and implemented in countless projects.

This is going to be very useful while traveling. I plan to replace my GL-INET router, which shows signs of age.

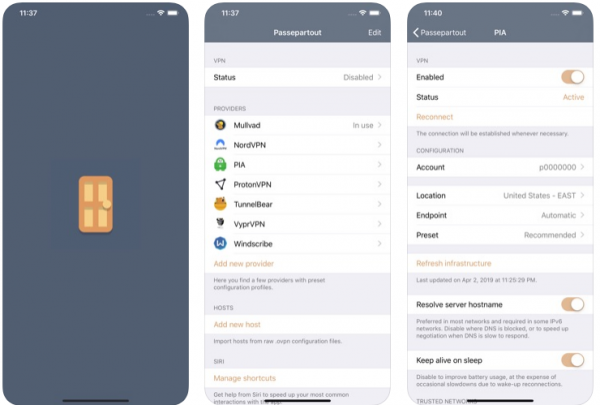

smart OpenVPN client for iOS

There is already a free and well integrated OpenVPN client for iOS devices. It works quite well, but it lacks some comfort features that are now available through alternative iOS implementations of OpenVPN.

OpenVPN is an open-source commercial software that implements virtual private network techniques to create secure point-to-point or site-to-site connections in routed or bridged configurations and remote access facilities. It uses a custom security protocol that utilizes SSL/TLS for key exchange.

https://en.wikipedia.org/wiki/OpenVPN

Meet Passepartout, the iOS OpenVPN client that comes with lots of comfort features. Of main interest to me is that Passepartout is aware of the connection you’re currently using and can adapt its VPN tunnel status accordingly.

Passepartout is a smart OpenVPN client perfectly integrated with the iOS platform. Passepartout is the only app you need for both well-known OpenVPN providers and your personal OpenVPN servers.

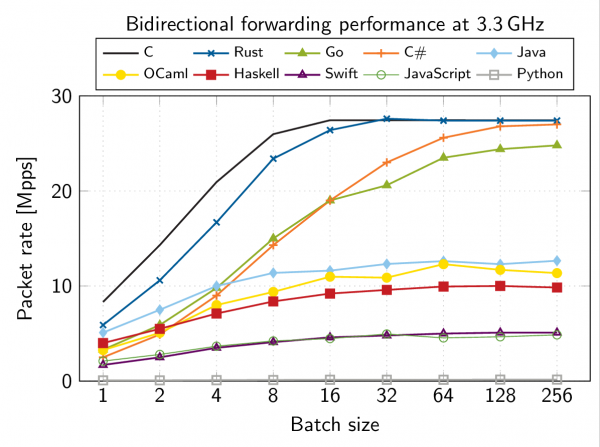

Writing Network Drivers in C#

Somebody had to do it. Maximilian Stadlmeier did:

User space network drivers on Linux are often used in production environments to improve the performance of network-heavy applications. However, their inner workings are not clear to most programmers who use them. ixy aims to change this by providing a small educational user space network driver, which gives a good overview of how these drivers work, using only 1000 lines of C code.

While the language C is a good common denominator, which many developers are familiar with, its syntax is often much more difficult to read than that of more modern languages and makes the driver seem more complex than it actually is. For this thesis I created a C# version of ixy, named ixy.cs, which utilizes the more modern syntax and additional safety of the C# programming language in order to make user space network driver development even more accessible. The viability of C# for driver programming will be analyzed and its advantages and disadvantages will be discussed.

The actual implementation (with other programming languages as well) can be found here.

Apparently it’s not as slow as you might think:

TIL: iPhone Visual Voicemail is IMAP

Today I learned that the Apple iPhone re-purposes the IMAP protocol to implement the voice mail feature.

By sniffing the network traffic it was possible to examine the IMAP protocol revealing username and the corresponding hashed password (which allows to repeat a successful login) and of course all voicemail files. We want to highlight, that all the voicemail files have been transferred unencrypted.

Assessment of Visual Voicemail from 2012

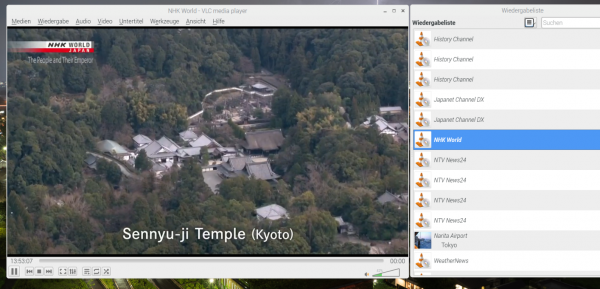

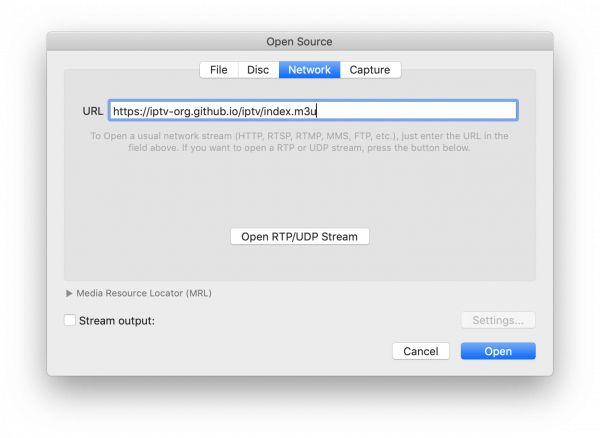

a source of iptv streams

Collection of 8000+ publicly available IPTV channels from all over the world.

Internet Protocol television (IPTV) is the delivery of television content over Internet Protocol (IP) networks.

Github: iptv-org/iptv

Using the streams is as simple as it gets: Just open the provided playlist files in your favorite media player. The above example is the VLC media player.
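If you would rather inspect such a playlist from a script than from a media player, a minimal sketch could look like the following. It assumes the common extended M3U layout, where an `#EXTINF` line carries the channel name and the next line is the stream URL. The sample playlist, its channel names and the example.com URLs are made up for illustration and are not taken from the iptv-org repository.

```python
from typing import List, NamedTuple, Optional


class Channel(NamedTuple):
    name: str
    url: str


def parse_m3u(text: str) -> List[Channel]:
    """Collect (name, url) pairs from an extended M3U playlist."""
    channels: List[Channel] = []
    pending_name: Optional[str] = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#EXTINF:"):
            # Simplification: take everything after the last comma as the
            # display name, so names containing commas are cut short.
            pending_name = line.rsplit(",", 1)[-1].strip()
        elif line.startswith("#"):
            continue  # other directives such as #EXTM3U
        else:
            # A non-comment line is a stream URL; fall back to the URL
            # itself when no #EXTINF line preceded it.
            channels.append(Channel(pending_name or line, line))
            pending_name = None
    return channels


# Hypothetical sample data, only for demonstration.
SAMPLE = """#EXTM3U
#EXTINF:-1 tvg-id="demo1",Demo Channel One
http://example.com/stream1.m3u8
#EXTINF:-1,Demo Channel Two
http://example.com/stream2.m3u8
"""

channels = parse_m3u(SAMPLE)
for channel in channels:
    print(f"{channel.name}: {channel.url}")
```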

good wireguard tutorial

If you, like me, are looking into new and emerging tools and technologies, you might also look at WireGuard.

WireGuard® is an extremely simple yet fast and modern VPN that utilizes state-of-the-art cryptography. It aims to be faster, simpler, leaner, and more useful than IPsec, while avoiding the massive headache. It intends to be considerably more performant than OpenVPN. WireGuard is designed as a general purpose VPN for running on embedded interfaces and super computers alike, fit for many different circumstances. Initially released for the Linux kernel, it is now cross-platform (Windows, macOS, BSD, iOS, Android) and widely deployable. It is currently under heavy development, but already it might be regarded as the most secure, easiest to use, and simplest VPN solution in the industry.

bold wireguard website statement

To get started with WireGuard on Linux and iOS I’ve used the very nice tutorial by Graham Stevens: WireGuard Setup Guide for iOS.

This guide will walk you through how to setup WireGuard in a way that all your client outgoing traffic will be routed via another machine (server). This is ideal for situations where you don’t trust the local network (public or coffee shop wifi) and wish to encrypt all your traffic to a server you trust, before routing it to the Internet.

WireGuard Setup Guide for iOS.

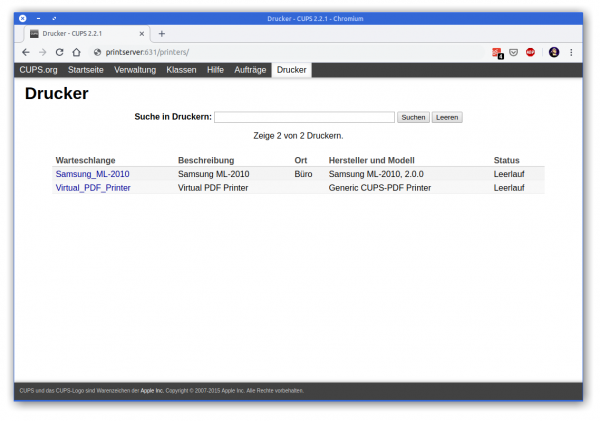

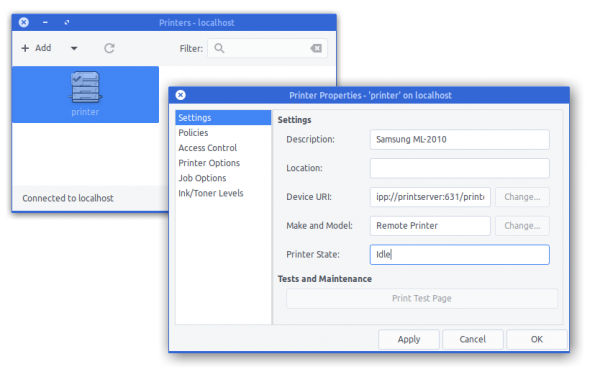

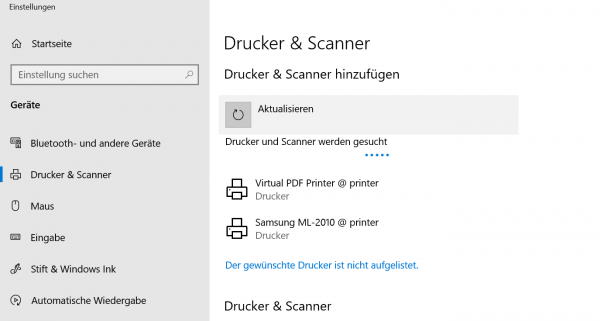

retrofit an old printer to be available on the network

In 2007 I became the proud owner of a Samsung ML-2010 mono laser printer. It has done a great job ever since and I can recall changing the toner just once so far.

So you can tell: I am not a heavy printer user. Every so often I have to print a sheet of paper to put on a package or to fill out a form. A laser printer is the perfect fit for this pattern, as its toner does not go bad or dry out like the ink in inkjet printers does.

So I still like the printer and it’s in perfect working condition. I’ve just recently refilled the toner for almost no money. But this printer needs to be physically connected to the computer that wants to print.

As usage patterns have changed significantly in the last 12 years, this printer needs to be brought into today’s networked world.

Replacing it with a new printer is not an option. All printers I could potentially buy are more expensive, and their toner is much more expensive to refill. No-can-do.

If only there was an easy way to get the printer network ready. Well, turns out, there is!

First, let me introduce an open source project: CUPS

CUPS (formerly an acronym for Common UNIX Printing System) is a modular printing system for Unix-like computer operating systems which allows a computer to act as a print server. A computer running CUPS is a host that can accept print jobs from client computers, process them, and send them to the appropriate printer.

Wikipedia

A good, cheap and energy-efficient way to run a CUPS host is a Raspberry Pi. I own several first-generation models that have been replaced by much more powerful ones over the past years.

So I took one Raspberry Pi and followed the set-up steps: Installing the Raspberry Pi Print Server Software.

And now – what did I get?

I got a networked Samsung Laser Printer. No frills, no problems at all.

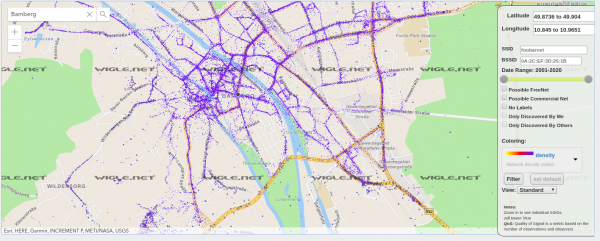

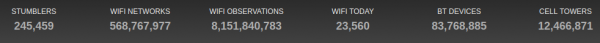

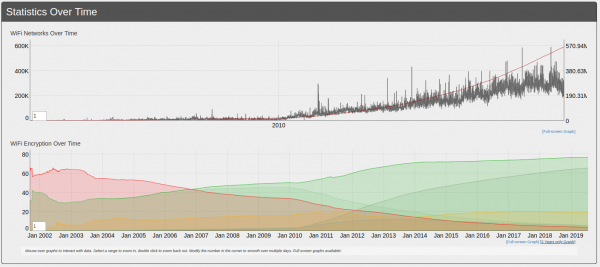

Wireless Network Mapping – data source and data sink

If you work with wireless networks and do programming or mobile app development involving things like user location, you might find this useful.

Take thousands of users and you’ve got the world’s wifi networks mapped…

WiGLE (Wireless Geographic Logging Engine) is a project which takes wireless network data + location and puts it into a big database. On top of storing it, it gives you access to that data.

We consolidate location and information of wireless networks world-wide to a central database, and have user-friendly desktop and web applications that can map, query and update the database via the web.

https://wigle.net/faq

So what’s my use-case? Apart from the obvious, I will use this to find out more about those fellow travelers around me. Many people probably do the same as me: travel with a small wifi / 4G access point. Whenever such an access point shows up in scans, its path becomes traceable.

I am curious to see which access point around me is already in the million-mile club…

PixelFed – Federated Image Sharing

In search of alternatives to the traditional centralized hosted social networks a lot of smart people have started to put time and thought into what is called “the-federation”.

The Federation refers to a global social network composed of nodes that talk to each other. Each of them is an installation of software which supports one of the federated social web protocols.

What is The Federation?

You may already have heard about projects like Mastodon, Diaspora*, Matrix (Synapse) and others.

The PixelFed project seems to be gaining traction, as the first documentation and sources have apparently been made available.

PixelFed is a federated social image sharing platform, similar to instagram. Federation is done using the ActivityPub protocol, which is used by Mastodon, PeerTube, Pleroma, and more. Through ActivityPub PixelFed can share and interact with these platforms, as well as other instances of PixelFed.

the-federation

I am posting this here as a note in my personal logbook.

Given that there’s already a Dockerfile I will give it a try as soon as possible.

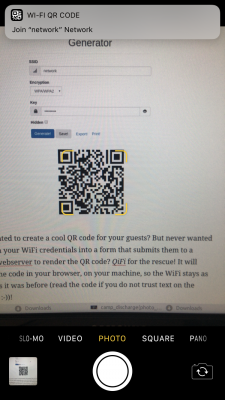

WiFi QR Code Generator

Whenever we arrive at a place that we have not been before it is important to get properly connected to the internet.

Finding wifi SSIDs and typing passwords is tedious and prone to errors. There is an easier way of course!

The owner of the wireless network can generate a QR code that you can easily take a photo of and your phone will automatically prompt you to log into the wireless network without you having to type anything.

On your phone it looks like this:

To generate these QR codes, which contain all the information visitors or new users need to connect, you can use this simple online generator:

Ever wanted to create a cool QR code for your guests? But never wanted to type in your WiFi credentials into a form that submits them to a remote webserver to render the QR code? QiFi for the rescue! It will render the code in your browser, on your machine, so the WiFi stays as secure as it was before (read the code if you do not trust text on the internet :-))!

Qifi.org

Don’t worry: your access point information is not transferred over the internet. Since the tool is open source, this can be checked: at the time of writing the data was held only in HTML5 local storage in the local browser and was not transferred out.
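What such a QR code contains is just a short text string. The following sketch builds that string using the widely used `WIFI:` scheme (as documented by the ZXing barcode project); it is an illustration of the format, not QiFi's actual code. The function names and the sample credentials are made up for this example. Any QR code library can then render the resulting string.

```python
def escape_wifi_field(value: str) -> str:
    """Backslash-escape the characters that are special in the payload."""
    # The backslash must be handled first so that the escapes added for
    # the other characters are not escaped a second time.
    for ch in ("\\", ";", ",", ":", '"'):
        value = value.replace(ch, "\\" + ch)
    return value


def wifi_qr_payload(ssid: str, password: str, auth: str = "WPA") -> str:
    """Build the text a WiFi QR code encodes: WIFI:T:<auth>;S:<ssid>;P:<password>;;"""
    if auth not in {"WPA", "WEP", "nopass"}:
        raise ValueError(f"unsupported authentication type: {auth}")
    parts = [f"T:{auth}", f"S:{escape_wifi_field(ssid)}"]
    if auth != "nopass":
        parts.append(f"P:{escape_wifi_field(password)}")
    return "WIFI:" + ";".join(parts) + ";;"


# Hypothetical credentials, only for demonstration.
payload = wifi_qr_payload("Guest Network", "s3cret;pass")
print(payload)  # WIFI:T:WPA;S:Guest Network;P:s3cret\;pass;;
```

Building the string locally keeps the same property the generator advertises: the credentials never have to leave your machine.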

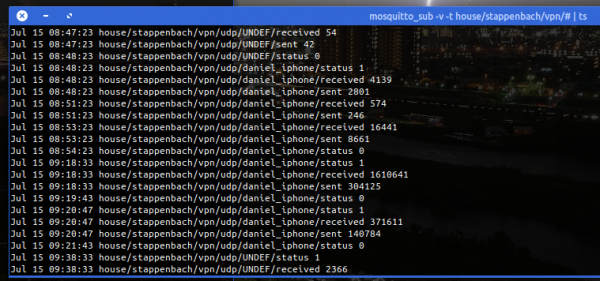

bridge the openvpn-status.log to MQTT

For a big part of my VPN needs I use OpenVPN. Especially on iOS devices the deep integration just works, even to the degree that you enable the VPN once and the device will transparently keep it up and re-establish connections when required.

OpenVPN protocol has emerged to establish itself as a de facto standard in the open source networking space with over 50 million downloads. OpenVPN is entirely a community-supported OSS project which uses the GPL license.

VISIT THE OPENVPN COMMUNITY

I am using the dockerized version of OpenVPN. From there I’ve got several ways to get telemetry data (like connections, traffic, …) out of it. One way is the management interface provided by OpenVPN. Another way is by using the default openvpn-status.log file.

Since the easiest way out-of-the-box was to use the logfile I sat down and wrote a little 2mqtt bridge for the contents of the logfile.

It’s also dockerized so you can easily set it up by pointing the openvpn-status.log to the right volume/mount-point.

When done it’ll produce MQTT messages like this:

The set-up and start-up is rather simple:

docker run -d --restart=always --volume /openvpnstatus2mqttconfiglocation/:/configuration --volume /openvpnstatusloglocation/:/openvpn openvpn-status2mqtt

MQTT Broker, Topic-Prefix and so on are configured with the .json configuration file found along the project.

Of course everything I wrote is available on GitHub as open-source.
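To give an idea of what such a bridge has to do, here is a minimal sketch, not the actual implementation from the repository. It assumes the default (version 1) layout of openvpn-status.log, where the client list starts with a `Common Name,Real Address,...` header and ends at `ROUTING TABLE`. The topic prefix `openvpn`, the payload field names and the sample log content are assumptions made for this example. Publishing is left out, so any MQTT client library could take the resulting topic/payload pairs.

```python
import json
from typing import Dict, List, Tuple


def parse_client_list(status_text: str) -> List[Dict[str, str]]:
    """Extract the rows of the client list section as dictionaries."""
    clients: List[Dict[str, str]] = []
    header: List[str] = []
    for line in status_text.splitlines():
        if line.startswith("Common Name,"):
            header = line.split(",")  # column names of the client list
            continue
        if line.startswith("ROUTING TABLE"):
            break  # the client list section ends here
        if header:
            clients.append(dict(zip(header, line.split(","))))
    return clients


def to_mqtt_messages(clients: List[Dict[str, str]],
                     prefix: str = "openvpn") -> List[Tuple[str, str]]:
    """Turn each client row into a (topic, JSON payload) pair."""
    messages = []
    for client in clients:
        topic = f"{prefix}/{client['Common Name']}"  # hypothetical topic layout
        payload = json.dumps({
            "real_address": client["Real Address"],
            "bytes_received": int(client["Bytes Received"]),
            "bytes_sent": int(client["Bytes Sent"]),
            "connected_since": client["Connected Since"],
        })
        messages.append((topic, payload))
    return messages


# Made-up sample in the version 1 status file layout.
SAMPLE_STATUS = """OpenVPN CLIENT LIST
Updated,Thu Jan  3 10:00:00 2019
Common Name,Real Address,Bytes Received,Bytes Sent,Connected Since
iphone,203.0.113.7:51234,12345,67890,Thu Jan  3 09:00:00 2019
ROUTING TABLE
Virtual Address,Common Name,Real Address,Last Ref
10.8.0.2,iphone,203.0.113.7:51234,Thu Jan  3 09:59:00 2019
GLOBAL STATS
Max bcast/mcast queue length,0
END
"""

messages = to_mqtt_messages(parse_client_list(SAMPLE_STATUS))
for topic, payload in messages:
    print(topic, payload)
```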

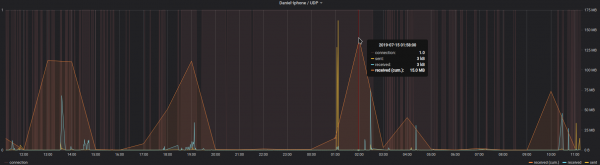

The immediate outcome of this is that with the always-on VPN I am now getting statistics about, for example, the data consumption of my iPhone.

The big traffic spike at 1 AM is the backup that my iPhone does every night. Very interesting also how often the connection is closed and opened again even without me using the phone…

IP-over-DNS

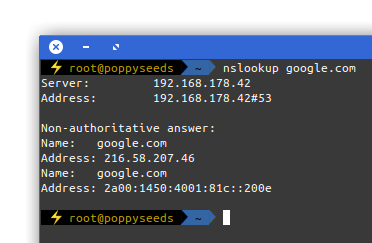

Picture yourself in this situation. You connect to a network and nothing works. Except for this:

It is quite common to have DNS working in networks while everything else is not. Sometimes the network requires a log-in to give you access to a small portion of the internet.

Now, with the help of a tool called iodine, you can get access to the full internet with only DNS working in your current network:

iodine lets you tunnel IPv4 data through a DNS server. This can be usable in different situations where internet access is firewalled, but DNS queries are allowed.

It runs on Linux, Mac OS X, FreeBSD, NetBSD, OpenBSD and Windows and needs a TUN/TAP device. The bandwidth is asymmetrical with limited upstream and up to 1 Mbit/s downstream.

iodine

Setting it up is a bit of work, but provided you have access to a well connected server on the free portion of the internet anyway, it can be done easily.

Of course the source is on github.
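The basic trick behind tunneling data through DNS can be sketched in a few lines: arbitrary bytes are encoded into characters that are valid in a hostname and sent as a query for a subdomain of a domain whose name server you control. The sketch below only illustrates this idea with Base32; it is not iodine's actual encoding or protocol, and the domain `t.example.com` and the function names are made up. The length limits (63 characters per label, 253 per name) are the standard DNS limits.

```python
import base64

MAX_LABEL = 63   # DNS limit for a single label
MAX_NAME = 253   # DNS limit for a full name in text form


def encode_to_hostname(data: bytes, domain: str) -> str:
    """Pack data into the labels of a query name below the given domain."""
    if not data:
        raise ValueError("nothing to encode")
    encoded = base64.b32encode(data).decode("ascii").rstrip("=").lower()
    labels = [encoded[i:i + MAX_LABEL] for i in range(0, len(encoded), MAX_LABEL)]
    name = ".".join(labels + [domain])
    if len(name) > MAX_NAME:
        raise ValueError("payload too large for a single DNS query name")
    return name


def decode_from_hostname(name: str, domain: str) -> bytes:
    """Reverse encode_to_hostname on the receiving name server."""
    suffix = "." + domain
    if not name.endswith(suffix):
        raise ValueError("query is not for the tunnel domain")
    encoded = name[:-len(suffix)].replace(".", "").upper()
    padding = "=" * (-len(encoded) % 8)  # restore the stripped Base32 padding
    return base64.b32decode(encoded + padding)


chunk = b"GET / HTTP/1.1"
query_name = encode_to_hostname(chunk, "t.example.com")
print(query_name)
print(decode_from_hostname(query_name, "t.example.com"))
```

Because each query name can only carry a small amount of data, the upstream direction is slow, which fits the asymmetric bandwidth mentioned in the iodine description.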

Time estimation in software development

I’ve found myself in these spots several times in my life. Either I had to deliver on an estimate or I had to acknowledge an estimation and deal with the outcomes.

If you are involved in anything digital / software this is a recommended piece to read:

Anyone who built software for a while knows that estimating how long something is going to take is hard. It’s hard to come up with an unbiased estimate of how long something will take, when fundamentally the work in itself is about solving something. One pet theory I’ve had for a really long time, is that some of this is really just a statistical artifact.

Why software projects take longer than you think
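A minimal sketch of how such a statistical artifact could arise, under an assumed model: suppose the ratio of actual to estimated time is log-normally distributed and the estimates are right "on median". The distribution choice and the value of `SIGMA` are assumptions for this illustration, not figures from the quoted article. Even then the average project overruns, because the long tail of late tasks pulls the mean above the median.

```python
import random
import statistics

rng = random.Random(42)   # fixed seed so the run is repeatable
SIGMA = 1.0               # assumed spread of the blow-up factor

# Blow-up factor = actual time / estimated time, with a median of 1.0,
# meaning half of the tasks finish earlier than estimated.
blowups = [rng.lognormvariate(0.0, SIGMA) for _ in range(10_000)]

median_blowup = statistics.median(blowups)
mean_blowup = statistics.mean(blowups)

print(f"median blow-up: {median_blowup:.2f}")
print(f"mean blow-up:   {mean_blowup:.2f}")
```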

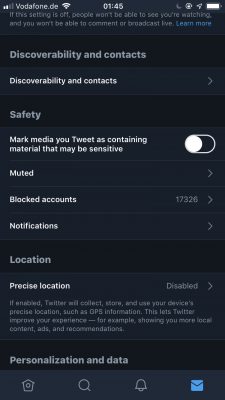

blocking ads and promotions on twitter

When a group of people with the same problem meets, they work together and sometimes do an experiment.

Nobody likes ads or “promotional content”.

At some point Twitter chose to push ads in the official Twitter client into every timeline and decided to make them look like normal timeline content.

It did not take long for a group of people who do not like that to meet and join forces: for about a week now a very small group of people has been merging their Twitter block lists using the Block Together service.

This experiment is great since it’s completely effortless. You link your block lists once and from then on you keep using Twitter like you always did. Whenever you see a paid promotion you “block it”. From then on, nobody in the group will see promotions and timeline entries from this specific Twitter user (unless you actively follow them).

And the effect after about a week is just great! I cannot see a downside so far, and the amount of promotional content on my timeline has shrunk to a degree where I do not see any at all.

This is a great way to get rid of content you’ve never wanted and focus on the information you want.

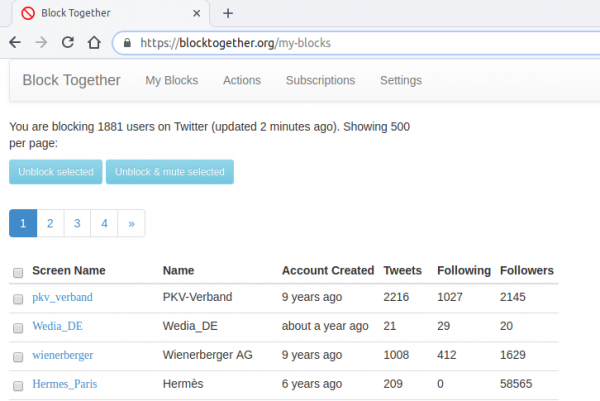

Twitter Blocklists

My usual Twitter use looks like this: I am scrolling through the timeline reading up on things and I see an ad. I click block and never again will I see anything from this advertiser, as I’ve written here earlier.

As Twitter is also a place of very disturbing content there are numerous services built around the official block list functionality. One of those services is “Block Together“.

Block Together is designed to reduce the burden of blocking when many accounts are attacking you, or when a few accounts are attacking many people in your community. It uses the Twitter API. If you choose to share your list of blocks, your friends can subscribe to your list so that when you block an account, they block that account automatically. You can also use Block Together without sharing your blocks with anyone.

blocktogether.org

I’ve signed up and apparently this is as easy as it gets when you want to share block lists.

There seem to be more people that use Twitter like I do. For example Volker Weber wrote about his handling of “promoted content”.

My block list on Twitter currently includes 1881 accounts and these are only accounts that put paid promotions without my request into my timeline.

I’ve read that Volker has such a long list as well – maybe it’s worth sharing as Volker is one person I would trust on his decisions for such a list. (vowe is a good mother!)

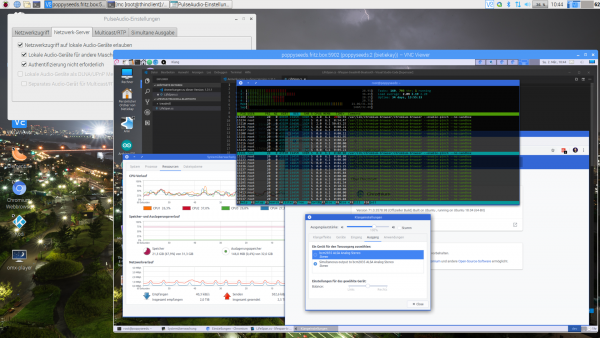

bringing the thinclient back

I had to solve a problem. The problem was that I wanted to have the exact same session and screen shared across different workplaces/locations simultaneously: from looking at the same screen on a different floor to having the option to just walk over to the lab desk, solder some circuits together and have the very same documents already open on the screens over there.

One option was to use a tablet or notebook and carry it around. But this would not solve the need to have the screen content displayed on several screens simultaneously.

Also I did not want to rely on the computing power of a notebook / tablet alone. Of course those would get more powerful over time. But each step would mean I would have to purchase a new one.

Then in a move of desperation I remembered the “old days” when ThinClients used to be the new kid in town. And then I tried something:

I had just recently moved all house server infrastructure over to Linux and Docker. So what would keep me from utilizing the computing power of that one beefy server in the basement to host all of my desktop needs?

It turns out: Nothing really. Docker is well prepared to host desktop environments. With a bit of tweaking and TigerVNC Xvnc I was able to pre-configure the most current Ubuntu to start my preferred Mate desktop environment in a container and expose it through VNC.

If you wanted to replicate this I would recommend this repository as a starting point.

Even better I found that the RaspberryPi single board computers come with a free pre-licensed and accelerated version of RealVNC.

So I took one of those RaspberryPis, booted up the Raspbian Desktop lite and connected to the Docker container’s VNC port. It all worked just like that.

The screenshot above holds an additional piece of information for you. I wanted sound! Video works smoothly up to a certain size of the moving video – after all those RaspberryPis only come with sub Gbit/s wired networking. But to get sound working I had to add some additional steps.

First, on the RaspberryPi that should output the sound to its speakers, you need to install and set up pulseaudio + paprefs. Once you have configured it to accept audio over the network, you can configure the client to send its audio there.

In the docker container a simple command would then redirect all audio to the network:

pax11publish -e -S thinclient

Just replace “thinclient” with the ip or hostname of your RaspberryPI. After a restart Chrome started to play audio across the network through the speakers of the ThinClient.

Now all my screens have those RaspberryPis attached to them, and with Docker I can even run as many desktop environments in parallel as I wish. And because VNC does not care how many connections are made to one session, I can have all workplaces across the house connected to the same screen, seeing the same content at the same time.

And yes: the UI and overall feel is silky smooth. And since VNC adapts to some extent to the available bandwidth by changing the quality of the image, the VNC sessions are very much usable even across the internet. Given that there’s only 1 port for video and 1 port for audio it’s even possible to tunnel those sessions to anywhere you might need them.

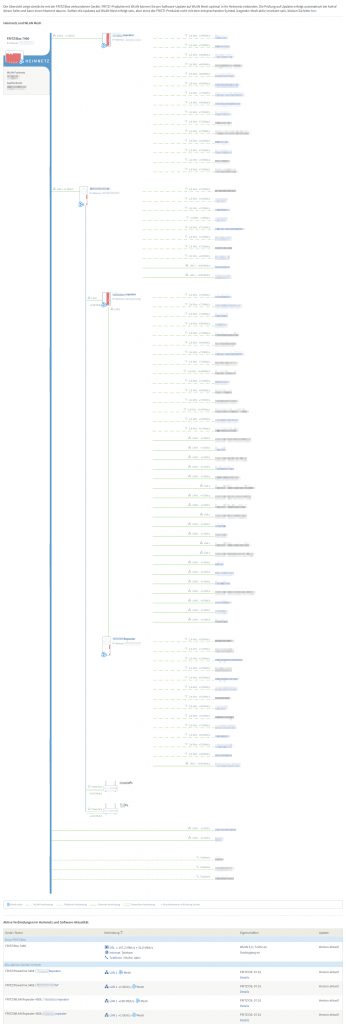

wireless mesh network

Since AVM has started to offer wireless mesh network capabilities in their products through software updates I started to roll it out in our house.

Wireless mesh networks often consist of mesh clients, mesh routers and gateways. Mobility of nodes is less frequent. If nodes constantly or frequently move, the mesh spends more time updating routes than delivering data. In a wireless mesh network, topology tends to be more static, so that routes computation can converge and delivery of data to their destinations can occur. Hence, this is a low-mobility centralized form of wireless ad hoc network. Also, because it sometimes relies on static nodes to act as gateways, it is not a truly all-wireless ad hoc network.

Wikipedia

With the rather complex physical network structure and above-average number of wireless and wired clients the task wasn’t an easy one.

To give an impression of what is there right now:

So there’s a bit of almost everything. There are wired connections (1Gbit to most places) and there are wireless connections. There are 5 access points overall, of which 4 are just mesh repeaters coordinated by the Fritz!Box mesh-master.

There’s also powerline used for some of the more distant rooms of the mansion. All in all there are 4 powerline connections; all of them are above 100 Mbit/s and one is even used for video streaming.

All is managed by a central Fritz!Box and all is well.

Really, without issues. Even interesting spanning-tree implementations like the one from SONOS are being properly routed and have always worked without issues.

The only other-than-default configuration I had made to the Fritz!Box is that all well-known devices have set their v4 IPs to static so they are not frequently switching around the place.

How do I know it works? After enabling the Mesh things started working that have not worked before. Before the Mesh set-up I had several accesspoints independently from each other on the same SSID. Which would lead to hard connection drops if you walked between them. Roaming did not work.

With mesh enabled I’ve not seen this behavior anymore. All is stable even when I move actively between all floors and rooms.

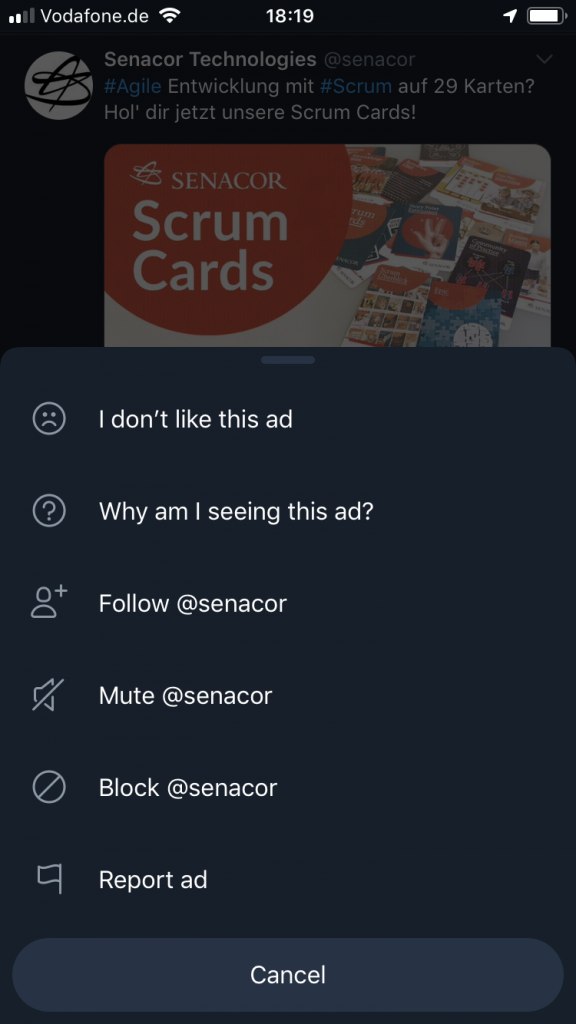

How to get me to actively avoid your products

It is a simple one-step process: shove unasked advertising in my face. Bonus points for loud full-blast audio right from the start.

If I ever see unasked advertising, whether it tries to be sneaky or not, I am going to block it without noticing from whom or for what it was.

But when it’s shown so often and is so intrusive that I take note of your brand, that brand is not considered for future business anymore.

That is especially true for services where I am the product, paying with my data.

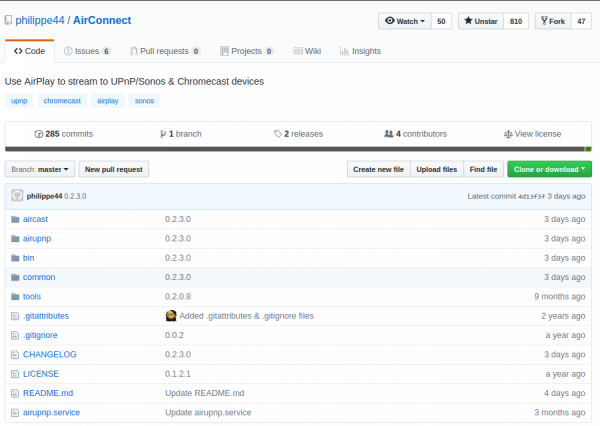

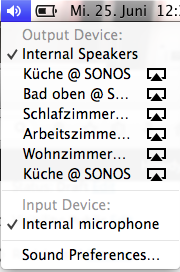

Apple Airplay for SONOS (in Docker)

We’ve got a couple of SONOS based multi-room-audio zones in our house and with the newest generation of SONOS speakers you can get Apple Airplay. Fancy!

But the older hardware does not support Apple Airplay due to its limited hardware. This is too bad.

So once again Docker and OpenSource + Reverse-Engineering come to the rescue.

AirConnect is a small but fancy tool that bridges SONOS and Chromecast to Airplay effortlessly. Just start and be done.

It works a treat and all of a sudden all those SONOS zones become Airplay devices.

There is also a nice dockerized version that I am using.

“making your home smarter” – use case #12 – How much time do I have until…?

Did you notice that most calendars and timers are missing an important feature? It is a piece of information that I personally find most interesting to have readily available.

It’s the information about how much time is left until the next appointment comes up. Even smartwatches, which should be jacks-of-all-trades with regard to time and schedule, do not display the “time until the next event”.

I came across this shortcoming when I started to look for this information. No digital assistant can tell me right away how much time is left until a certain event.

But the connected house is also based upon open technologies, so we can easily add these kinds of features ourselves. My major use cases for this are focused work, planning quick work-out breaks and of course making sure there’s enough time left to actually get enough sleep.

As you can see in the picture attached my watch will always show me the hours (or minutes) left until the next event. I use separate calendars for separate displays – so there’s actually one for when I plan to get up and do work-outs.

Having the hours left until something is supposed to happen at a glance – and of course being able to verbally ask through chat or voice in any room of the house how long until the next appointment gives peace of mind :-).
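The calculation itself is small. Here is a minimal sketch of the "time until the next event" logic, assuming the event start times have already been fetched from a calendar. The function names and the sample dates are made up for this example, and how the result reaches a watch face or a chat bot is left out.

```python
from datetime import datetime, timedelta
from typing import Iterable, Optional


def time_until_next(events: Iterable[datetime], now: datetime) -> Optional[timedelta]:
    """Return the time left until the earliest future event, or None."""
    upcoming = [start for start in events if start > now]
    if not upcoming:
        return None
    return min(upcoming) - now


def format_remaining(delta: timedelta) -> str:
    """Render a timedelta as hours and minutes, e.g. for a watch face."""
    total_minutes = int(delta.total_seconds() // 60)
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours}h {minutes:02d}m" if hours else f"{minutes}m"


# Hypothetical calendar entries: one already in the past, two upcoming.
now = datetime(2024, 1, 1, 20, 30)
events = [
    datetime(2024, 1, 1, 18, 0),
    datetime(2024, 1, 2, 6, 15),
    datetime(2024, 1, 1, 22, 0),
]

remaining = time_until_next(events, now)
print(format_remaining(remaining) if remaining else "nothing scheduled")
```

Using one list of start times per calendar matches the idea of having separate calendars for separate displays.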

“making your home smarter”, use case #11 – money money money

The Internet of Things might as well become your Internet of Money. Some feel the future lies with blockchain related things like BitCoin or Ethereum and they might be right. Until then there’s also this huge field of personal finances that impacts our lives all day, every day.

And if you think about it, money has a lot of touch points throughout all situations of our lives and so it also impacts the smart home.

Lots of sources of information can be accessed today and can help to stay on top of the things going on as well as to make conscious decisions and plans for the future. To a large extent the information is even available in realtime.

– cost tracking and reporting

– alerting and goal setting

– consumption and resource management

– like fuel oil (get alerted on price changes, …)

– stock monitoring alerting

– and more advanced even automated trading

– bank account monitoring, in- and outbound transactions

– expectations and planning

– budgeting

After all, this is about getting away from lock-in applications, freeing your personal financial data and having an overall dashboard of transactions, plans and status.

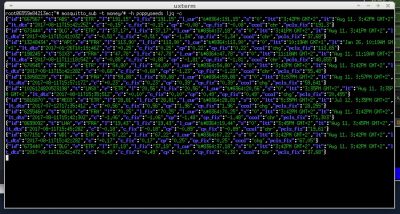

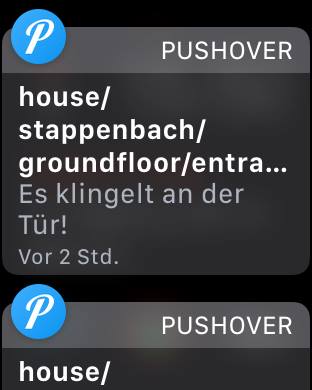

“make your home smarter”, use case #7 – hear that doorbell ringing!

We love music. We love it playing loud across the house. And when we did that in the past we missed some things happening around.

Like that delivery guy ringing the front doorbell and us missing an important delivery.

This happened a lot. UNTIL we retrofitted a little PCB to our doorbell circuit to make the house aware of ringing doorbells.

Now every time the doorbell rings a couple of things can take place.

– push notifications to all devices, screens, watches – that wakes you up even while doing workouts

– pause all audio and video playback in the house

– take a camera shot of who is in front of the door pushing the doorbell

And it’s easy to wire up more things, whatever those may be in the future.
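One way to picture this kind of wiring is a small publish/subscribe dispatcher: the doorbell only announces that it rang, and every reaction is a separate handler that can be added later. This is a sketch of the pattern, not the set-up used in the house. The topic name `house/doorbell` and the handlers are hypothetical stand-ins that only return a description of what they would do.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, str]], str]


class EventBus:
    """Tiny in-process publish/subscribe dispatcher."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, event: Dict[str, str]) -> List[str]:
        # Call every handler registered for the topic, in subscription order.
        return [handler(event) for handler in self._handlers.get(topic, [])]


# Stand-in handlers: real ones would talk to phones, players and cameras.
def send_push(event: Dict[str, str]) -> str:
    return f"push notification: doorbell at {event['door']} door"


def pause_playback(event: Dict[str, str]) -> str:
    return "paused all audio and video playback"


def take_snapshot(event: Dict[str, str]) -> str:
    return f"camera snapshot taken at {event['door']} door"


bus = EventBus()
for handler in (send_push, pause_playback, take_snapshot):
    bus.subscribe("house/doorbell", handler)

results = bus.publish("house/doorbell", {"door": "front"})
for line in results:
    print(line)
```

Adding a new reaction later only takes one more `subscribe` call; the doorbell side stays untouched.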

Nitrous – full IDE in your browser – with Collaboration!

“Nitrous is a backend development platform which helps software developers save time by cutting out the repetitive parts of creating development environments and automating them.

Once you create your first development environment, there are many features which will make development easier.”

So what you’re getting is:

- a virtual machine operated for you and set-up with a single click

- A full-featured IDE in your browser

- Code-Collaboration by inviting others to edit your project

- a debugging environment in which you can test-run and work with your code

Here are some screenshots to give you a feel for it:

Source: https://www.nitrous.io/

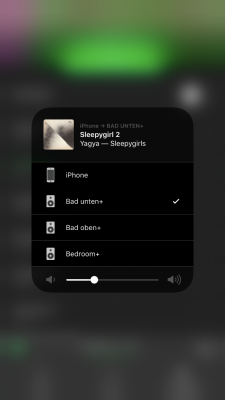

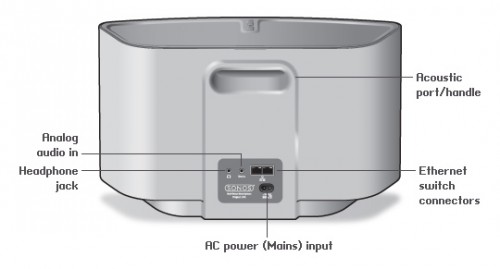

using the RaspberryPi to make all SONOS speakers support Apple Airplay

Airplay allows you to conveniently play music and videos over the air from your iOS or Mac OS X devices on remote speakers.

Since we just recently “migrated” almost all audio equipment in the house to SONOS multi-room audio, we were missing the convenience of just pushing a button on the iPad or iPhones to stream audio from those devices inside the household.

To retrofit the Airplay functionality there are two options I know of:

1: Get Airplay compatible hardware and connect it to a SONOS Input.

You have to get Airplay hardware (like the Airport Express/Extreme,…) and attach it physically to one of the inputs of your SONOS Set-Up. Typically you will need a SONOS Play:5 which has an analog input jack.

2: Set-Up a RaspberryPi with NodeJS + AirSonos as a software-only solution

You will need a stock RaspberryPi online in your home network. Of course this can run on virtually any other device or hardware that can run NodeJS. For the Pi setting it up is a fairly straight-forward process:

You start with a vanilla Raspbian Image. Update everything with:

sudo apt-get update

sudo apt-get upgrade

Then install NodeJS according to this short tutorial. To set up the AirSonos software you will need to install additional avahi software. In particular, this was needed for my install:

sudo apt-get install git-all libavahi-compat-libdnssd-dev

You then need to get the AirSonos software:

sudo npm install airsonos -g

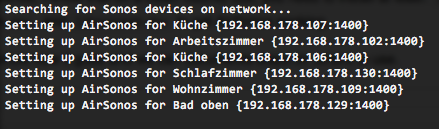

After some minutes of wait time and hard work by the Pi you will be able to start AirSonos.

sudo airsonos

And it’ll come up with an enumeration of all active rooms.

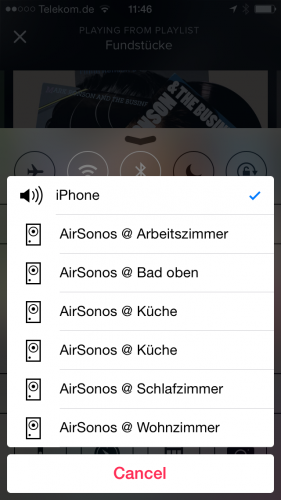

And on all your devices it’ll show up like this:

Need to do Load Tests? Try Tsung!

Tsung is an open-source multi-protocol distributed load testing tool.

It can be used to stress HTTP, WebDAV, SOAP, PostgreSQL, MySQL, LDAP and Jabber/XMPP servers. Tsung is a free software released under the GPLv2 license.

The purpose of Tsung is to simulate users in order to test the scalability and performance of IP based client/server applications. You can use it to do load and stress testing of your servers. Many protocols have been implemented and tested, and it can be easily extended.

It can be distributed on several client machines and is able to simulate hundreds of thousands of virtual users concurrently (or even millions if you have enough hardware …).
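To make the "simulate users" idea a bit more tangible, here is a tiny Python sketch. It is not Tsung (Tsung is written in Erlang and configured through XML), just the same principle on a toy scale: a handful of threads act as virtual users and hammer a throwaway local HTTP server while latencies are collected. All names (`OkHandler`, `run_load_test`, `demo`) are made up for this illustration.

```python
import threading
import time
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class OkHandler(BaseHTTPRequestHandler):
    """Throwaway target server: answers every GET with a short body."""

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


def run_load_test(url, users, requests_per_user):
    """Run `users` concurrent virtual users; return all request latencies."""
    latencies = []
    lock = threading.Lock()
    # Empty ProxyHandler: ignore proxy environment variables for local tests.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))

    def virtual_user():
        for _ in range(requests_per_user):
            start = time.perf_counter()
            with opener.open(url, timeout=5) as response:
                response.read()
            elapsed = time.perf_counter() - start
            with lock:
                latencies.append(elapsed)

    threads = [threading.Thread(target=virtual_user) for _ in range(users)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return latencies


def demo(users=5, requests_per_user=4):
    """Start the local server on a free port, load it, shut it down."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), OkHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = "http://127.0.0.1:%d/" % server.server_address[1]
        return run_load_test(url, users, requests_per_user)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    results = demo()
    print("requests:", len(results), "slowest (s):", max(results))
```

A real tool like Tsung adds what this sketch lacks: distribution over several client machines, many protocols, and far more virtual users than a few threads can provide.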

Source 1: http://tsung.erlang-projects.org/

MOSH (Mobile Shell) – fixing SSH for everyone

How many times did you lose the connection in your terminal window in the last week? Yeah, I know: every time you close the lid of your notebook and move to a different place. So like a dozen times every day.

And every time, you reconnect to your servers, and you use things like screen to keep your terminals open and your programs running while you’re disconnected.

On the other hand, did you ever curse the internet gods while you tried to do a very important check or bugfix on a machine from a train or a roaming mobile network? It’s not what I would call fun times. Even when there are no constant disconnects, the lag is just infuriating. MOSH solves this as well, since it predicts and responds way faster than vanilla SSH. Your terminal becomes usable again!

So there’s now MOSH to the rescue:

Remote terminal application that allows roaming, supports intermittent connectivity, and provides intelligent local echo and line editing of user keystrokes.

Mosh is a replacement for SSH. It’s more robust and responsive, especially over Wi-Fi, cellular, and long-distance links.

Mosh is free software, available for GNU/Linux, FreeBSD, Solaris, Mac OS X, and Android.

Video: http://www.youtube.com/watch?v=XsIxNYl0oyU

Install it on your servers and your clients and never lose a connection again.

Source 1: http://www.gnu.org/software/screen/

Source 2: http://mosh.mit.edu

TIME_WAIT – how does it work?

The purpose of TIME-WAIT is to prevent delayed packets from one connection being accepted by a later connection. Concurrent connections are isolated by other mechanisms, primarily by addresses, ports, and sequence numbers.
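As a rough mental model, you can think of TIME-WAIT as a table of recently closed connections, keyed by the address/port 4-tuple, where each entry blocks reuse for twice the maximum segment lifetime (2 × MSL). The Python sketch below is a simplified simulation of that idea, not how any real TCP stack is implemented. The names `FourTuple` and `TimeWaitTable` and the MSL value of 60 seconds are assumptions for illustration only.

```python
from dataclasses import dataclass, field

# Assumed maximum segment lifetime; real stacks use their own values.
MSL_SECONDS = 60.0


@dataclass(frozen=True)
class FourTuple:
    """Identifies one TCP connection: both addresses and both ports."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


@dataclass
class TimeWaitTable:
    """Toy model of the TIME-WAIT bookkeeping on the side that closes first."""
    expires_at: dict = field(default_factory=dict)

    def close(self, conn: FourTuple, now: float) -> None:
        # Park the 4-tuple for 2 * MSL so stragglers of the old
        # connection can die out before the tuple is used again.
        self.expires_at[conn] = now + 2 * MSL_SECONDS

    def may_reuse(self, conn: FourTuple, now: float) -> bool:
        # Unknown tuples were never parked, so they are free to use.
        return now >= self.expires_at.get(conn, 0.0)


if __name__ == "__main__":
    table = TimeWaitTable()
    old = FourTuple("192.0.2.10", 50000, "192.0.2.20", 80)
    table.close(old, now=0.0)
    print("reuse after 10 s:", table.may_reuse(old, now=10.0))
    print("reuse after 2*MSL:", table.may_reuse(old, now=2 * MSL_SECONDS))
```

A connection with a different port is a different 4-tuple and is never blocked here, which mirrors the point above that concurrent connections are kept apart by addresses and ports rather than by TIME-WAIT.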

Source: http://www.isi.edu/touch/pubs/infocomm99/infocomm99-web/

IPv6 native root server has problems with OpenFire Jabber / XMPP Server to Server

I was setting up a new root-server machine and went for the Debian 7 minimal set-up. Thankfully the root-server provider I am using (Hetzner) is connected with IPv4 and IPv6 natively. Awesome stuff!

If you’re using an IPv6 native set-up these days you STILL have to be cautious about possible side-effects from buggy software that does not know how to deal with these ginormous IP addresses.

So there’s a well known Jabber / XMPP server that I have been using for some years now without any issues. I was even using it on native IPv6 connected machines earlier.

But with the fresh and clean set-up of Debian 7 and IPv6 by the hoster several problems started bubbling up.

1: the ‘there can only be one ipv*’ problem

Turns out that the Debian team decided to set a system setting by default that lets IPv6 aware applications bind to IPv6 only. The good thing: you can disable it by adding this to your sysctl.conf:

net.ipv6.bindv6only=0
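The sysctl only sets the system-wide default. An application can also make that choice per socket through the IPV6_V6ONLY socket option. Here is a minimal Python sketch of what that looks like, assuming a host where IPv6 is available at all. The function name `make_dual_stack_listener` is made up for illustration and has nothing to do with how OpenFire does it.

```python
import socket


def make_dual_stack_listener(port: int = 0) -> socket.socket:
    """Open an IPv6 TCP listener that also accepts IPv4 clients."""
    sock = socket.socket(socket.AF_INET6, socket.SOCK_STREAM)
    # 0 = also accept IPv4 clients (seen as IPv4-mapped IPv6 addresses).
    # This per-socket option takes precedence over the
    # net.ipv6.bindv6only default.
    sock.setsockopt(socket.IPPROTO_IPV6, socket.IPV6_V6ONLY, 0)
    sock.bind(("::", port))  # port 0 lets the OS pick a free port
    sock.listen()
    return sock


if __name__ == "__main__":
    try:
        listener = make_dual_stack_listener()
    except OSError as err:
        print("IPv6 not usable on this host:", err)
    else:
        print("dual-stack listener on port", listener.getsockname()[1])
        listener.close()
```

Software that sets the option itself does not care about the sysctl. The sysctl matters for everything that silently relies on the default.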

2: the ‘who resolves first is right’ problem

When you get an IPv6 native machine it might have a resolv.conf consisting of IPv4 and IPv6 name servers. And don’t worry: Everything is going to be all right as long as the software you’re planning to use is perfectly capable of dealing with the answers of both types of servers. The IPv4 ones will default to the A records, the IPv6 ones to the AAAA record.

Now there’s OpenFire. A stable and easy to use XMPP / Jabber server implementation. It’s based upon Java and I am running it with Java 7 on my Debian machine.

Unfortunately in the current 3.9.1 version of OpenFire there’s a bug that leads to Server-to-Server XMPP connections not working when they resolve to IPv6. So for example your Google-Talk contacts won’t work at all.

The bug itself is rather stupid: Seems that OpenFire expects an IPv4 address from the DNS lookup and crashes on an IPv6 address.

The solution is as easy as the bug is stupid: Remove the IPv6 defaulting nameservers from your resolv.conf.

# nameserver config

nameserver 2xx.xxx.yyy.99

nameserver 2xx.xxx.yyy.100

nameserver 2xx.xxx.yyy.98

nameserver 8.8.8.8

nameserver 8.8.4.4

#nameserver 2axx:yyy:0:zzzz::add:9898

#nameserver 2axx:yyy:0:zzzz::add:9999

#nameserver 2axx:yyy:0:zzzz::add:1010
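If you would rather not edit the file by hand, the same change can be scripted. The following Python sketch comments out every nameserver line that carries an IPv6 address and leaves everything else alone. It works on the file content as a string, so reading and writing /etc/resolv.conf (and keeping a backup) is left to you. The function name `disable_ipv6_nameservers` and the example addresses from the documentation ranges are my own choices.

```python
import ipaddress


def disable_ipv6_nameservers(resolv_conf: str) -> str:
    """Comment out every `nameserver` line whose address is IPv6."""
    result = []
    for line in resolv_conf.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "nameserver":
            # Drop a possible zone id such as "%eth0" before parsing.
            candidate = parts[1].split("%")[0]
            try:
                address = ipaddress.ip_address(candidate)
            except ValueError:
                address = None  # not a valid address, leave line as-is
            if address is not None and address.version == 6:
                line = "#" + line
        result.append(line)
    return "\n".join(result) + "\n"


if __name__ == "__main__":
    sample = (
        "# nameserver config\n"
        "nameserver 192.0.2.53\n"
        "nameserver 2001:db8::53\n"
    )
    print(disable_ipv6_nameservers(sample), end="")
```

Lines that are already commented out are not touched, so running it twice does no harm.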

Source 1: defaulting to net.ipv6.bindv6only=1

Source 2: http://community.igniterealtime.org/thread/51902

weave your net of things that have internet…ehm – internet of things

“The internet of things” is a buzzword used more and more. It means that things around you are connected to the (inter)network and therefore can talk to each other and, when combined, offer fantastic new opportunities.

Yeah right.

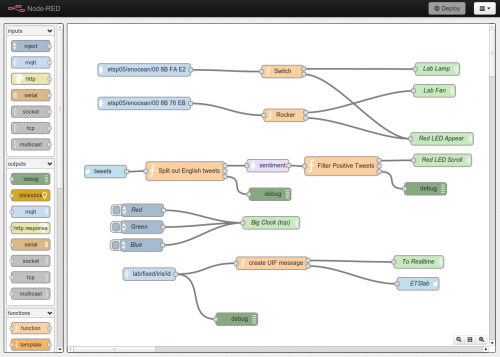

So NodeRed is a NodeJS based toolset that allows you to create so-called “flows” (see picture above). Those flows determine what reacts and what happens when things happen. Fantastic, told you!

Source 1: http://nodered.org/

Source 2: http://en.wikipedia.org/wiki/Internet_of_Things

Source 3: http://nodejs.org/