English version

I am considering developing a mobile app that lets users discover and collect Japanese cultural stamps such as 駅スタンプ (eki stamps), 御朱印 (goshuin), and 鉄印 (tetsuin), and share their collections. The plan is to build on OpenStreetMap data and let users contribute new stamp locations to the OSM database directly from the app. I have written a “vision-readme” document that outlines the first version of the app, covering its features, design considerations, and target audience.

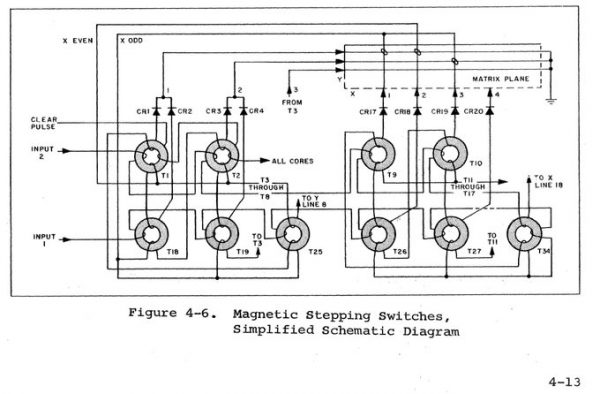

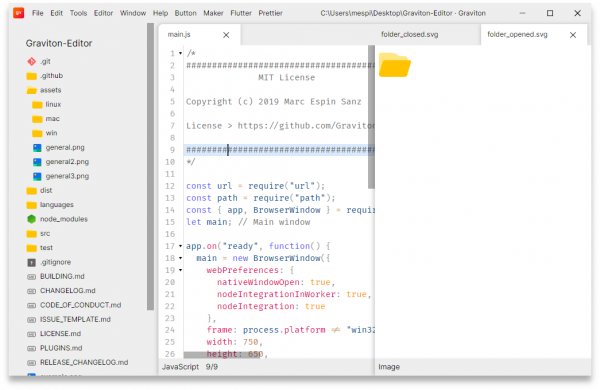

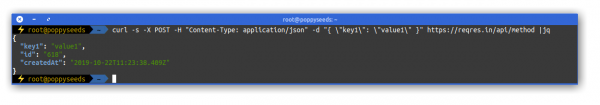

I am looking for support because I have no experience adding structured data to OSM. My experience with OSM so far is on the consuming side: I host my own Overpass server with the full global dataset, which backs two iOS apps I developed, (1) miataru and (2) Toilets around me.
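Since the plan builds on OSM data served through Overpass, a first step could be fetching candidate venues around the user. The sketch below only builds an Overpass QL query string for railway stations and Shinto or Buddhist places of worship near a point. The function name and its defaults are made up for illustration, and the query finds candidate venues only: it says nothing about whether a venue actually offers a stamp, which is exactly the tagging question I need help with.

```python
def build_overpass_query(lat: float, lon: float, radius_m: int = 1000) -> str:
    """Return an Overpass QL query for stations, shrines and temples near a point.

    Hypothetical helper for this post; only established OSM tags are used
    (railway=station, amenity=place_of_worship, religion=shinto/buddhist).
    """
    # "around" keeps only elements within radius_m metres of (lat, lon).
    around = f"(around:{radius_m},{lat:.6f},{lon:.6f})"
    return "\n".join([
        "[out:json][timeout:25];",
        "(",
        f'  nwr["railway"="station"]{around};',
        f'  nwr["amenity"="place_of_worship"]["religion"~"^(shinto|buddhist)$"]{around};',
        ");",
        # "center" adds one representative coordinate for ways and relations.
        "out center;",
    ])


if __name__ == "__main__":
    # Example point in central Tokyo, 500 m search radius.
    print(build_overpass_query(35.681236, 139.767125, 500))
```

The resulting string can be sent to the `interpreter` endpoint of an Overpass API instance, which returns the matching elements as JSON.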

I am in the research and conceptualization phase and am looking for collaborators interested in contributing to the concept, implementation, and operation of this project.

You can find more details on the vision and concept here:

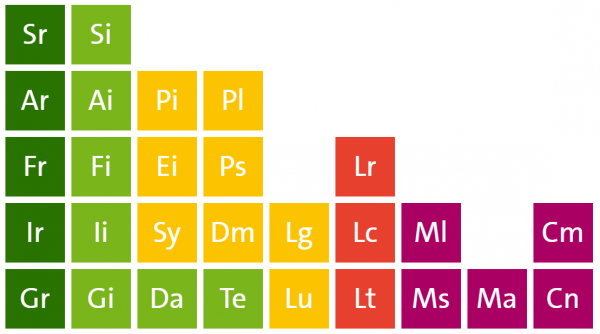

Overview

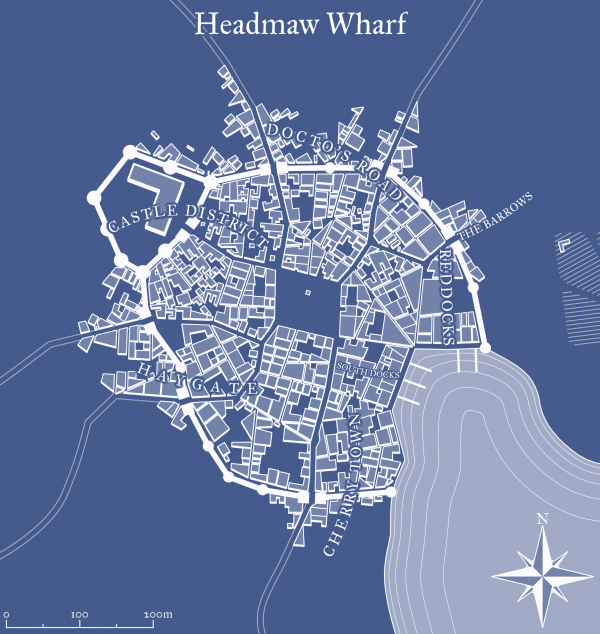

EkiStamp Quest is an engaging mobile application designed for travelers in Japan. It’s a perfect companion for those who enjoy collecting unique Eki Stamps from train stations and tourist spots across the country. The app also supports the collection of Goshuin and Tetsuin, catering to a wide range of cultural enthusiasts.

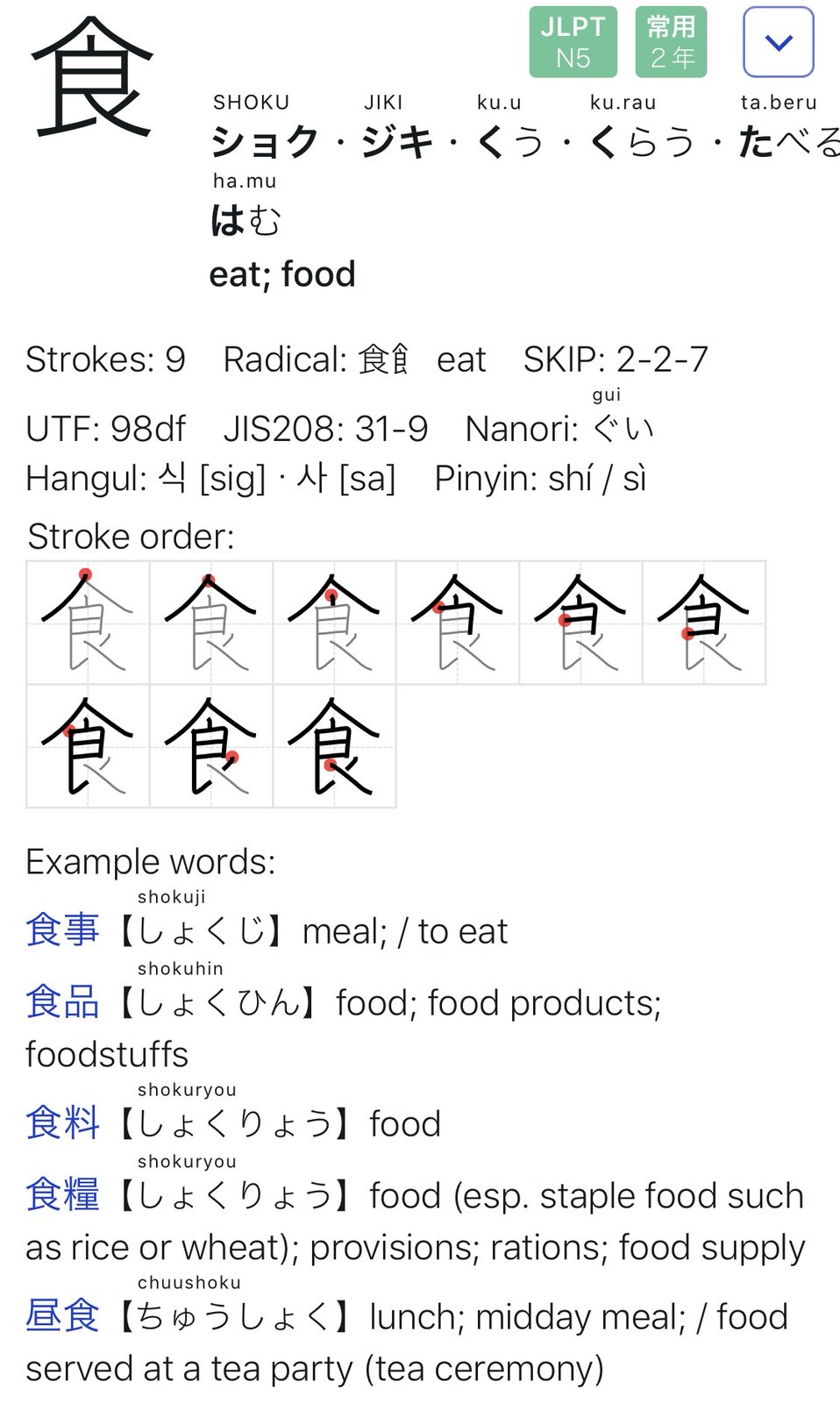

Goshuin are traditional seals collected at temples and shrines, symbolizing a visit and prayer.

Tetsuin are the railway counterpart of goshuin: seals issued by regional railway operators, often celebrating historic or scenic railway lines.

EkiStamp Quest offers a fun and interactive way to explore and appreciate Japan’s cultural landmarks, including temples, shrines, and railway stations.

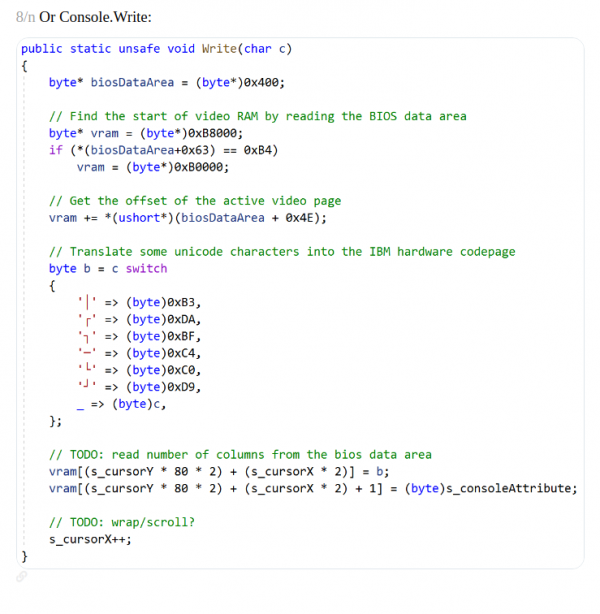

Features

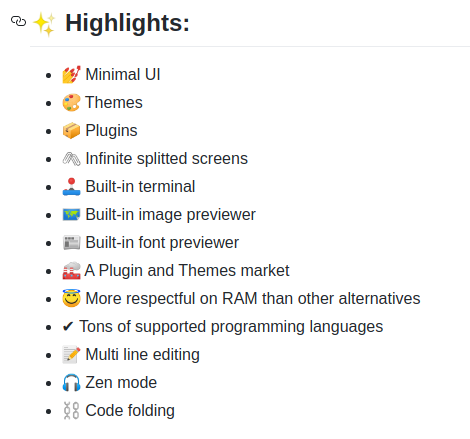

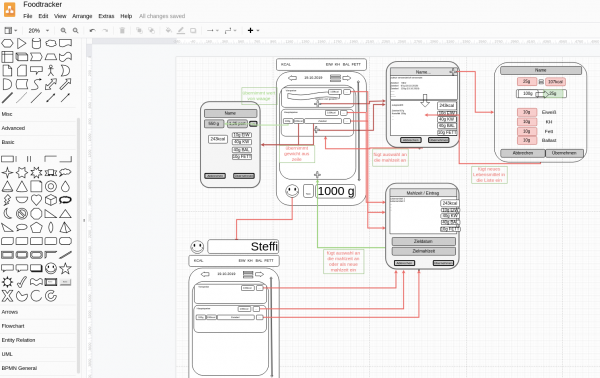

- Stamp Locator: Use your location to discover nearby tourist spots, train stations, temples, and shrines with Eki Stamps, Goshuin, and Tetsuin.

- Interactive Map: Navigate through different regions and find locations offering these cultural stamps and seals.

- Collection Tracker: Keep track of the stamps and seals you’ve collected and the locations you’ve visited.

- Stamp and Seal Information: Access detailed information about each stamp and seal, including their design, station history, and cultural insights.

- Community Sharing: Share your collection with others and explore collections from various users.

- Rewards and Challenges: Engage in challenges such as stamp rallies and historic railway journeys to collect special stamps and earn rewards.

- In-App Cropping Tool: Crop stamp images into cut-out versions to save and personalize your collection.

- Customizable Collection Books: Choose from various designs to display your stamp collection in a style that suits you.

- Social Media Integration: Easily share your stamps, overlaid on personal photos, on social networking sites.

- Stamp Rally Participation: Join stamp rallies organized by different locations or operators, adding an exciting dimension to your collection experience.
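As a rough illustration of the Stamp Locator idea, the sketch below filters a list of known stamp spots by great-circle distance from the user and returns them nearest first. The `StampSpot` type, the `kind` labels, and the sample coordinates are all invented for this example; in the real app the spots would come from OSM data.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


@dataclass(frozen=True)
class StampSpot:
    """Hypothetical record for one place that offers a stamp or seal."""
    name: str
    kind: str  # "eki_stamp", "goshuin" or "tetsuin" (labels made up here)
    lat: float
    lon: float


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two WGS84 coordinates."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearby(spots, lat, lon, radius_m):
    """Return (distance_m, spot) pairs within radius_m, nearest first."""
    hits = [(haversine_m(lat, lon, s.lat, s.lon), s) for s in spots]
    return sorted((h for h in hits if h[0] <= radius_m), key=lambda h: h[0])


if __name__ == "__main__":
    # Made-up sample data: two spots in central Tokyo, one in Kyoto.
    spots = [
        StampSpot("Sample shrine B", "goshuin", 35.69169, 139.77088),
        StampSpot("Sample station A", "eki_stamp", 35.681236, 139.767125),
        StampSpot("Sample station C", "tetsuin", 34.985849, 135.758767),
    ]
    for dist, spot in nearby(spots, 35.681236, 139.767125, 2000):
        print(f"{spot.name} ({spot.kind}): {dist:.0f} m")
```

For a few thousand spots a linear scan like this is fine on a phone; a spatial index would only matter with much larger datasets.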

EkiStamp Quest enriches the cultural experience of its users, enabling them to delve into and appreciate the diverse aspects of Japanese heritage through the collection of unique stamps and seals from various locations. This app transforms the traditional hobby of stamp collecting into an interactive and memorable journey through Japan’s rich cultural landscape.
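The Collection Tracker and stamp rally features could rest on a very small data model. The sketch below is one possible shape, not a settled design: the class names, the `spot_id` key, and the rule of one entry per spot are assumptions made for this example (a real app might well allow repeat visits).

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class CollectedStamp:
    """Hypothetical record of one collected stamp or seal."""
    spot_id: str       # identifier of the place, e.g. derived from an OSM element
    kind: str          # "eki_stamp", "goshuin" or "tetsuin" (labels made up here)
    collected_on: date


@dataclass
class Collection:
    """A user's collection, keyed by spot so each spot counts once."""
    entries: dict = field(default_factory=dict)

    def add(self, stamp: CollectedStamp) -> bool:
        """Record a stamp; return False if that spot was already collected."""
        if stamp.spot_id in self.entries:
            return False
        self.entries[stamp.spot_id] = stamp
        return True

    def count_by_kind(self) -> dict:
        """Number of collected stamps per kind."""
        counts = {}
        for stamp in self.entries.values():
            counts[stamp.kind] = counts.get(stamp.kind, 0) + 1
        return counts

    def progress(self, rally_spot_ids) -> float:
        """Fraction of a stamp rally's spots that are already collected."""
        wanted = set(rally_spot_ids)
        if not wanted:
            return 0.0
        return len(wanted.intersection(self.entries)) / len(wanted)


if __name__ == "__main__":
    book = Collection()
    book.add(CollectedStamp("spot-a", "eki_stamp", date(2024, 4, 1)))
    book.add(CollectedStamp("spot-b", "goshuin", date(2024, 4, 2)))
    print(book.count_by_kind())
    print(book.progress(["spot-a", "spot-b", "spot-c", "spot-d"]))
```

Keying by spot keeps rally progress a simple set intersection; sharing a collection would then mostly be a matter of serializing `entries`.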

Contact

To ask a question or offer help, please contact me through the comment function of this blog or by email: bietiekay -at- gmail.com

Japanese version

私は日本語のネイティブスピーカーではなく、翻訳ツールを使っています。

こんにちは、現在私は、駅スタンプ、御朱印、鉄印などの様々な日本の文化的なスタンプを発見し、収集できるモバイルアプリケーションの開発を検討しています。さらに、このアプリではユーザーが自分のコレクションを共有できるようになります。私の計画は、OpenStreetMapのデータを利用し、ユーザーがアプリから直接OSMデータベースに新しいスタンプの場所を追加できる機能を提供することです。私は、アプリケーションの初期バージョンを概説する包括的な「ビジョンリードミー」ドキュメントを用意しました。これには、機能、デザインの考慮事項、対象オーディエンスなど、さまざまな側面が詳述されています。

私は現在、OSMに構造化データを追加する専門知識が不足しているため、サポートを求めています。私のOSMデータとアプリ開発の経験には、全世界のデータセットを持つ自分自身のOverpassサーバーをホストすることが含まれます。このサーバーは、私が開発した2つのiOSモバイルアプリケーションをサポートしています:(1) miataru および (2) Toilets around me。

私は現在、研究および概念化の段階にあり、このプロジェクトのコンセプト、実装、運用に貢献したいと思っているコラボレーターを探しています。

ビジョンとコンセプトの詳細はこちらでご覧いただけます:

概要

EkiStamp Questは、日本を旅する人々向けの魅力的なモバイルアプリです。全国の鉄道駅や観光地で集められるユニークな駅スタンプを愛する人に最適。このアプリは御朱印や鉄印の収集もサポートし、幅広い文化愛好家を対象としています。

御朱印は、寺社で受ける証しの印章で、訪問と祈りを表します。 鉄印は、鉄道駅固有のスタンプで、特に歴史的または景観の良い鉄道路線を記念しています。EkiStamp Questは、日本の寺院、神社、鉄道駅などの文化的名所を探索し、楽しむためのインタラクティブな方法を提供します。

機能

- スタンプ探索: 現在地を利用して、近くの観光地や鉄道駅で駅スタンプ、御朱印、鉄印を見つけます。

- インタラクティブマップ: 地図上で様々な地域をナビゲートし、これらの文化的スタンプや印章がある場所を探します。

- コレクション追跡: 収集したスタンプや印章、訪れた場所を記録します。

- スタンプ・印章情報: 各スタンプや印章の詳細な情報、デザイン、駅の歴史、文化的背景などを提供します。

- コミュニティ共有: 自分のコレクションを共有し、他のユーザーのコレクションを見ることができます。

- 報酬とチャレンジ: スタンプラリーや歴史的鉄道旅行などのチャレンジに参加し、特別なスタンプを獲得し、報酬を得ます。

- クロッピングツール: アプリ内のクロッピングツールを使って、スタンプコレクションを保存し、カスタマイズします。

- カスタマイズ可能なコレクションブック: 自分の好みに合わせた様々なデザインの中からコレクションブックを選べます。

- SNS統合: スタンプを個人の写真に重ねて、SNSで簡単に共有できます。

- スタンプラリー参加: 様々な場所や運営者が主催するスタンプラリーに参加することができます。

EkiStamp Questは、日本の豊かな文化を通じて、ユニークなスタンプや印章を集めることでユーザーの体験を深めます。このアプリは、伝統的なスタンプ収集を、日本の文化的景観を巡るインタラクティブで記憶に残る旅に変えます。

連絡先

ご質問やお手伝いの申し出は、このブログのコメント機能または電子メールでご連絡ください:bietiekay -at- gmail.com