As iOS 13 has introduced a system wide dark mode into my workflow I had a good reason to give the CSS of this website a little spin.

Depending on your system settings this website now supports Dark Mode.

As iOS 13 has introduced a system wide dark mode into my workflow I had a good reason to give the CSS of this website a little spin.

Depending on your system settings this website now supports Dark Mode.

Booting a computer does not happen extremely often in most use-cases, yet it’s a field that has not seen as much optimization and development as others had.

Find a very interesting presentation on the topic: How to make Linux boot faster here. The presentation was held at the Linux Plumbers Conference 2019.

You might have asked yourself how it is that some phones charge up faster than others. Maybe the same phone charges at different speed when you’re using a different cable or power supply. It even might not charge at all.

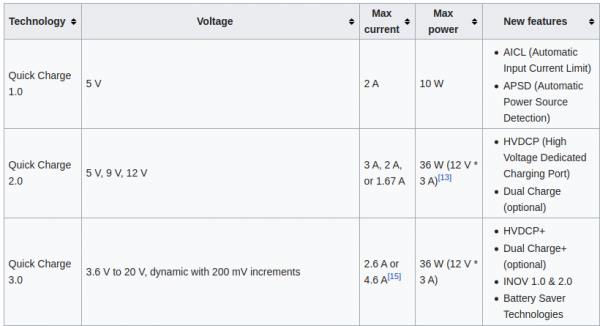

There is some very complicated trickery in place to make those cables and power supplies do things in combination with the active devices like phones. Many of this is implemented by standards like “Quick Charge”:

Quick Charge is a technology found in QualcommSoCs, used in devices such as mobile phones, for managing power delivered over USB. It offers more power and thus charges batteries in devices faster than standard USB rates allow. Quick Charge 2 onwards technology is primarily used for wall adaptors, but it is also implemented in car chargers and powerbanks (For both input and output power delivery).

Wikipedia: Quick Charge

So in a nutshell: If you are able to speak the quick charge protocol, and with the right cable and power supply, you are able to get anything between 3.6 and 20V out of such a combination by just telling the power supply to do so.

This is great for maker projects in need of more power. There’s lots of things to consider and be cautious about.

“Speaking” the protocol just got easier though. You can take this open source library and “power up your project”:

The above mentioned usage-code will give you 12V output from the power supply. Of course you can also do…:

Be aware that your project needs to be aware of the (higher) voltage. It’s really not something you should just try. But you knew that.

More on Quick Charge also here.

Browsers can do many things. It’s probably your main window into the vast internet. Lots of things need visualization. And if you want to know how it’s done, maybe do one yourself, then…

D3.js is a JavaScript library for manipulating documents based on data. D3 helps you bring data to life using HTML, SVG, and CSS. D3’s emphasis on web standards gives you the full capabilities of modern browsers without tying yourself to a proprietary framework, combining powerful visualization components and a data-driven approach to DOM manipulation.

D3.js

And to further learn what it’s all about, go to Amelia Wattenbergers blog and take a stroll:

So, you want to create amazing data visualizations on the web and you keep hearing about D3.js. But what is D3.js, and how can you learn it? Let’s start with the question: What is D3?

An Introduction to D3.js

While it might seem like D3.js is an all-encompassing framework, it’s really just a collection of small modules. Here are all of the modules: each is visualized as a circle – larger circles are modules with larger file sizes.

In Nodes you write programs by connecting “blocks” of code. Each node – as we refer to them – is a self contained piece of functionality like loading a file, rendering a 3D geometry or tracking the position of the mouse. The source code can be as big or as tiny as you like. We’ve seen some of ours ranging from 5 lines of code to the thousands. Conceptual/functional separation is usually more important.

Nodes.io

Nodes* is a JavaScript-based 2D canvas for computational thinking. It’s powered by the npm ecosystem and lives on the web. We take inspiration from popular node-based tools but strive to bring the visual interface and textual code closer together while also encouraging patterns that aid the programmer in the prototype and exploratory stage of their process.

*(not to be confused with node.js)

The amount of computing power available in todays hardware is reaching levels where raytracing and high detail physics simulations are in reach.

Dennis Gustafsson shared on his Twitter and blog some insights and videos of his experiments and implementations. Be astonished:

I am running most of my in-house infrastructure based on Docker these days…

Docker is a set of platform-as-a-service (PaaS) products that use operating-system-level virtualization to deliver software in packages called containers. Containers are isolated from one another and bundle their own software, libraries and configuration files; they can communicate with each other through well-defined channels.

All containers are run by a single operating-system kernel and are thus more lightweight than virtual machines.

Wikipedia: Docker

And given the above definition it’s fairly easy to create and run containers of things like command-line tools and background servers/services. But due to the nature of Docker being “terminal only” by default it’s quite hard to do anything UI related.

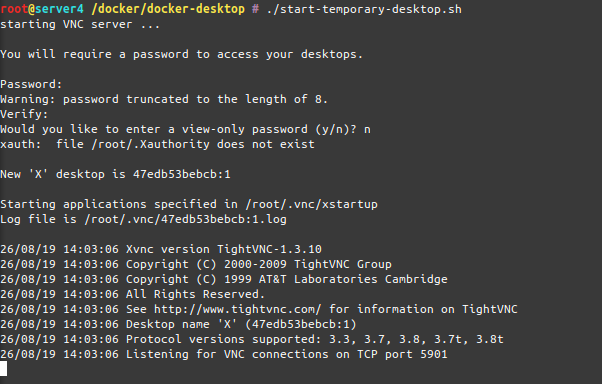

But there is a way. By using the VNC protocol to get access to the graphical user interface we can set-up a container running a fully-fledge Linux Desktop and we can connect directly to this container.

I am using something I call “throw-away linux desktop containers” all day every day for various needs and uses. Everytime I start such a container this container is brand-new and ready to be used.

Actually when I start it the process looks like this:

As you can see when the container starts-up it asks for a password to be set. This is the password needed to be entered when the VNC client connects to the container.

And when you are connected, this is what you get:

I am sharing my scripts and Dockerfile with you so you can use it yourself. If you put a bit more time into it you can even customize it to your specific needs. At this point it’s based on Ubuntu 18.04 and starts-up a ubuntu-mate desktop environment in it’s default configuration.

When you log into the container it will log you in as root – but effectively you won’t be able to really screw around with the host machine as the container is still isolating you from the host. Nevertheless be aware that the container has some quirks and is run in extended privileges mode.

Chromium will be pre-installed as a browser but you will find that it won’t start up. That’s because Chromium won’t start up if you attempt a start as root user.

The workaround:

Now get the scripts and container here and build it yourself!

When you are using or developing NodeJS applications and the Node Package manager (npm) over time a lot of old crusty libraries will accrue.

A lot means, a lot:

To have a chance to get on top of things and save space, try this:

npm i -g npkill

By then using npkill you will get an overview (after a looong scan) of how much disk space there is to be saved.

Great tip!

So I leave this right here:

OS.js is an open-source JavaScript Web Desktop implementation for your browser with a fully-fledged window manager, Application APIs, GUI toolkits and filesystem abstraction.

It really does implement a lot of what an operating system UI and portions of the backends are supposed to be. It looks quite funky and there are applications to this. Of course it’s open source

I want all electron Apps to start existing there so I can call all of them with just a browser from anywhere.

If you want to give it a spin, click here:

The CCCamp 2019 has just started and you can join the live streaming anytime.

As people around me discuss what to go for in regards to manage their growing number of private GIT repositories I joined their discussion.

A couple of years ago I assessed how I would want to store my collection of almost 100 GIT private repositories and all those cloned mirrors I want to keep for archival and sentimental reasons.

An option was to pay for GitHub. Another option, which most seemed to prefer, was going for a local Gitlab set-up.

All seemed not desirable. Like chaining my workflows to GitHub as a provider or adopting a new hobby to operate and maintain a private GitLab server. And as it might have become easier to operate a GitLab server with the introduction of container management systems. But I’ve always seemed to have to update to a new version when I actually wanted to use it.

So this was when I had to make the call for my own set-up about 4 years ago. We were using a rather well working GitLab set-up for work back then. But it all seemed overkill to me also back then.

So I found: gogs.io

It runs with one command, the only dependency is two file system directories with (a) the settings of gogs and (b) your repositories.

It’ll deploy as literally a SINGLE BINARY without any other things to consider. With the provided dockerfile you are up and running in seconds.

It has never let me down. It’s running and providing it’s service. And that’s the end of it.

I am using it, as said, for 95 private repositories and a lot of additionally mirrored GIT repositories. Gogs will support you by keeping those mirrors in sync for you in the background. It’s even multi-user multi-organization.

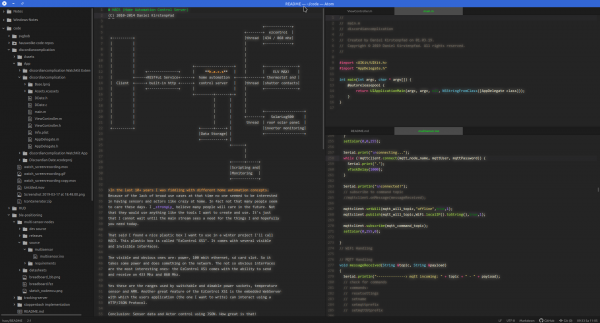

When you are writing code the patterns seem to repeat every once in a while. Not only the patterns but also the occasion you are going to apply certain code styles and methods while developing.

To support a developer with this creative work the tedious and repetitious tasks of typing out what is thought can be supported by machine learning.

Chances are your favourite IDE already supports an somehow AI driven code autocomplete feature. And if it does not, read on as there are ways to integrate products like TabNine into any editor you can think of…

Visual Studio IntelliCode is a set of AI-assisted capabilities that improve developer productivity with features like contextual IntelliSense, argument completion, code formatting, and style rule inference.

IntelliCode augments existing developer workflows with machine-learning services that provide an understanding of code and its context. It’s applicable for C#, C++ (in preview), JavaScript/TypeScript (in preview), and XAML code today, and will be updated in the future to support more languages.

Visual Studio IntelliCode

Of course there are some new contenders to the scene, like TabNine:

TL;DR: TabNine is an autocompleter that helps you write code faster. We’re adding a deep learning model which significantly improves suggestion quality. You can see videos below and you can sign up for it here.

TabNine

Deep TabNine requires a lot of computing power: running the model on a laptop would not deliver the low latency that TabNine’s users have come to expect. So we are offering a service that will allow you to use TabNine’s servers for GPU-accelerated autocompletion. It’s called TabNine Cloud, …

TabNine

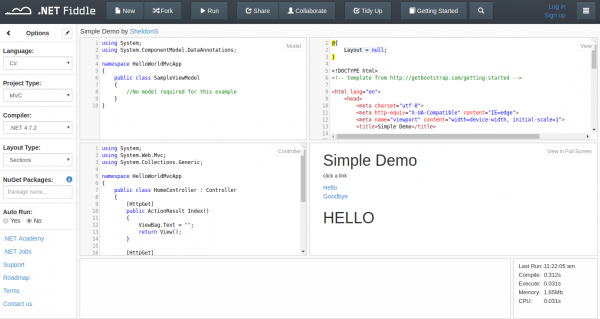

There are a lot of different “fiddles”. There’s JSFiddle, SQLFiddle, HTMLFiddle, RegExFiddle, PythonFiddle, R-Fiddle, GoFiddle. There is even a page that curates a list of fiddles.

For .NET there’s one too. It supports C#, F# and VB.NET. For trying something quickly or sharing it online this is a nice way to do it:

As I am mainly producing text and markdown notes throughout the day I am always interested in ways to quickly create simple text-based flow-charts.

I did write about a couple of tools to accomplish this previously but I want to take note of the most recent addition to the toolbox: ASCIIFlow Infinity.

You open it in your browser and start drawing with the simple tools provided.

When you are done you export it to plain text and do what you feel like with it.

Here’s a feature overview:

The Chaos Communication Camp is an international, five-day open-air event for hackers and associated life-forms. It provides a relaxed atmosphere for free exchange of technical, social, and political ideas. The Camp has everything you need: power, internet, food and fun. Bring your tent and participate!

CCCamp 2019 Wiki

It has been 2005 that I had the time and chance to attend an international open-air meeting of normal people. Of course I am talking about the 2005 What-the-hack I wrote about back then.

This year it’s time again for the Chaos Communication Camp in Germany. Sadly still I won’t be attending. Clearly that needs to change with one of the next iterations. With the CCC events becoming highly valuable also for families maybe it’s a chance in the future to meet up with old and valued friends (wink-wink Andreas Heil).

The Chaos Communication Camp (also known as CCCamp) is an international meeting of hackers that takes place every four years, organized by the Chaos Computer Club (CCC). So far all CCCamps have been held near Berlin, Germany.

The camp is an event for providing information about technical and societal issues, such as privacy, freedom of information and data security. Hosted speeches are held in big tents and conducted in English as well as German. Each participant may pitch a tent and connect to a fast internet connection and power.

Enjoy the intro-movie that has just been made available to us, alongside the whole design material:

How to build security into your software? It’s always simple to find examples where things gone wrong. Where security was compromised and things did not work out as the software authors envisioned.

As always there are new concepts and operating systems being implemented.

A particularly interesting example of security software design can be observed here:

Fuchsia is an open source capability-based operating system currently being developed by Google.

In contrast to prior Google-developed operating systems such as Chrome OS and Android, which are based on the Linux kernel, Fuchsia is based on a new microkernel called Zircon. The name Zircon refers to the mineral of the same name.

Google Fuchsia

So you now know what Fuchsia is. Now on to the actual example. For this we have to take a look into the developer documentation of Zircon:

So this describes a method to get random numbers from the systems cryptocraphically-secure-random-number-generator (CPRNG). It takes a pointer to a memory location as a parameter.

Now. What’s secure about that? It’s the behaviour of the method when it is encountering an unsecure situation:

It’ll kill the calling process when the pointer is not valid.

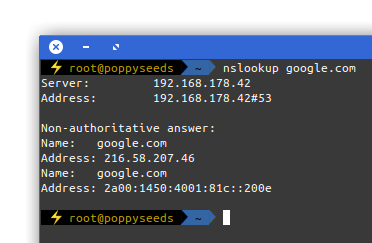

Picture yourself in this situation. You connect to a network and nothing works. Except for this:

It is quite common to have DNS working in networks while everything else is not. Sometimes the network requires a log-in to give you access to a small portion of the internet.

Now, with the help of a tool called iodine, you can get access to the full internet with only DNS working in your current network:

iodine lets you tunnel IPv4 data through a DNS server. This can be usable in different situations where internet access is firewalled, but DNS queries are allowed.

It runs on Linux, Mac OS X, FreeBSD, NetBSD, OpenBSD and Windows and needs a TUN/TAP device. The bandwidth is asymmetrical with limited upstream and up to 1 Mbit/s downstream.

iodine

Setting it up is a bit of work but given that you are anyway having access to a well connected server on the free portion of the internet it can be easily done.

Of course the source is on github.

SpriteStack is a voxel editor suited for 2D artists featuring hand crafted retro renderer with animation support. It exports spritesheets, slices and vox models.

SpriteStack

You can get a grasp at the beautiful side of science with visualizations and algorithms that output visual results.

This is the example of producing lots and lots of complex data (houses!) from a small set of input data. It is widely used in game development but also can be helpful to generate parameterized test and simulation environments for machine learning.

So before sending you over to the more detailed explanation the visual example:

This is a lot of different house images. Those are generated using a program called WaveFunctionCollapse:

WFC initializes output bitmap in a completely unobserved state, where each pixel value is in superposition of colors of the input bitmap (so if the input was black & white then the unobserved states are shown in different shades of grey). The coefficients in these superpositions are real numbers, not complex numbers, so it doesn’t do the actual quantum mechanics, but it was inspired by QM. Then the program goes into the observation-propagation cycle:

On each observation step an NxN region is chosen among the unobserved which has the lowest Shannon entropy. This region’s state then collapses into a definite state according to its coefficients and the distribution of NxN patterns in the input.

On each propagation step new information gained from the collapse on the previous step propagates through the output.

On each step the overall entropy decreases and in the end we have a completely observed state, the wave function has collapsed.

It may happen that during propagation all the coefficients for a certain pixel become zero. That means that the algorithm has run into a contradiction and can not continue. The problem of determining whether a certain bitmap allows other nontrivial bitmaps satisfying condition (C1) is NP-hard, so it’s impossible to create a fast solution that always finishes. In practice, however, the algorithm runs into contradictions surprisingly rarely.

Wave Function Collapse algorithm has been implemented in C++, Python, Kotlin, Rust, Julia, Go, Haxe, JavaScript and adapted to Unity. You can download official executables from itch.io or run it in the browser. WFC generates levels in Bad North, Caves of Qud, several smaller games and many prototypes. It led to new research. For more related work, explanations, interactive demos, guides, tutorials and examples see the ports, forks and spinoffs section.

This project is a way for people to use CSS Grid features quickly to create dynamic layouts.

You can set the numbers, and units of your columns and rows, and I’ll generate a CSS grid for you! Drag within the boxes to create divs placed within the grid.

I noticed a lot of people weren’t using Grid because they felt it was too complicated and they couldn’t understand it. Grid is capable of so much, and this small generator only touches on a fraction of the features. The purpose of this is so people get up and running quickly, and create more interesting layouts.

Once you work with this a bit, I suggest checking out resources by Rachel Andrew, Jen Simmons, and Dave Geddes to dive deeper. There is also a CSS Grid Guide on CSS-Tricks, and a fun little game called Grid Garden to help you learn more!

Source

When working on source files with wide-ranging scopes, I wish source editors could pin the declaration lines to the top of the window like section headers, something like this…

Joe Groff on Twitter

This looks like something that would really make a difference when editing code. Let’s see how long until we get something like that in modern editors…

The Jupyter Notebook is an open-source web application that allows you to create and share documents that contain live code, equations, visualizations and narrative text. Uses include: data cleaning and transformation, numerical simulation, statistical modeling, data visualization, machine learning, and much more.

Voila serves live Jupyter notebook including Jupyter interactive widgets.

Unlike the usual HTML-converted notebooks, each user connecting to the Voila tornado application gets a dedicated Jupyter kernel which can execute the callbacks to changes in Jupyter interactive widgets.

https://github.com/QuantStack/voila

Install multi-cursor-plus:

Hate reaching for the mouse?

multi-cursor-plusmulti-cursor-plusallows you to create multiple cursors anywhere in the buffer, using only your keyboard. Supports multiple selections and removing previous cursors at any time. Easy to use. Amazing!

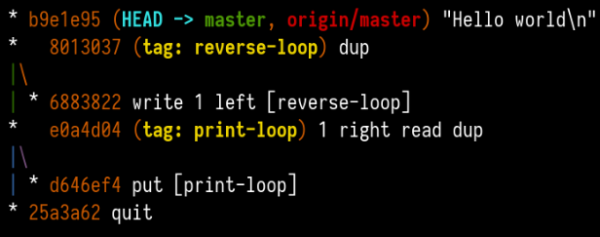

Programs written in legit are defined entirely by the graph of commits in a Git repository. The content of the repository is ignored.

legit is designed so that all relevant information is visible when runninggit log --graph --oneline.

For example, here is “hello world”:

You can find implementations of legit, as well as some example programs, on GitHub: https://github.com/blinry/legit. The entry in the Esolang wiki is at https://esolangs.org/wiki/Legit.

The beginning of the decade saw the continuation of the clothing styles of the late 1970s and evolved into heavy metal fashion by the end. However, it had a lot of changes considering that, this fashion became more and more extravagant during the 80s.

The 80s included things like teased hair, ripped jeans, neon clothing and lots of colours and different designs which at first weren’t accepted for a lot of people.

Popular Culture in the 80s

Do you remember that endless summer back in ’84? Cruising down the ocean-highway with the top down, the wind in our hair and heads buzzing with neon dreams?

Synthwave’84 theme

No, I don’t remember it either, but with this experimental theme we can go there.

About 2 years ago I sat down and wrote a filesystem. Well not from scratch but using the great FUSE (Filesystem in Userspace) framework. I’ve released it as open-source later on Github:

This script acts as glue between a local file storage mount point and RIAK. It is targeted at specific use cases when local mount-points need to be migrated to RIAK without changing the applications accessing those mount point. Think of it as a transparent RIAK filesystem layer with multiple options to control it’s behavior regarding local files.

riakfuse

I had a very specific purpose in mind when I wrote this: There was a local filesystem that got filled up and because of technical restrictions we were not able to resize it or even completely copy it without interrupting the service using the data stored there.

Since we were already using the RIAK Key-Value Database for certain binary loads the idea came up to also utilize this key-value concept for a filesystem.

The idea now is: You have a local filesystem that holds a lot of folders and files already and you want to gradually want to move it to new grounds.

This migration needed to happen with minimal service interruptions assuming that there is constant reading and writing happening.

In this riak-fuse project I’ve written an overlay filesystem that steps between the application and the underlying “old”-filesystem. It looks and behaves identical to the application reading and writing.

But, depending on the mode you have chosen while mounting, every file read will at first be read from the “old”-filesystem and after successfull read stored into the key-value store.

On the next read it will be read from the key-value store directly.

The same applies for writing. Riak-fuse will write either to both, local storage and key-value store or just to the key-value store.

So in a nutshell: Data is slowly but surely on each access transferred over to the key-value store and load + storage space is slowly moving over from the local storage to the key-value store.

To facilitate this there are a lot of options for this script:

This all comes with ready-to-use docker and docker-compose files for you to try out.

Also it might interest you as an extremely simplified example of how to write an actual file system module for FUSE in Python.

Disclaimer: This effectively is my first python script as well as fuse module. Don’t be too hard on your judgement.

Many of us are happy when they can accomplish the most simple tasks with Excel without pulling their own hair out.

And then there are these people who do something entirely different with Excel:

Finding engineering work quite unchallenging lately I decided to start this blog in which to share cool ways of solving engineering problems or just interesting modeling of natural phenomena in MS Excel 2003. I use mainly cell formulas with minimum of VBA in order to take advantage of the ease of “programming” and the native speed of the Excel spreadsheet.

http://www.excelunusual.com/

I’ve found myself in these spots several times in my life. Either I had to deliver on an estimate or I had to acknowledge an estimation and deal with the outcomes.

If you are involved in anything digital / software this is a recommended piece to read:

Anyone who built software for a while knows that estimating how long something is going to take is hard. It’s hard to come up with an unbiased estimate of how long something will take, when fundamentally the work in itself is about solving something. One pet theory I’ve had for a really long time, is that some of this is really just a statistical artifact.

Why software projects take longer than you think

I came across a very nice explanatory piece for QR codes. If you always wanted to know the basic principles this is a good chance to get a grasp.

QR code (abbreviated from Quick Response Code) is the trademark for a type of matrix barcode (or two-dimensional barcode) first designed in 1994 for the automotive industry in Japan. A barcode is a machine-readable optical label that contains information about the item to which it is attached. In practice, QR codes often contain data for a locator, identifier, or tracker that points to a website or application. A QR code uses four standardized encoding modes (numeric, alphanumeric, byte/binary, and kanji) to store data efficiently; extensions may also be used.[1]

Wikipedia

I am using QR codes in several of my projects – one example: Miataru uses QR codes to encode the device ID and help with the device handshake. You scan the QR code of your friend with your Miataru client app and immediately will be able to see his location in Miataru. Without the need to enter long rows of numbers.

Every task you take

my text editor

Every meeting you make

Every keyboard you break

Every note you take

I’ll be storing it for you

Well that was fun! And indeed: a big portion of my professional daily business is taking place in a text editor taking notes and scribbling ideas and thoughts.

I’ve tried many things but the only way that resonated with me was taking notes in Markdown in a text editor that supports markdown. Currently that editor is Atom.io. Mainly because it is not in the way and quite portable. Runs on Linux, Windows, MacOS.

This way – I just took a count – I noted down 364.416 words in the last 1.5 years on my current job (equals to about 46 hours of average speed reading…).

Along side those simple text notes and bullet lists I am doing very simple tables as well as ASCII scribbles in Markdown as well. With the right tools it’s extremely easy and much faster than booting up the Powerpoint or worse.

When you have all in Markdown you then can freely stylesheet away and convert to handy PDF files as well. All even with embedded images if you so desire.

But even if you sit on that treasure trove of Markdown there comes the time when you wish you could convert your scribbles to graphics. Even if it is for the sole reason to not have to draw it again for that fancy Powerpoint slide deck.

You’ve got multiple options to accomplish this:

svgbob is at first a command line tool that got a recent level-up with a proper web-frontend:

When given Markdown it creates graphics. In the picture above the input is on the left and the svgbob output on the right (as SVG).

Markdeep is the alternative. Which of both work for your case depends on that specific case. Knowing and using both properly is the best way.

We know that using swap space instead of RAM (memory) can severely slow down the performance of Linux. So then, one might ask, since I have more than enough memory available, wouldn’t it better to remove swap space completely? The short answer is, No. There are performance benefits when swap is enabled, even when you have more than enough ram.

Why you should almost always add swap space

vfs_cache_pressure – Controls the tendency of the kernel to reclaim the memory which is used for caching of directory and inode objects. (default = 100, recommend value 50 to 200)

swappiness – This control is used to define how aggressive the kernel will swap memory pages. Higher values will increase aggressiveness, lower values decrease the amount of swap. (default = 60, recommended values between 1 and 60) Remove your swap for 0 value, but usually not recommended in most cases.

https://access.redhat.com/solutions/103833

As I’ve now brought up the topic, go ahead and read the complete story at the source.

As Windows lately tends to make an effort to stay out of the way as an operating system and user-experience it seems that it regains more attention by developers.

For me this all is quite strange as I’ve personally would prefer switching from macOS to Linux rather than Windows.

But for those occasions you need to go with Windows. There’s a Terminal application now that gives you, well, a good terminal. Try FluentTerminal.