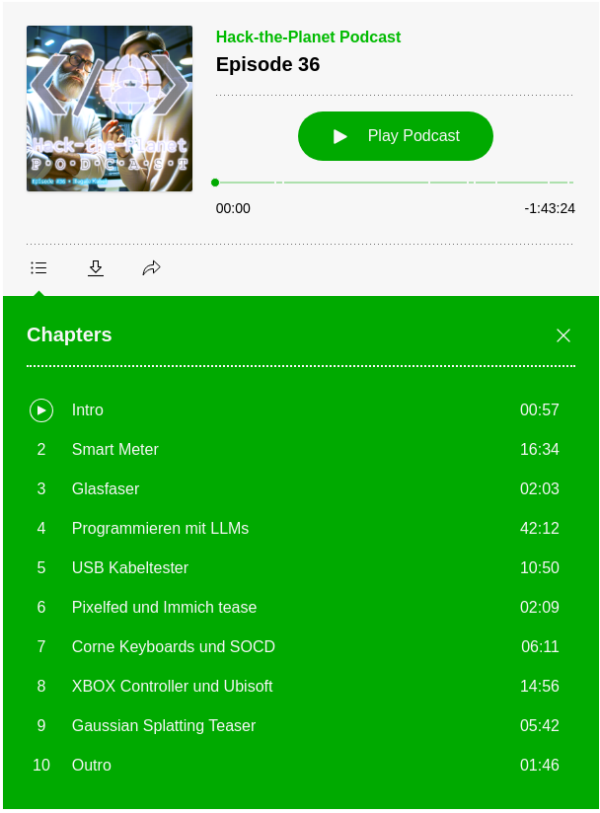

In this episode of the “Hack the Planet” podcast, Daniel and Andreas discuss smart meters, coding with LLMs, graphics (without AI), a USB cable tester, an expensive gaming keyboard, self-programmed features and the Xbox Elite Controller. Andreas reports on his experiences with smart meters and the challenges that come with them, such as the costs and the switch to new technologies. They also talk about photovoltaic systems, battery storage and the regulations that make installing smart meters mandatory. They also cover the challenges and the fun they had programming with LLMs, as well as their experiences with new tech gadgets.

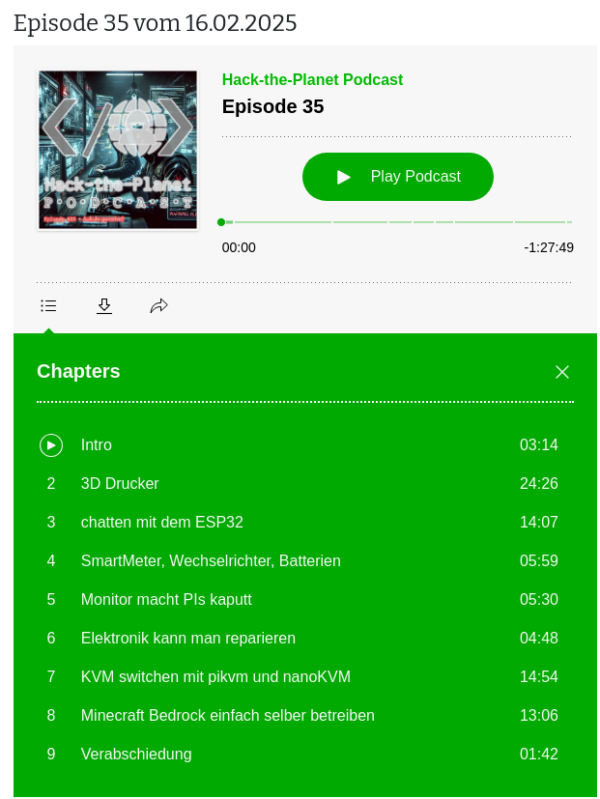

Hack-the-Planet Podcast Episode 35: Aufruhr generiert!

In the 35th episode of the Hack-the-Planet podcast, Daniel and Andreas discuss a wide range of topics around technology, 3D printing, personal projects and community events. They start with a look back at the long break since the last episode and share their experiences with technical problems and updates to their computers and software.

A central topic is 3D printing, with Daniel sharing his experiences with various 3D printers and materials such as PLA and PETG. He describes the challenges and learning curves of 3D printing as well as the post-processing of prints. Andreas reports on his projects, including building a complete trooper uniform from Star Wars and a life-size B1 battle droid. He also talks about science fiction meetups and cosplay events, in particular the annual meetup in Speyer, and describes the technology museums in Speyer and Sinsheim.

Daniel and Andreas also discuss various technical gadgets, including the use of the ESP32 for chat applications on airplanes, and share their experiences with the safety and storage of lithium polymer batteries.

Another topic is setting up their own Minecraft server for the family and the challenges of using Xbox and other consoles for multiplayer games.

How about an Eki Stamp, Goshuin, Tetsuin collection app?

english version

I am currently contemplating the development of a mobile application that allows users to discover and collect various Japanese cultural stamps, such as 駅スタンプ (eki stamps), 御朱印 (goshuin), and 鉄印 (tetsuin). Additionally, this app will enable users to share their collections. My plan is to utilize OpenStreetMap data and provide functionality for users to contribute new stamp locations to the OSM database directly from the app. I have prepared a comprehensive “vision-readme” document that outlines the initial version of the application, detailing various aspects like functionalities, design considerations, and target audience.

I am seeking support as I currently lack expertise in adding structured data to OSM. My experience with OSM data and app development includes hosting my own Overpass server with a full global dataset. This server supports two iOS mobile applications I developed: (1) miataru and (2) Toilets around me.

I am in the research and conceptualization phase and am looking for collaborators interested in contributing to the concept, implementation, and operation of this project.

You can find more details on the vision and concept here:

Overview

EkiStamp Quest is an engaging mobile application designed for travelers in Japan. It’s a perfect companion for those who enjoy collecting unique Eki Stamps from train stations and tourist spots across the country. The app also supports the collection of Goshuin and Tetsuin, catering to a wide range of cultural enthusiasts.

Goshuin are traditional seals collected at temples and shrines, symbolizing a visit and prayer.

Tetsuin are railway station-specific stamps, often celebrating historic or scenic railway lines. EkiStamp Quest offers a fun and interactive way to explore and appreciate Japan’s cultural landmarks, including temples, shrines, and railway stations.

Features

- Stamp Locator: Utilize your location to discover nearby tourist spots, train stations, temples, and shrines with Eki Stamps, Goshuin, and Tetsuin.

- Interactive Map: Navigate through different regions and find locations offering these cultural stamps and seals.

- Collection Tracker: Keep track of the stamps and seals you’ve collected and the locations you’ve visited.

- Stamp and Seal Information: Access detailed information about each stamp and seal, including their design, station history, and cultural insights.

- Community Sharing: Share your collection with others and explore collections from various users.

- Rewards and Challenges: Engage in challenges such as stamp rallies and historic railway journeys to collect special stamps and earn rewards.

- In-App Cropping Tool: Save and personalize your stamp collection with a cropping tool, allowing for cut-out versions of stamps.

- Customizable Collection Books: Choose from various designs to display your stamp collection in a style that suits you.

- Social Media Integration: Easily share your stamps, overlaid on personal photos, on social networking sites.

- Stamp Rally Participation: Join stamp rallies organized by different locations or operators, adding an exciting dimension to your collection experience.

EkiStamp Quest enriches the cultural experience of its users, enabling them to delve into and appreciate the diverse aspects of Japanese heritage through the collection of unique stamps and seals from various locations. This app transforms the traditional hobby of stamp collecting into an interactive and memorable journey through Japan’s rich cultural landscape.
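As a rough illustration of how the Stamp Locator could use OpenStreetMap data, here is a minimal sketch that builds an Overpass QL query for candidate locations around a position. It relies only on the general-purpose OSM tags `railway=station`, `amenity=place_of_worship` and `religion=buddhist|shinto`; how the stamps themselves would be tagged is exactly the open question of this post, so nothing stamp-specific is queried. The function name, the default radius and the example coordinates are assumptions made for this example.

```python
def build_overpass_query(lat: float, lon: float, radius_m: int = 1000) -> str:
    """Build an Overpass QL query for candidate stamp locations near a point.

    Only finds stations, temples and shrines. Whether a stamp or seal is
    actually offered there is NOT part of this query (no tag assumed).
    """
    around = f"(around:{radius_m},{lat},{lon})"
    return (
        "[out:json][timeout:25];\n"
        "(\n"
        f'  nwr["railway"="station"]{around};\n'
        f'  nwr["amenity"="place_of_worship"]["religion"~"^(buddhist|shinto)$"]{around};\n'
        ");\n"
        "out center;"
    )


if __name__ == "__main__":
    # Approximate coordinates near Tokyo Station, used purely as an example.
    print(build_overpass_query(35.6812, 139.7671, radius_m=500))
```

The resulting string can be sent to any Overpass API endpoint, for example a self-hosted server like the one mentioned above.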

Contact

To ask any question or offer help, please contact me through the comment function of this blog or by email: bietiekay -at- gmail.com

japanese version

私は日本語のネイティブスピーカーではなく、翻訳ツールを使っています。

こんにちは、現在私は、駅スタンプ、御朱印、鉄印などの様々な日本の文化的なスタンプを発見し、収集できるモバイルアプリケーションの開発を検討しています。さらに、このアプリではユーザーが自分のコレクションを共有できるようになります。私の計画は、OpenStreetMapのデータを利用し、ユーザーがアプリから直接OSMデータベースに新しいスタンプの場所を追加できる機能を提供することです。私は、アプリケーションの初期バージョンを概説する包括的な「ビジョンリードミー」ドキュメントを用意しました。これには、機能、デザインの考慮事項、対象オーディエンスなど、さまざまな側面が詳述されています。

私は現在、OSMに構造化データを追加する専門知識が不足しているため、サポートを求めています。私のOSMデータとアプリ開発の経験には、全世界のデータセットを持つ自分自身のOverpassサーバーをホストすることが含まれます。このサーバーは、私が開発した2つのiOSモバイルアプリケーションをサポートしています:(1) miataru および (2) Toilets around me。

私は現在、研究および概念化の段階にあり、このプロジェクトのコンセプト、実装、運用に貢献したいと思っているコラボレーターを探しています。

ビジョンとコンセプトの詳細はこちらでご覧いただけます:

概要

EkiStamp Questは、日本を旅する人々向けの魅力的なモバイルアプリです。全国の鉄道駅や観光地で集められるユニークな駅スタンプを愛する人に最適。このアプリは御朱印や鉄印の収集もサポートし、幅広い文化愛好家を対象としています。

御朱印は、寺社で受ける証しの印章で、訪問と祈りを表します。 鉄印は、鉄道駅固有のスタンプで、特に歴史的または景観の良い鉄道路線を記念しています。EkiStamp Questは、日本の寺院、神社、鉄道駅などの文化的名所を探索し、楽しむためのインタラクティブな方法を提供します。

機能

- スタンプ探索: 現在地を利用して、近くの観光地や鉄道駅で駅スタンプ、御朱印、鉄印を見つけます。

- インタラクティブマップ: 地図上で様々な地域をナビゲートし、これらの文化的スタンプや印章がある場所を探します。

- コレクション追跡: 収集したスタンプや印章、訪れた場所を記録します。

- スタンプ・印章情報: 各スタンプや印章の詳細な情報、デザイン、駅の歴史、文化的背景などを提供します。

- コミュニティ共有: 自分のコレクションを共有し、他のユーザーのコレクションを見ることができます。

- 報酬とチャレンジ: スタンプラリーや歴史的鉄道旅行などのチャレンジに参加し、特別なスタンプを獲得し、報酬を得ます。

- クロッピングツール: アプリ内のクロッピングツールを使って、スタンプコレクションを保存し、カスタマイズします。

- カスタマイズ可能なコレクションブック: 自分の好みに合わせた様々なデザインの中からコレクションブックを選べます。

- SNS統合: スタンプを個人の写真に重ねて、SNSで簡単に共有できます。

- スタンプラリー参加: 様々な場所や運営者が主催するスタンプラリーに参加することができます。

EkiStamp Questは、日本の豊かな文化を通じて、ユニークなスタンプや印章を集めることでユーザーの体験を深めます。このアプリは、伝統的なスタンプ収集を、日本の文化的景観を巡るインタラクティブで記憶に残る旅に変えます。

連絡先

ご質問やお手伝いの申し出は、このブログのコメント機能または電子メールでご連絡ください:bietiekay -at- gmail.com

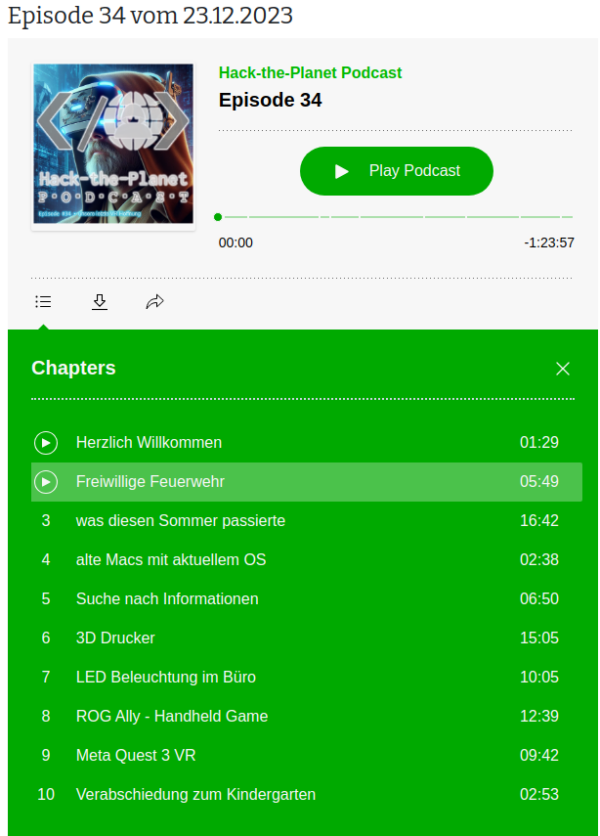

Hack-the-Planet Podcast Episode 34: unsere letzte VR Hoffnung

Shownotes:

- Volunteer fire department (Freiwillige Feuerwehr) – https://de.wikipedia.org/wiki/Freiwillige_Feuerwehr

- TETRA digital radio at the fire department – https://www.feuerwehrmagazin.de/thema/digitalfunk-feuerwehr

- Zoom CEO: Remote work does not work – https://www.theverge.com/2023/8/7/23823464/zoom-remote-work-return-to-office-hybrid

- Pfusch beim Tiefbau: Immer mehr Gemeinden streiten mit Deutsche Glasfaser – Golem.de

- Open Access Network – Wikipedia

- OpenCore Legacy – https://dortania.github.io/OpenCore-Legacy-Patcher/

- ChatGPT export to Markdown – https://github.com/pionxzh/chatgpt-exporter

- LLM Farm – https://llmfarm.site/

- Anycubic 3D printer – https://de.anycubic.com/collections/schneller-3d-drucker-materialien/products/kobra-2-neo

- OnShape 3D Software – Onshape | Product Development Platform

- 3D models for 3D printing – Thingiverse – Digital Designs for Physical Objects

- Persistence of Vision Raytracer – http://www.povray.org/

- Blender – https://www.blender.org/

- Govee – Govee – Ihr Leben wird intelligenter – EU-GOVEE

- Govee on Google Maps – https://maps.app.goo.gl/SoUovsYyTPSxsFnj9

- Steamdeck OLED – Introducing Steam Deck OLED

- ROG Ally – https://rog.asus.com/de/gaming-handhelds/rog-ally/rog-ally-2023/

- Starfield – https://bethesda.net/de/game/starfield

- Cyberpunk Phantom Liberty – https://www.cyberpunk.net/us/de/phantom-liberty

- Persona 5 – https://de.wikipedia.org/wiki/Persona_5

- Meta Quest 3 – https://www.meta.com/de/quest/quest-3/

- Motion Sickness – https://de.wikipedia.org/wiki/Reisekrankheit

- Red Matter – https://redmattergame.com/

- Red Matter 2 – https://redmatter2.com/

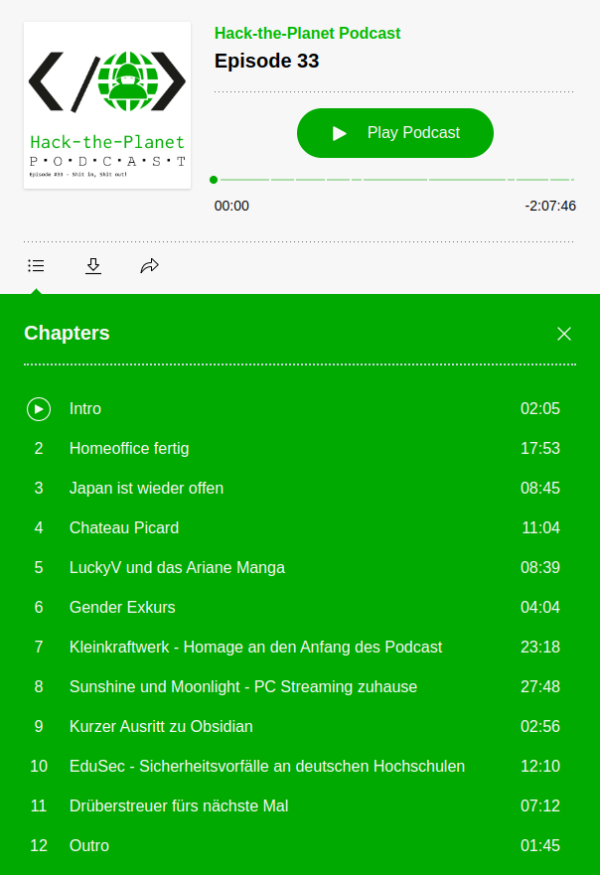

Hack-the-Planet Podcast Episode 33: Shit in, Shit out!

After more than a year we got together again and recorded an episode. But hear for yourselves:

Links:

- Wieland plug vs. Schuko – https://www.homeandsmart.de/balkonkraftwerk-wieland-steckdose-schuko-steckdose#:~:text=Der%20Wieland%20Stecker%20ist%20aus,die%20%C3%9Cberhitzung%20und%20Brandgefahr%20minimiert.

- Trunking and fiber routers for little money – https://mikrotik.com/

- Edogawa Ryukan Waterworks – https://www.ktr.mlit.go.jp/edogawa/edogawa_index042.html

- Château Picard: https://startrekwines.com

- LuckyV – https://www.luckyv.de

- Ariane manga volume 1 release party stream recording – https://www.youtube.com/watch?v=Vlc_JfYNETk

- Ariane manga volume 1 – https://www.fischerverlage.de/buch/pandorya-ariane-9783733550271

- Ariane manga volume 2 – https://www.fischerverlage.de/buch/pandorya-ariane-hard-reset-daemonen-der-vergangenheit-9783733550301

- GL.Inet travel router – https://www.gl-inet.com/

- Pico4 VR Headset – https://www.picoxr.com/de

- Pico4 Specs: https://www.picoxr.com/de/products/pico4/specs

- PC-VR with VirtualDesktop – https://www.vrdesktop.net

- Games played and streamed – https://youtube.com/@bietiekay

- Sunshine – https://app.lizardbyte.dev/

- Moonlight – https://moonlight-stream.org/

- VirtualHere USB – https://www.virtualhere.com

- Obsidian – https://obsidian.md/

- EduSec – https://aheil.de/edusec/

- ChatGPT – https://en.wikipedia.org/wiki/ChatGPT

Finally arrived in VR – Virtual Reality in late 2022

I have really waited this out. Some “galaxy-sized brains” have been telling us for decades that virtual reality is the next big thing. And it might as well have been.

Almost nobody (me included) cared to even try – and with good reason: there’s no easy way to convey the experience that virtual reality creates. Language and “flat-screen video” are not enough. Even 3D video does not come close.

And I knew this was the case. Apart from a 30-second rollercoaster ride years ago I never had any direct contact with virtual reality technology until late this year 2022.

I did of course read about the technology behind all this: the rendering techniques and the display, sensor, battery and processing hardware. I had read about the need for high frame rates to get a believable and enjoyable experience. If the hardware was not up to the job, the papers said, you would feel sick very fast.

So I hesitated for years to purchase anything related to this. I wanted to “wait it out” as I had calculated that a good set of hardware and software would easily run into 2k-5k euro territory.

This year the time had come: the prices were down significantly for all components needed. Even better: there were some new hardware releases that tried to compete with existing offerings.

Of course the obvious thing to do would have been to purchase either a Valve Index or one of the Oculus, eh, Meta VR headsets. But that would have easily blown any budget and none of these is actually technologically interesting at the end of 2022.

My list of requirements was like this:

- lightweight and comfortable to wear

- inside-out tracking (see: Pose Tracking)

- CPU+GPU inside – the headset needs to be able to work stand-alone for video playback and gameplay

- battery for at least 1-2 hours of wireless play

- touch+press controllers

- capable of being used as SteamVR / PCVR headset – wireless and wired

- Pancake lenses (as in “no fresnel”)

- do-not-break-bank price

And what can I say. There was at least one VR headset released in December 2022 that fit my requirements: PicoXR’s PICO 4 headset.

Pico 4 VR headset

So I went ahead and purchased one – which was delivered promptly. It came with a charger, a USB-C cable, two controllers and the headset itself. I bought the case separately to carry it around and store it safely when not in use.

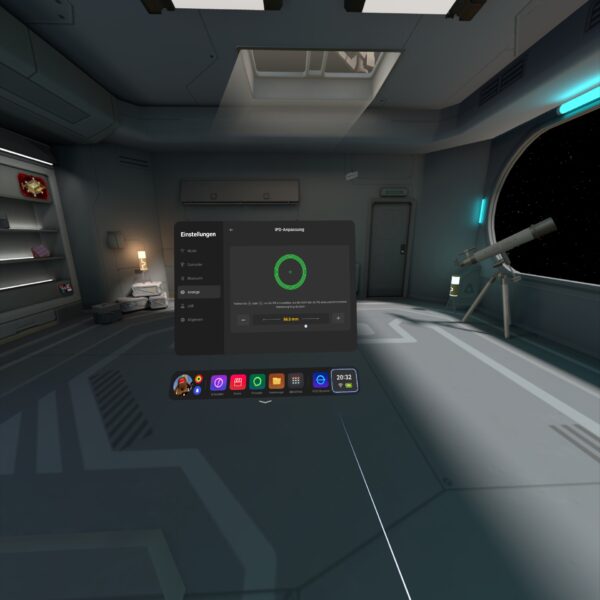

At first I tried only applications and games that can be run directly on the headset. Of course some video streaming from YouTube and the likes. There is VR/180/360 content readily available with a huge caveat: I quickly learned that even 8K video is not enough pixels when it’s supposed to fill 360 degrees around you. 8K video is rather the minimum that starts to look good.

Then there are video formats. Oh god, there are formats. I’d probably need another blog article just for VR video formats in 180 or 360 degrees. Keep in mind that you can add 3D to the equation as well. And if you want decent picture quality you easily find yourself pushing 60 frames per second of 8K (or more) times 2 (eyes) through the GPU of the little head-mounted display. The displays can do 2160×2160 per eye, so you can imagine how much video you need to push to use their full potential. And then think: 2160 per eye is NOT yet a pixel density at which individual pixels become invisible; you can still make them out sometimes. I do not see a screen-door effect and the displays are really, really good. But more pixels is… well, more.

Anyways: there’s plenty of storage on the device itself, so on the next airplane trip I can look funny with the headset on while being immersed in a movie…
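To put rough numbers on this, here is a small back-of-the-envelope sketch. It takes 8K as 7680×4320 and assumes a horizontal field of view of about 105 degrees for the headset; that field of view is an assumption for illustration and not a figure from this article.

```python
# Rough pixel arithmetic for VR video.
# Assumptions: "8K" means 7680x4320, and the headset covers about
# 105 degrees horizontally (assumed value, not taken from the article).
EIGHT_K = (7680, 4320)
PANEL_PER_EYE = (2160, 2160)
ASSUMED_FOV_DEG = 105


def pixels_per_second(width, height, fps, eyes=1):
    """Raw pixel throughput of an uncompressed video stream."""
    return width * height * fps * eyes


def pixels_per_degree(horizontal_pixels, degrees):
    """Average horizontal pixel density over a viewing angle."""
    return horizontal_pixels / degrees


stream = pixels_per_second(*EIGHT_K, fps=60, eyes=2)
video_ppd = pixels_per_degree(EIGHT_K[0], 360)
panel_ppd = pixels_per_degree(PANEL_PER_EYE[0], ASSUMED_FOV_DEG)

print(f"8K stereo at 60 fps: {stream / 1e9:.2f} gigapixels per second")
print(f"8K spread over 360 degrees: {video_ppd:.1f} pixels per degree")
print(f"panel at {ASSUMED_FOV_DEG} degrees: {panel_ppd:.1f} pixels per degree")
```

Under these assumptions an 8K 360-degree video delivers roughly the same pixels per degree as the panel can show, which fits the impression that 8K is where such video starts to look good.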

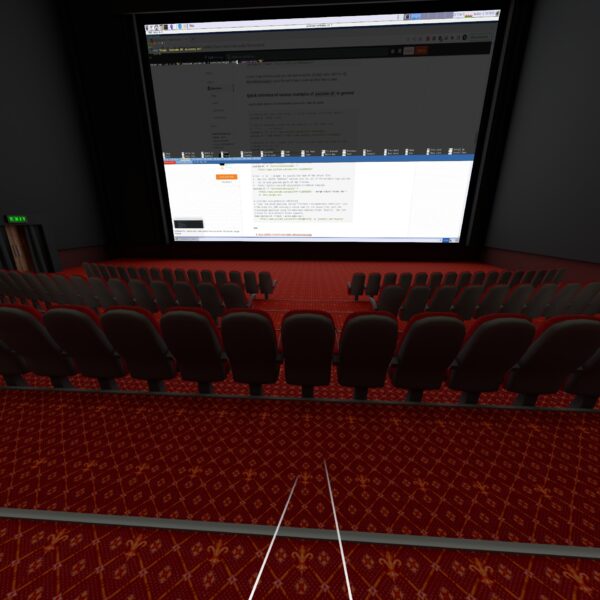

Or a remote desktop session:

After about a week of testing and playing (Red Matter 1 for example…) I was convinced that I’d like the technology and the experiences it offered.

The conclusion after the first week was as good as I could have hoped with the first 500 euro investment done: I would not get sick moving around in VR. I would enjoy the things offered. I was convinced that I was able to experience things otherwise not possible.

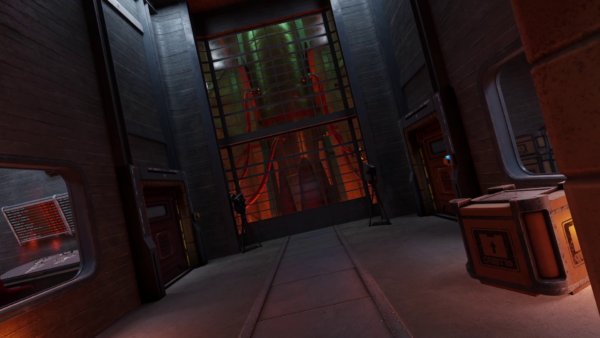

And I was convinced that I could not have come to any conclusion without actually owning such a headset and trying it myself. It’s just not possible to describe the feeling of walking into a 3-dimensional world that gets rendered by a computer and fools your brain so well. Of course it’s NOT reality. That’s not the point. I do not feel like going to the holo-deck. But it feels like computer games become “3D touchable”. In virtual reality games there is a lot more going on than in non-VR games. And that’s the main reason that there are not more good VR games. It’s hard to build an immersive, believable game world. It’s real effort and I named Red Matter specifically because it was one of the most immersive and approachable, non-stressful puzzle games I have played.

Being convinced brought up the question: Now what?

Until this point there was no computer in our household that could even dream of powering a modern PCVR experience. But there was one Windows PC which I could use to do the due diligence for “what to buy” and whether it would work at all the way I wanted.

What did I want?

- a set-up that would allow me to play any modern PC VR game

- play the games with at least high details and with framerates and resolutions that would not make me sick

- no wired connection to the computer necessary

- ideally the computer would not even be in the same room or floor

I had to do some testing first to figure out if the most basic requirements would work. So I purchased “Virtual Desktop” in the headset’s built-in store and installed the streamer app on the one Windows PC in the household that had a very old dedicated GPU.

I did the immediate extreme test. The computer connected to the wired network in the house. The headset connected to the house wifi shared with 80+ other devices. And it worked. It worked beautifully. Just out of the box with my mediocre computer I had the desktop screen of the computer floating in front of me. I was able to launch applications and I was even able to run simple 3D VR applications like Google Earth VR. I literally only had Steam and Virtual Desktop installed, clicked around and got the earth in front and below me in no time.

Apparently the headset was smart enough to connect to the 5 GHz WiFi offered in addition to the crowded 2.4 GHz one. Latencies and bandwidth were all in good shape.

To make things a bit more predictable I’ve dedicated a mobile access point to the headset. My usual travel access point (GLinet OPAL) apparently works quite well for this purpose.

It’s connected to the house wired network and creates an access point just for the headset. The headset then has reliable 500+ Mbit/s access to any computer in the household.

After some more playing around and simulating some edge case scenarios I came to the conclusion that this would work. I would not even have to touch a computer to do all this. It could all be done remotely over a fast-enough network connection.

After consulting with my knowledgeable brother-in-law I then settled on a budget and had a computer built for the purpose of VR game streaming. After about 2k euros and 2 weeks of waiting I received the rig and did the most reasonable thing: put it in the server room in the house basement where it’s cool and, most importantly, far enough away from my ears.

So this one is closed up and sitting in the server room. The only thing other than power and ethernet that is plugged into the machine is an HDMI display emulator dongle:

The purpose of this HDMI plug without anything connected to it is to tell the graphics card that there’s a display connected. It even tells the graphics card about all those funky resolutions that the ghostly display can do… When there’s nothing connected to the HDMI ports the only resolutions that you can work with out-of-the-box are the default resolutions up to 1080p. This device enables you to go beyond 2160p.

I did a bit of setting-up for wake-on-lan and some additional fall-back remote desktop services in case something fails.

To wake-up the machine it’s sufficient to send the “magic packet” – either through the remote play client built-in features (Moonlight can do it…) or through the house-internal dashboard:
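For reference, the “magic packet” itself is simple: six 0xFF bytes followed by the target MAC address repeated 16 times, usually sent as a UDP broadcast. The following is a minimal sketch of that format and not the code behind the dashboard; the function names, the broadcast address and UDP port 9 are assumptions (ports 7 and 9 are common conventions), and the MAC address in the usage comment is a placeholder.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Return the 102-byte Wake-on-LAN payload for a MAC like 'aa:bb:cc:dd:ee:ff'."""
    cleaned = mac.replace(":", "").replace("-", "")
    if len(cleaned) != 12:
        raise ValueError(f"not a valid MAC address: {mac!r}")
    mac_bytes = bytes.fromhex(cleaned)  # raises ValueError on non-hex input
    # 6 x 0xFF as synchronization stream, then the MAC 16 times.
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet on the local network (address/port are assumptions)."""
    packet = build_magic_packet(mac)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (broadcast, port))


# Usage (placeholder MAC address):
# send_magic_packet("00:11:22:33:44:55")
```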

streaming games

For VR game streaming it’s as I had tested beforehand: Steam + Virtual Desktop doing their thing. Works, as expected, very pleasantly even with high/ultra details set.

The machine can also be used to play normal non-VR games. For this I am using the open source Sunshine (server) / Moonlight (client) combination with great success.

I can just open up the Moonlight app on my iPad, iPhone, RaspberryPi or Mac, connect to the computer in the basement and use it at 60-120 fps in 1080p to 4K resolutions without even noticing that there is no computer under the desk…

Oh – I do notice that there’s no computer under the desk because of the absence of any noise while using it.

What I have found is really astonishing to me – I was not expecting such a well integrated and working solution without having to solve problems first.

Virtual Reality games are just working. It’s like installing, starting, works. The biggest issue I had run into was the controllers not being correctly mapped for the game – easily solvable by remapping.

I “upped” the stakes a bit a couple of days ago when I installed OBS Studio to live stream my VR session of playing Red Matter 2 (the sequel…).

After installing OBS and setting up the “capture this screen” scene it was very nice to see that not only did OBS record the right displays (when set right) but out of the box it recorded the correct audio AND the correct microphone. Remember: I am playing in a specific room at the top floor of my house. Using the awesome tracking of the head-set for room-scale VR to the fullest.

The computer in the basement means that the only connection from headset to the computer is through Virtual Desktop – 5 GHz WiFi – Ethernet – Virtual Desktop Streamer.

I did not expect a microphone to be there but it is. I did not expect the microphone to work well. But it does. I did not expect the microphone to be seamlessly forwarded to the computer in the basement and OBS to then effortlessly pick it up correctly as a separate microphone for the Twitch streaming. I was astounded. It-just-worked.

adding a (USB) gamepad

After a bit of fooling around, especially with standard PC games, I found that some games make me miss a gamepad. It was out of the question to connect a gamepad directly to the computer the games ran on – that one was in the basement and no USB cable is long enough.

I remembered playing with USB-over-IP in recent years just for fun but also remembered not getting it to work properly ever. After investigating any hardware options I decided to give software another look.

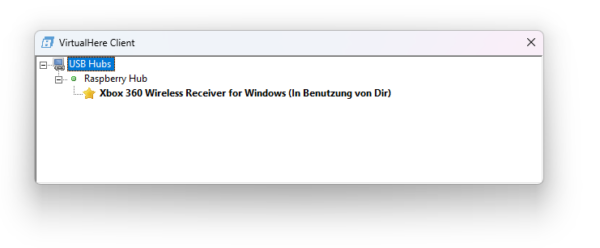

Apparently a company called “VirtualHere” had seen their chance since I played around the last time. They offer a server and client software that seemingly can run anywhere.

So I picked an old RaspberryPi 1 out of the drawer and flashed a fresh version of RaspberryPi OS. I booted it up and copied over the one Linux ARM7 binary that VirtualHere offers. It started without issues or further dependencies.

On the Windows Machine you also only have to run a simple application and it’ll scan the network for “VirtualHere USB hubs”.

For me the RaspberryPi immediately showed up as a USB hub. I plugged in my old Xbox 360 wireless receiver and it showed up and connected on Windows. When I then powered up an Xbox 360 wireless controller it made the well known Windows “device plugged in” sound and I had a working gamepad ready to use in Windows – all over the network.

I cannot notice any added latency for the controller. And essentially anything I had plugged into the USB ports of the RaspberryPi could immediately be used/mounted on the computer in the basement all over the already existing network.

It cannot be overstated how little hassle this solution was over any other way I know and would have tried. The open source USB/IP project is still there and seems to work on modern Windows BUT you have to deal with driver signing and security issues yourself.

VirtualHere does cost money but it’s at least not a subscription but a perpetual license you can purchase after trying out the fully functional 1-device versions. For me it now brings working USB-over-my-existing-network to any device I want around the house. There are some other uses I will look into – like that flatbed scanner I have. That camera that can now connect anywhere via USB… so many options…

conclusion

I went head-first into the virtual reality rabbit hole and it’s quite fun so far. The costs of this came down far enough and I was able to learn a lot of things I would otherwise not have been able to. Looking into the technology-side of how all this comes together and how latencies add up, build or ruin an experience is remarkable.

If you want to get an (albeit clumsy and not 3D) look at one of the many things you can do in VR – take a look at a VR session recording from two days ago:

Bonus: The GLinet OPAL travel router does have 1 USB port. You can run the VirtualHere USB hub software as a MIPSEL binary on there and would not need the RaspberryPi anymore. The only thing you must figure out yourself is how to route the traffic out the right ports.

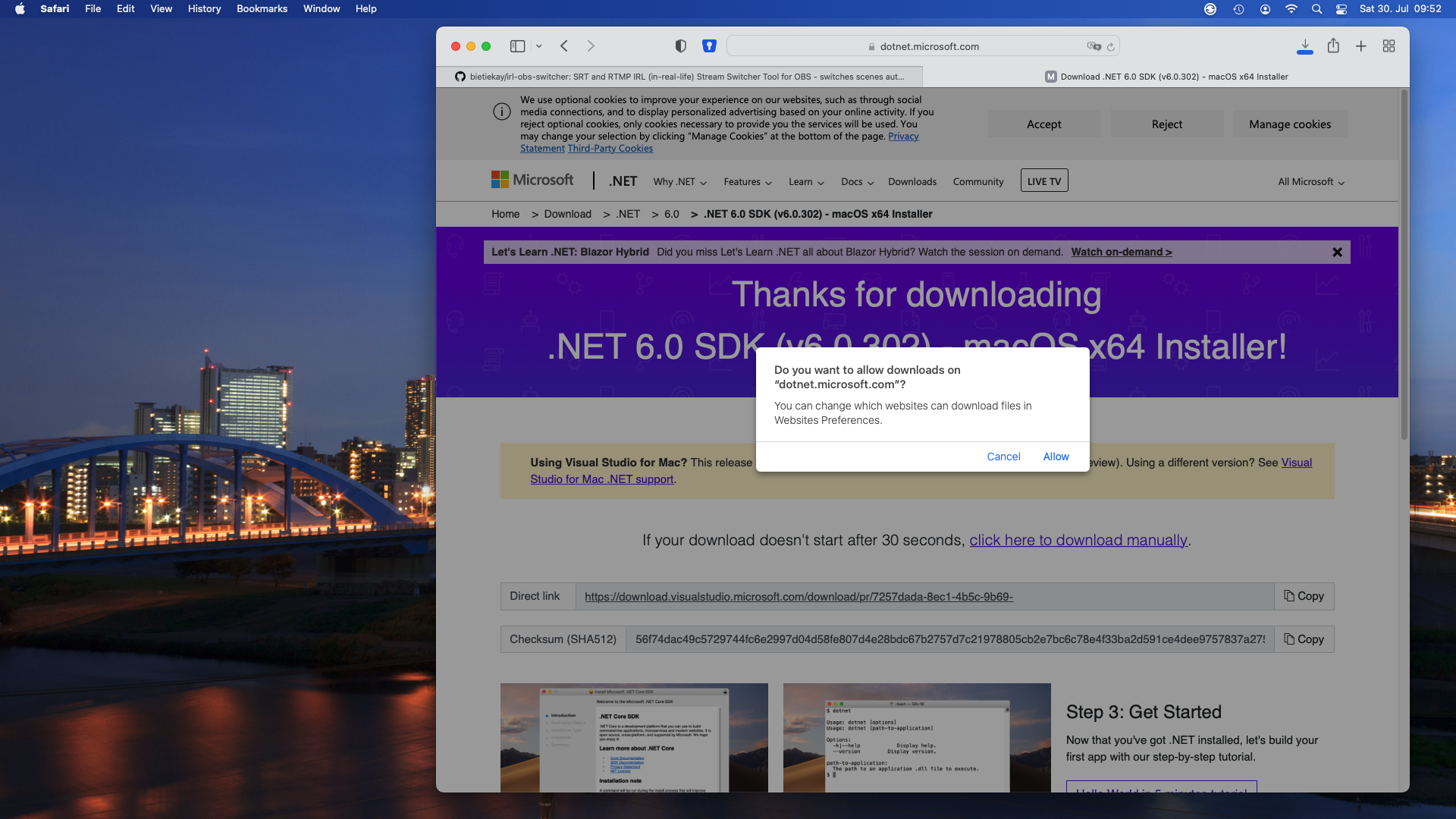

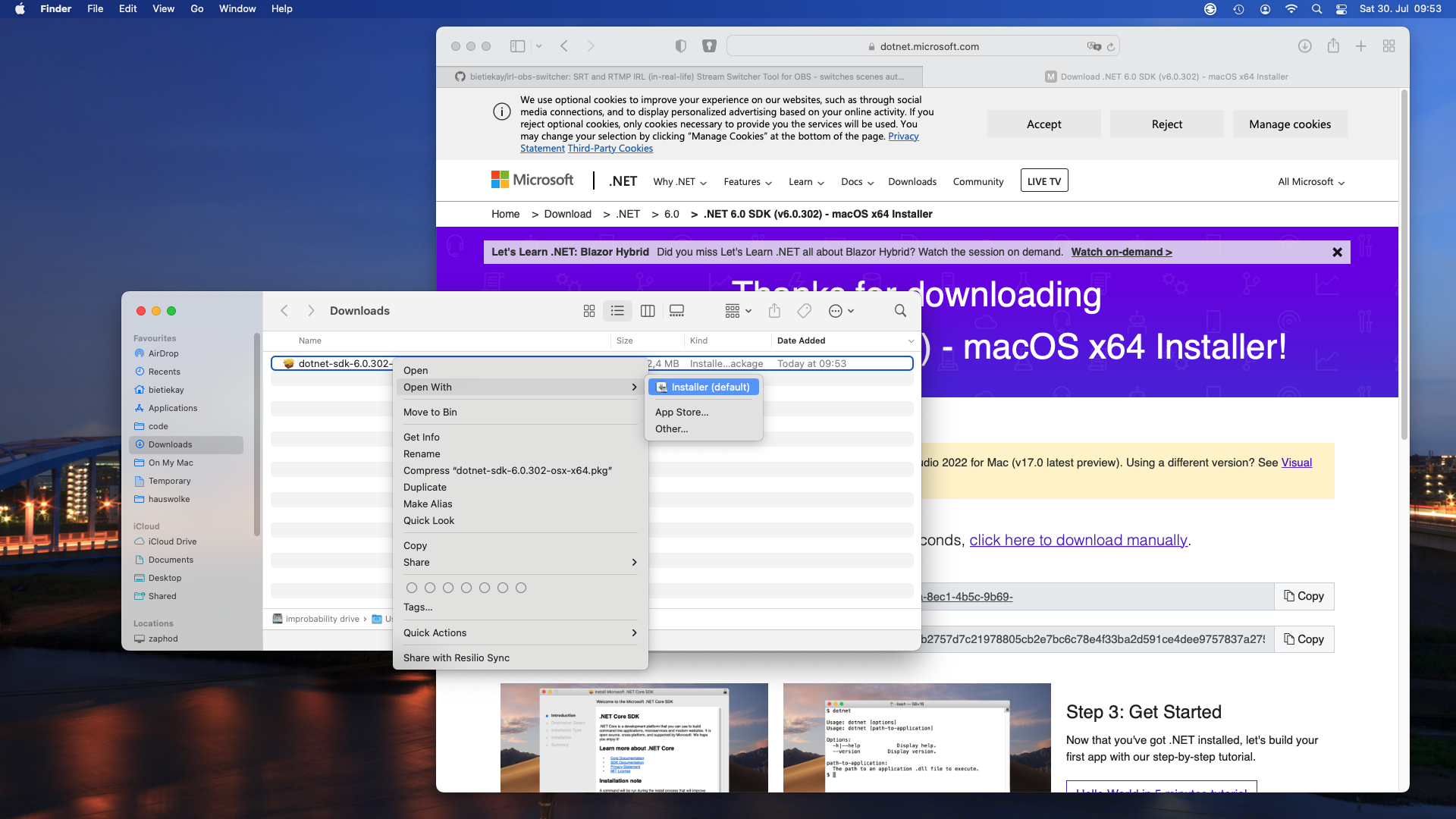

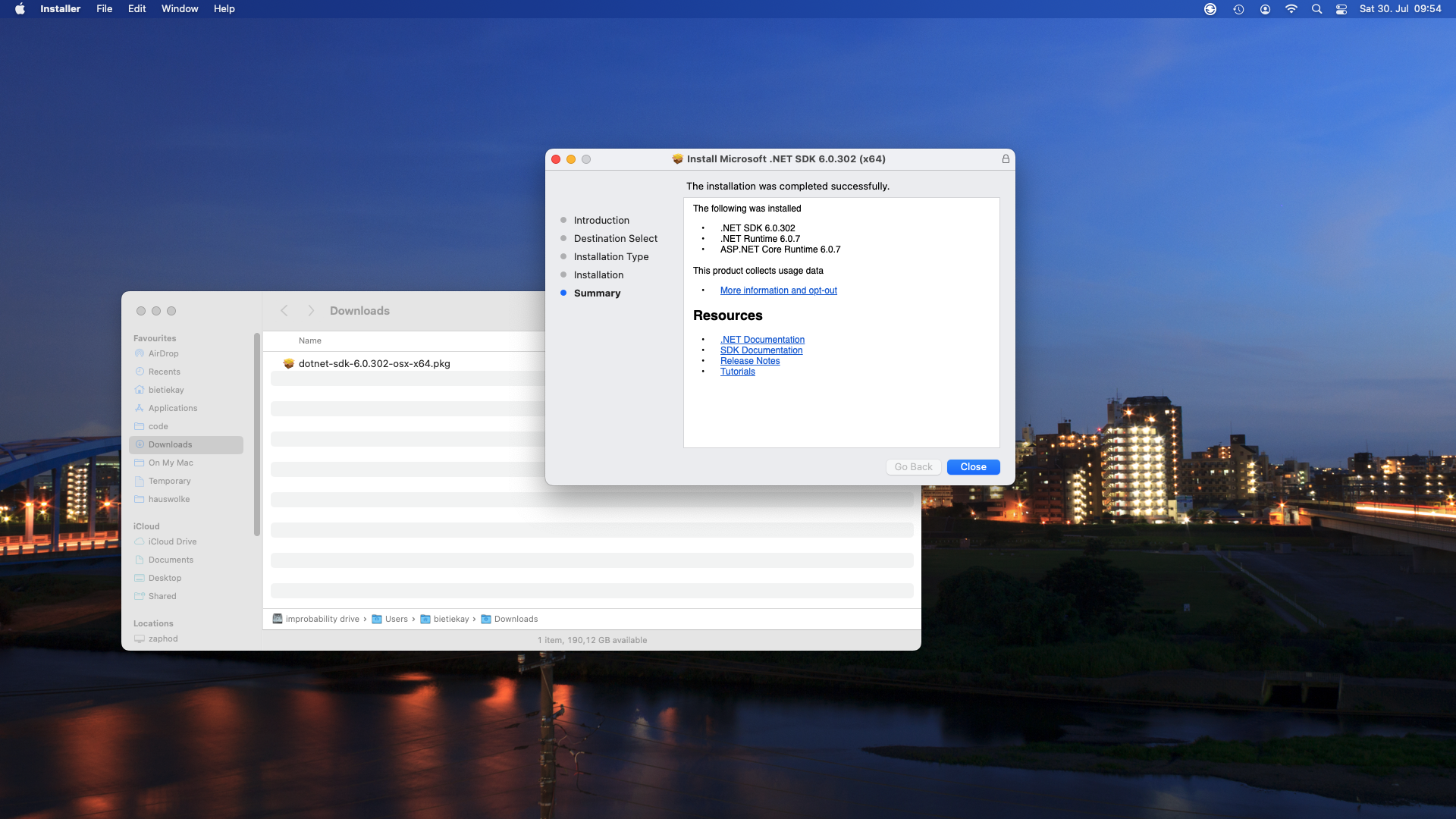

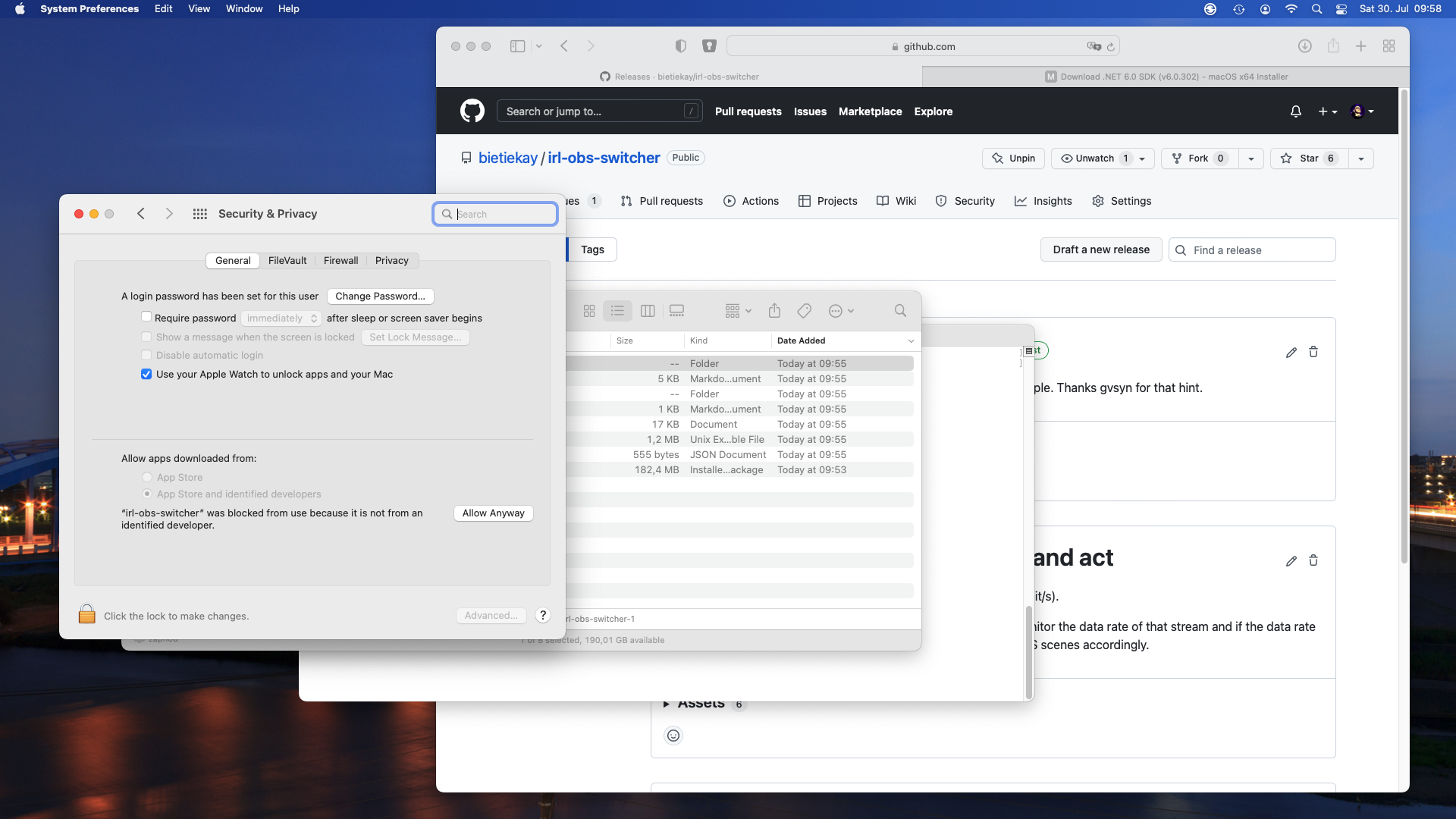

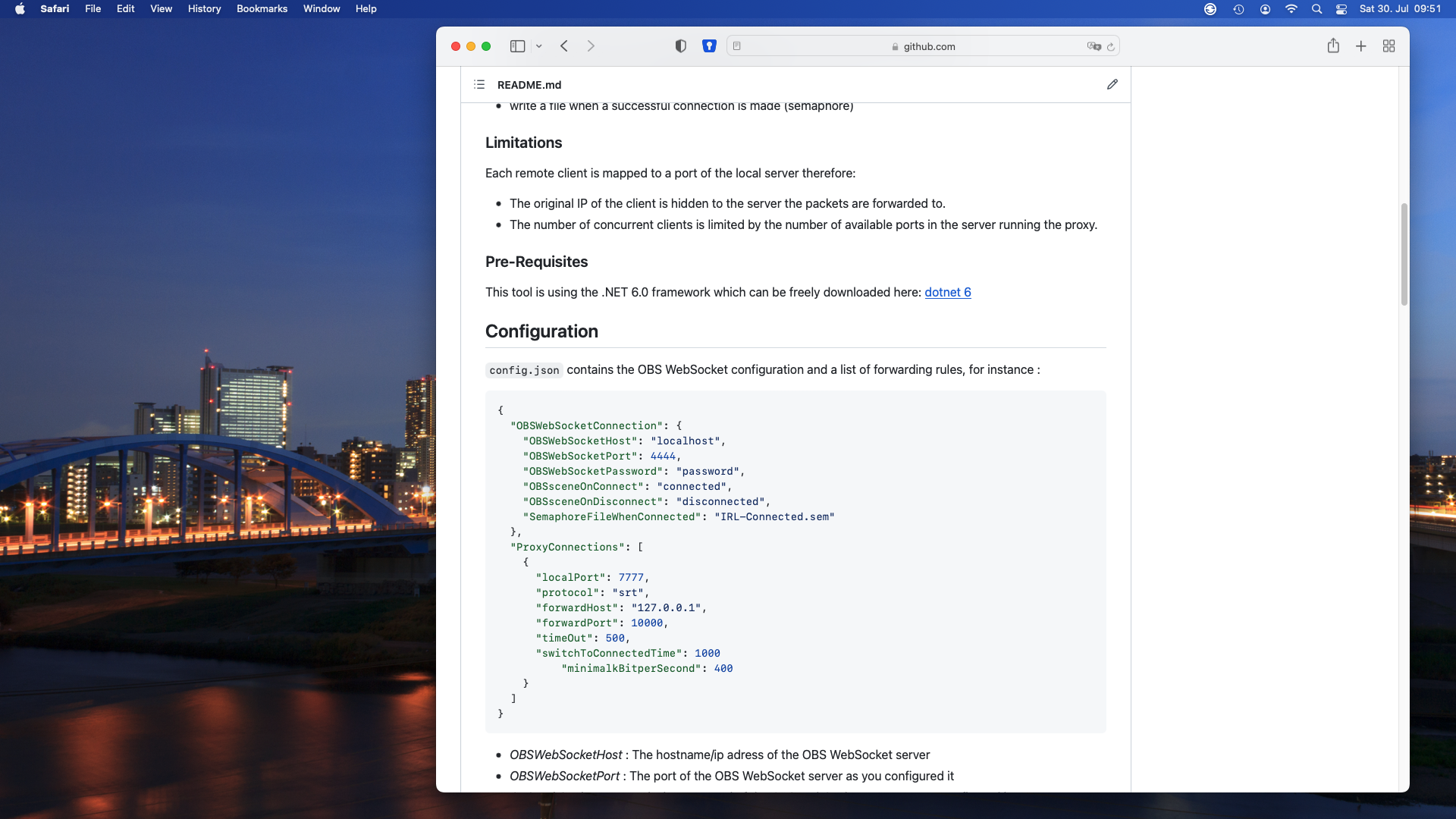

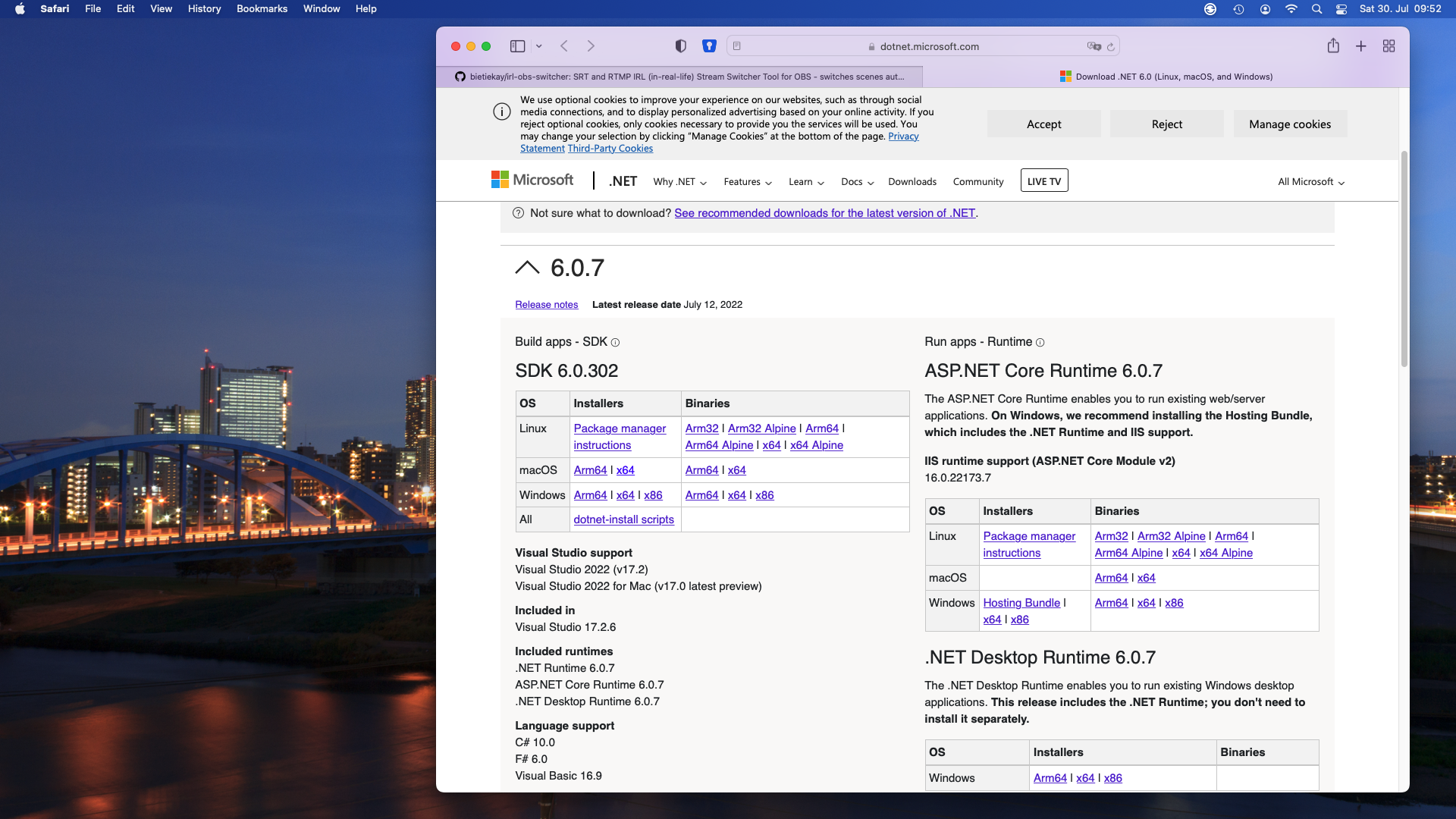

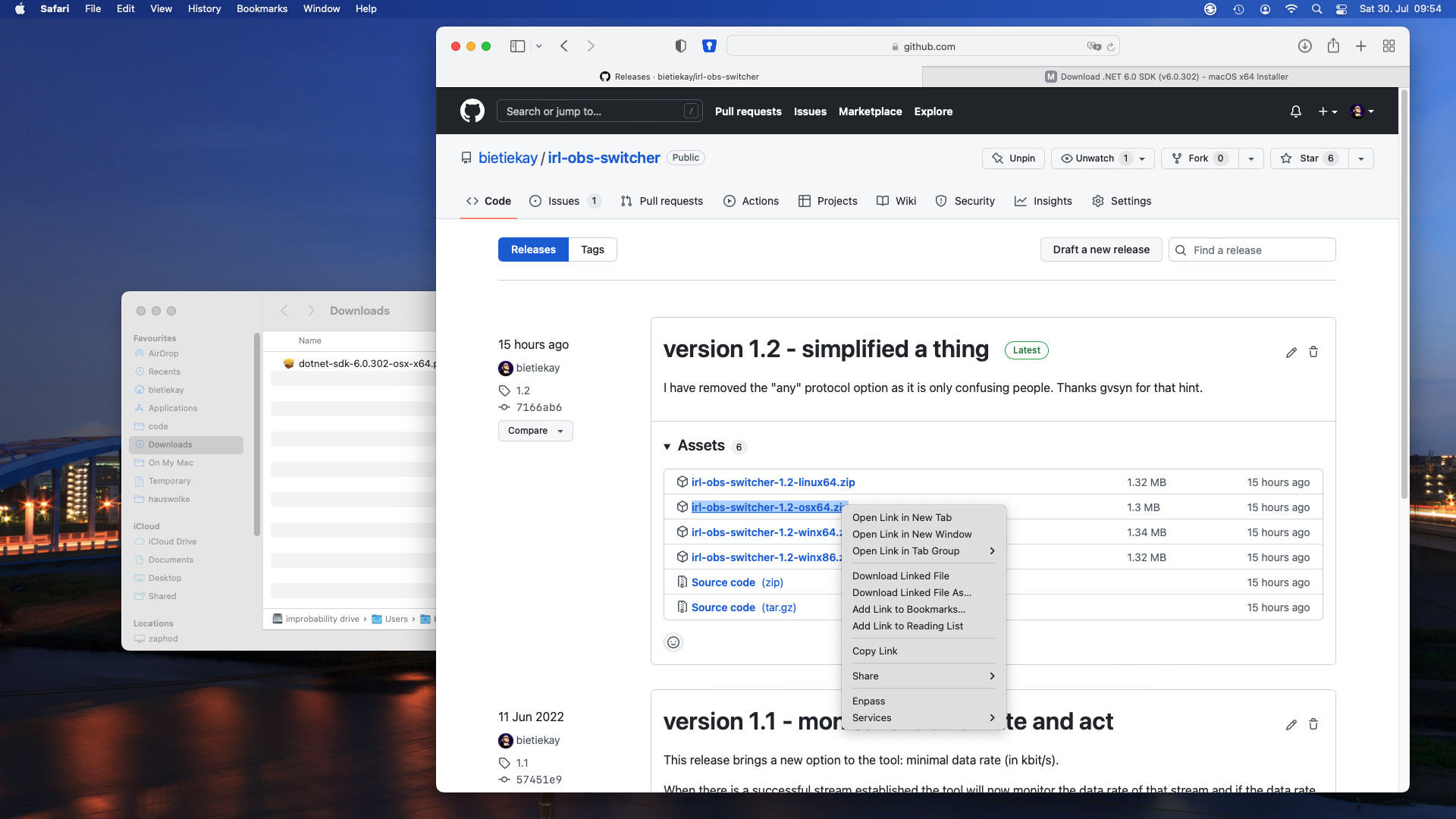

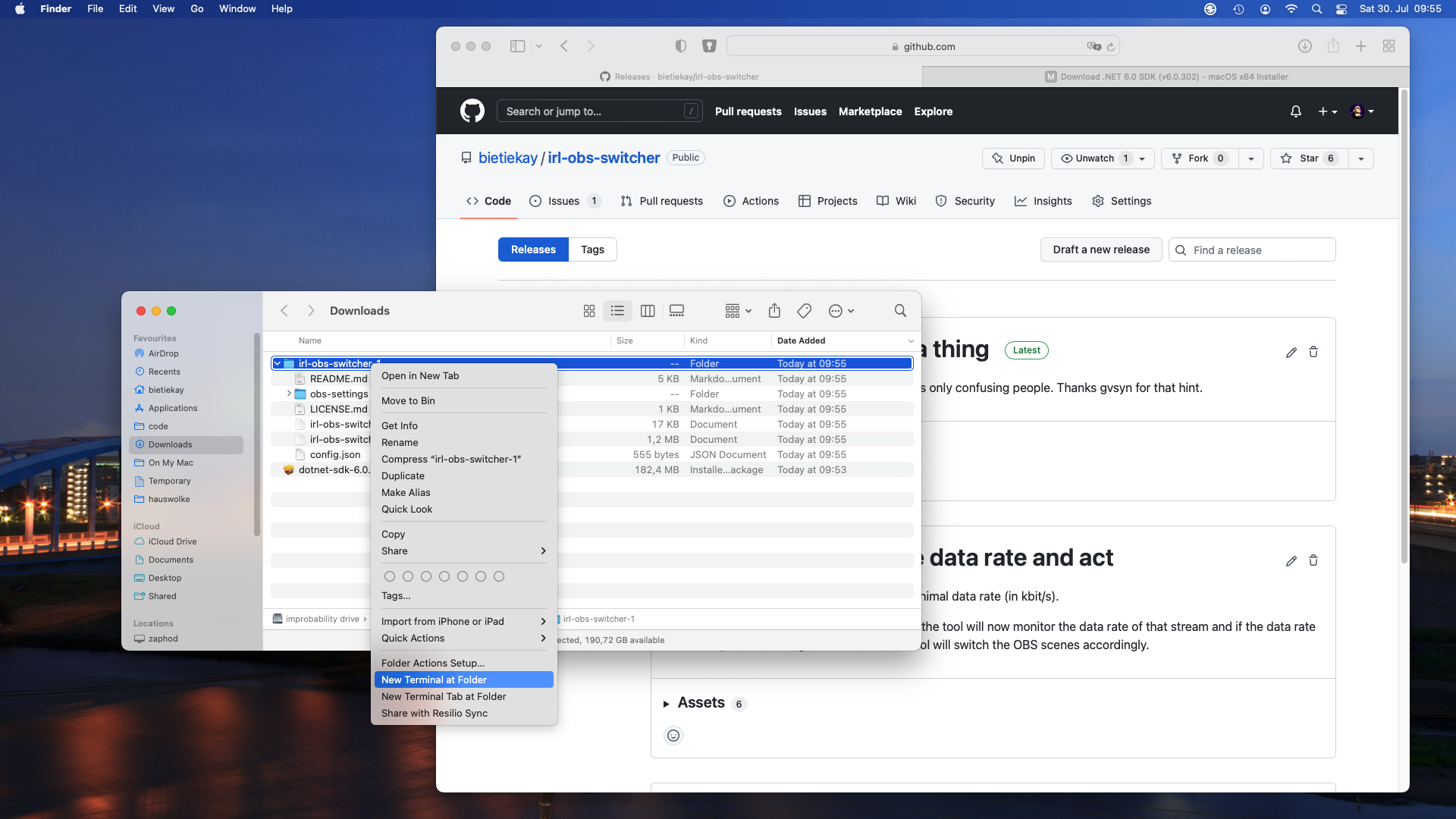

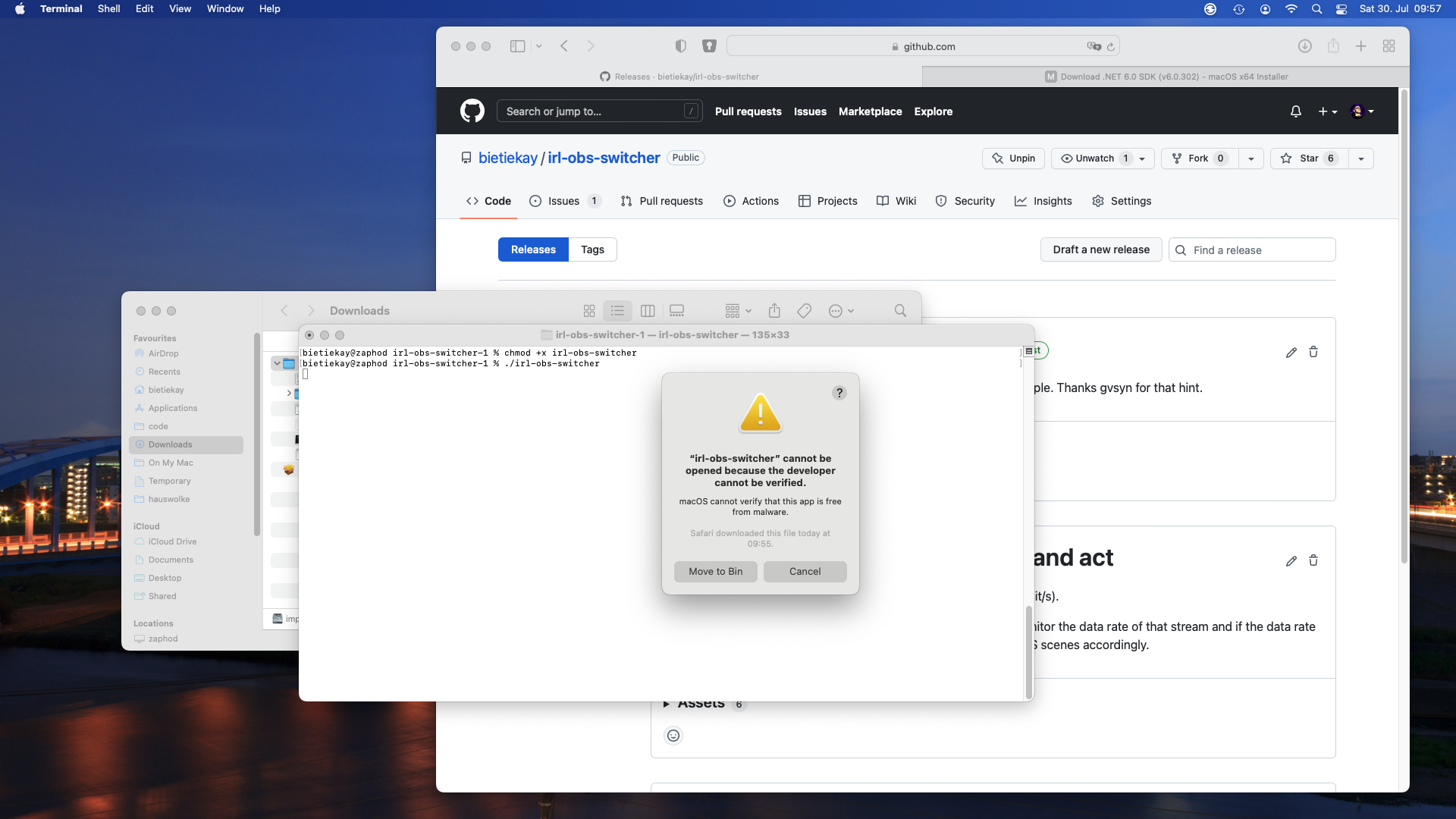

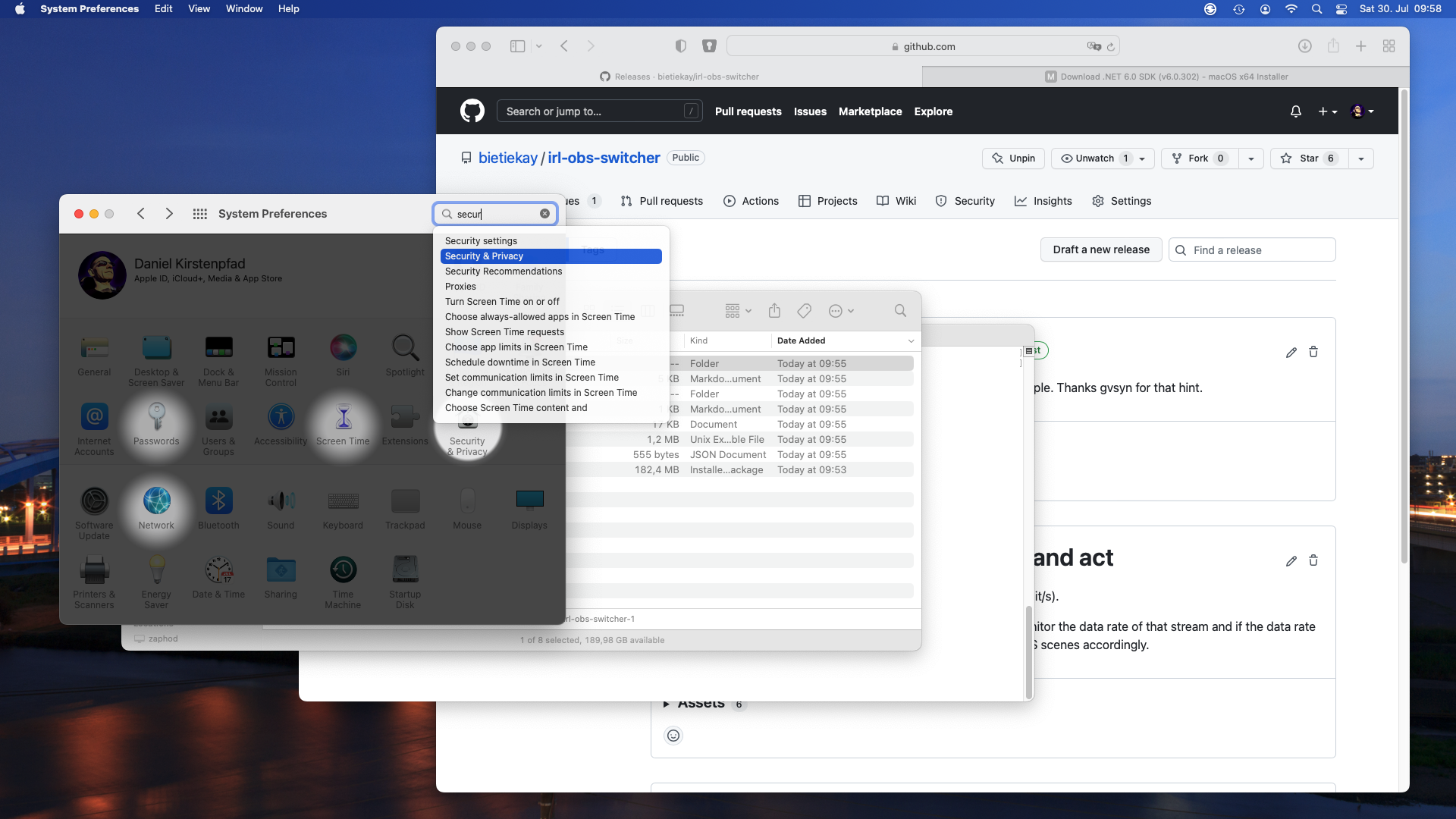

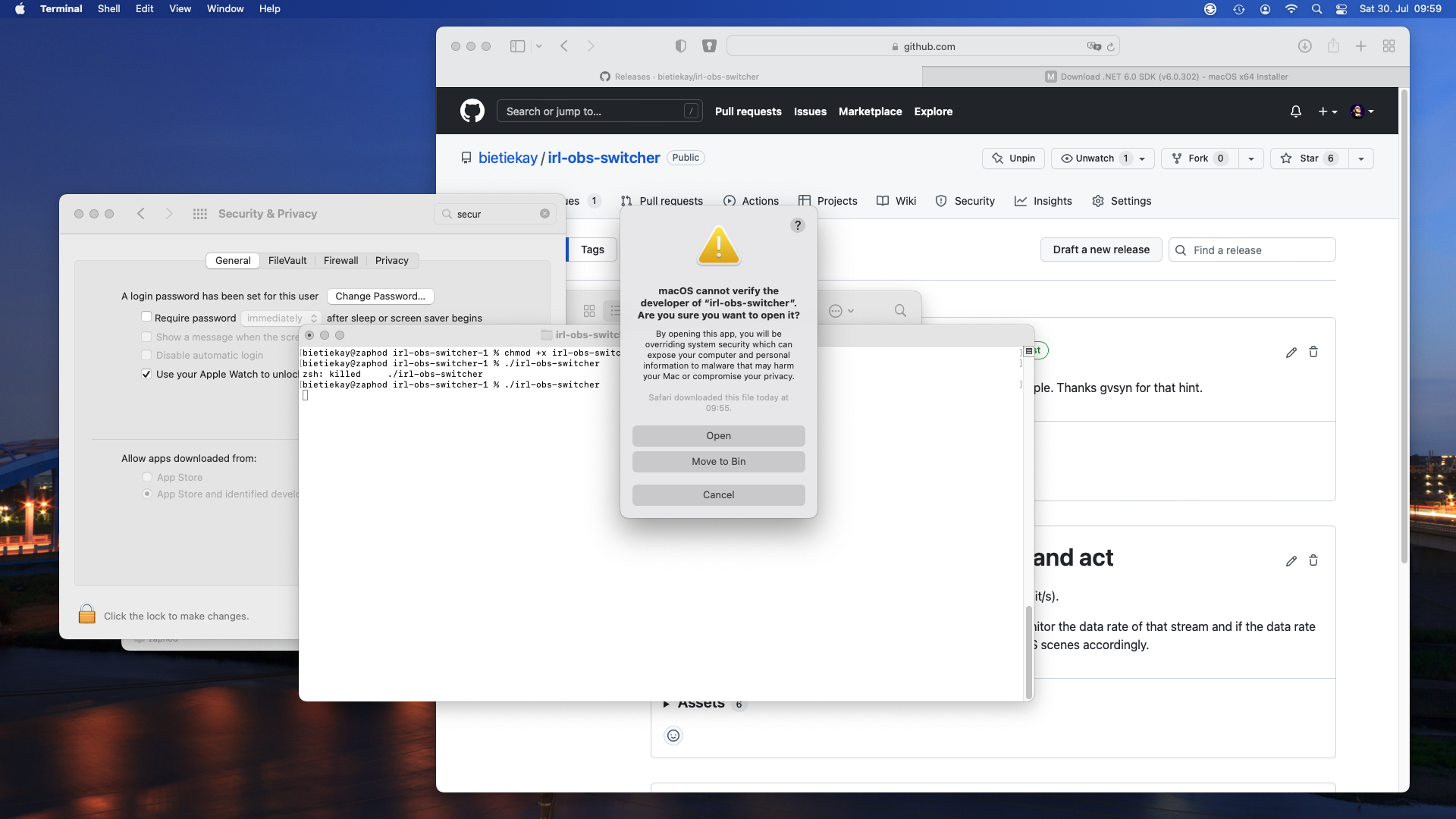

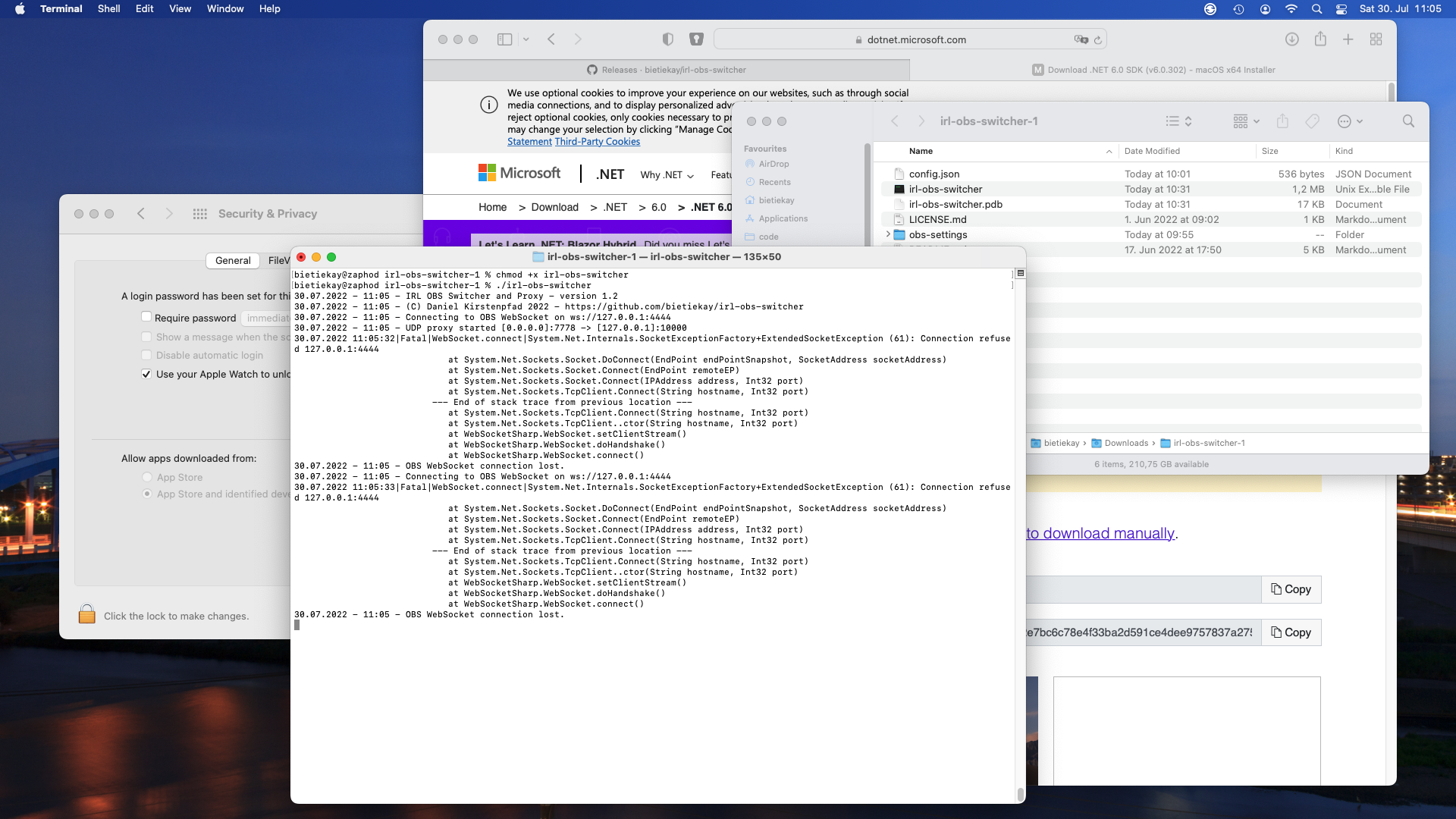

How to run the irl-obs-switcher on macOS?

Last week I received a comment on the initial irl-obs-switcher blog article regarding the use of the tool on macOS.

And good point: Apart from the source code release on GitHub I am generating binary releases for several operating systems and processor architectures.

For Windows it’s quite straightforward. If you do not have dotnet 6 installed you will be guided automatically.

For Linux you gotta install dotnet 6 basically according to the official documentation and then run the irl-obs-switcher file.

For macOS it’s a bit less straightforward – so here are screenshots and comments to guide you through the process:

I hope this short step-by-step works for you. In fact by making it I’ve found some bugs and fixed them along the way.

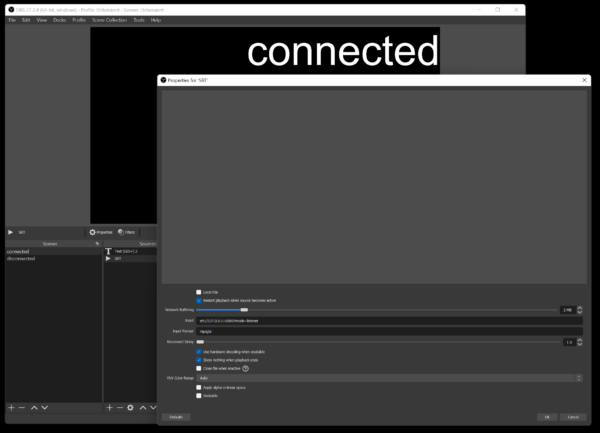

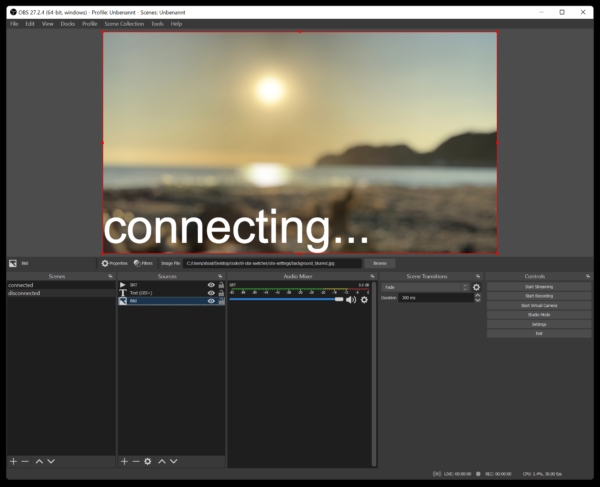

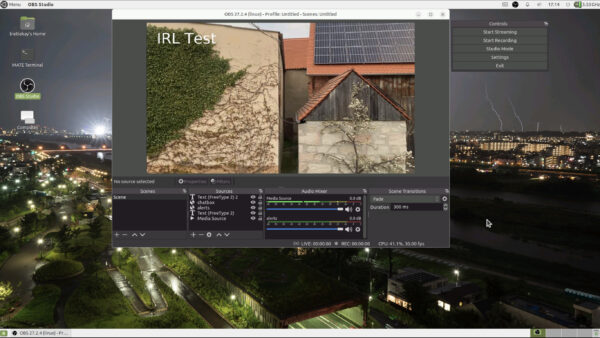

I made an open source IRL (in-real-life) streaming proxy and scene switcher for OBS studio

Ever since I stumbled across several IRL streamers I was intrigued by the concept of it.

IRL or “in-real-life” is essentially the art of streaming everyday life. For hours and totally mobile. Of course there are some great gems in the vast sea of content creators. One of them – robcdee – streams for hours live almost every day and shows you his way around in Japan.

Apart from the content – Japan is great – the technical side of these IRL streaming set-ups is quite interesting. Imagine: These streamers wander around with usually a backpack filled with batteries, several modems (4G/5G…) that load balance and bundle a 2-6 Mbit/s video+audio stream that gets sent to a central server either through SRT or RTMP protocol. This central server runs OBS Studio and receives the video stream offering the ability to add overlays and even switch between different scenes and contents.

After I had a basic understanding of the underlying technologies I went ahead and started building my own set-up. I do have plenty of machines with enough internet bandwidth available so they could be the host machine of OBS Studio. I wanted all of this to live in a nice docker container.

I went ahead and built a docker container that is based upon the latest Ubuntu 21.04 image and basically sets up a very minimal desktop environment accessible over VNC. In this environment there is OBS Studio running and waiting for the live stream to arrive to then send out to Twitch or YouTube.

How I have set-up this docker desktop environment exactly will be part of another blog article.

So far so good. OBS offers the ability to define multiple scenes to switch between during a live stream.

These IRL streamers usually have one scene for when they are starting their stream and two more scenes for when they are having a solid connection from their camera/mobile setup and when they are currently experiencing connection issues.

All of the streamers seemingly use the same tooling when it comes to automatically switching between the different scenes depending on their connectivity state. This tool unfortunately is only available for Windows – not for Linux or macOS.

So I thought I’d give it a shot and write a platform-independent one. Nothing wrong with understanding a bit more about the technicalities of live streaming, right?

So I wrote something: IRL-OBS-Switcher. You can get the source code, documentation and the pre-compiled binaries here: https://github.com/bietiekay/irl-obs-switcher

It runs on Linux, Windows and macOS as I have used .NET 6.0 to create it. It is all open source and essentially just a bit of glue and logic around another open source tool called “netproxy” and OBS WebSocket.net.

I run it inside the docker container together with OBS Studio. It essentially proxies all data to OBS and monitors whether the connection is established or currently disconnected. Furthermore it can be configured to switch scenes in OBS. So depending on whether there is a working connection or not it will switch between a “connected” and “disconnected” scene, all automatically.

So when you are out and about live streaming your day this little tool takes care of controlling OBS Studio for you.
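To illustrate the switching logic described above, here is a minimal sketch in Python. It is not the code of irl-obs-switcher (which is written in .NET); the class name, the timeout value and the idea of deciding purely on “time since the last proxied data” are assumptions for illustration. The actual call into OBS via its WebSocket interface is left out.

```python
import time


class SceneSwitcher:
    """Pick an OBS scene from how recently stream data passed through the proxy."""

    def __init__(self, connected_scene, disconnected_scene,
                 timeout_s=3.0, clock=time.monotonic):
        self.connected_scene = connected_scene
        self.disconnected_scene = disconnected_scene
        self.timeout_s = timeout_s          # hypothetical threshold
        self._clock = clock                 # injectable for testing
        self._last_data = None
        self.current_scene = disconnected_scene

    def on_data(self):
        """Call whenever the proxy forwards bytes from the streamer."""
        self._last_data = self._clock()

    def update(self):
        """Return the scene to switch to, or None if no switch is needed."""
        now = self._clock()
        alive = (self._last_data is not None
                 and now - self._last_data <= self.timeout_s)
        wanted = self.connected_scene if alive else self.disconnected_scene
        if wanted != self.current_scene:
            self.current_scene = wanted
            return wanted
        return None
```

A proxy loop would call `on_data()` for every forwarded chunk and `update()` periodically; whenever `update()` returns a scene name, that name would be sent to OBS as the new current scene.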

Health related Icons for your Apps and Sites

Found that nice heap of Icons that are free to use and high-quality:

Health Icons is a volunteer effort to create a ‘global good’ for health projects all over the world. These icons are available in the public domain for use in any type of project.

The project is hosted by the public health not-for-profit Resolve to Save Lives as an expression of our commitment to offer the icons for free, forever.

https://healthicons.org/about

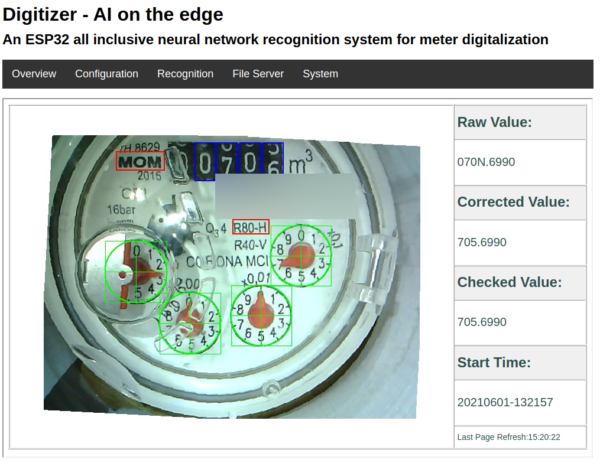

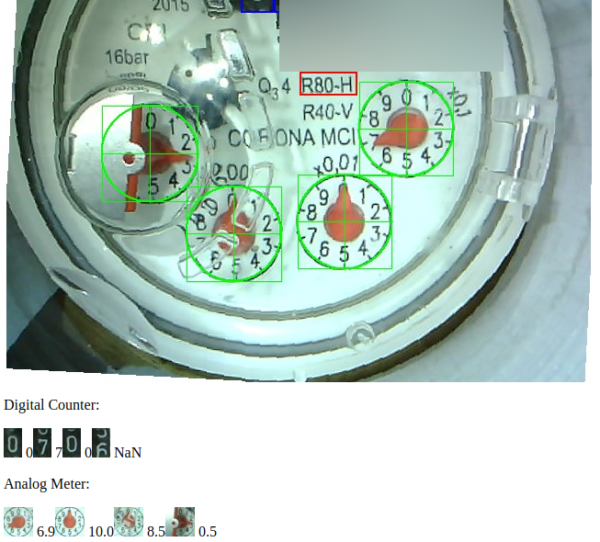

Reading out non-smart (water/gas/…) meters

The only meter in our house that I was not yet able to read out automatically was the water meter.

With the help of a great open source project by the name of AI-on-the-edge and an ESP32-Camera Module it is quite simple to regularly take a picture of the meter, convert it into a digital read-out and send it away through MQTT.

The process is quite simple and straightforward.

- Flash the ready made Firmware image to the module

- Configure the WiFi using an SD card

- Put the module directly over the meter

- Connect to it and setup the reference points and the meter recognition marks

As you can see above all the recognition is done on the ESP32 module with its 4MByte of RAM.

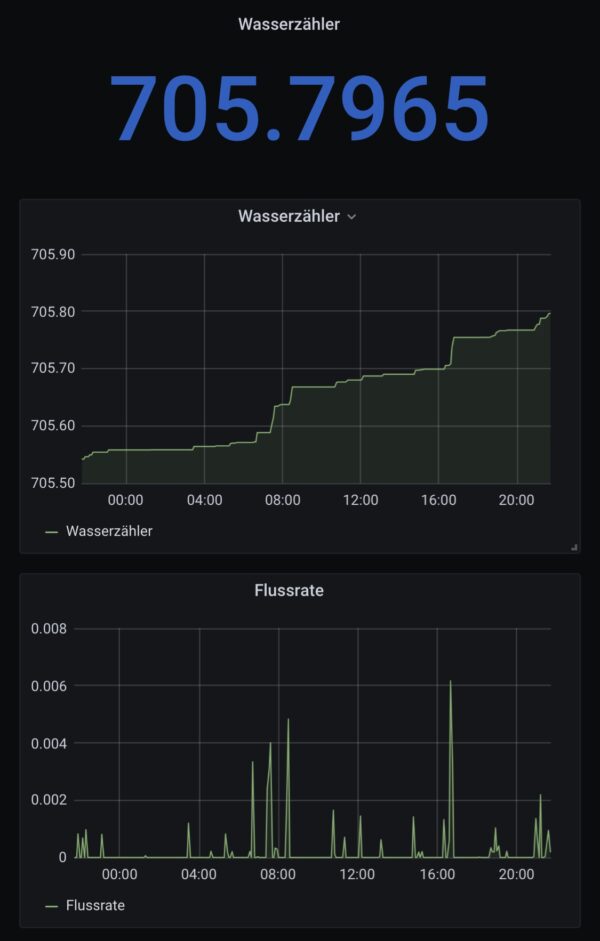

With the data sent through MQTT it’s easy to draw nice graphs:

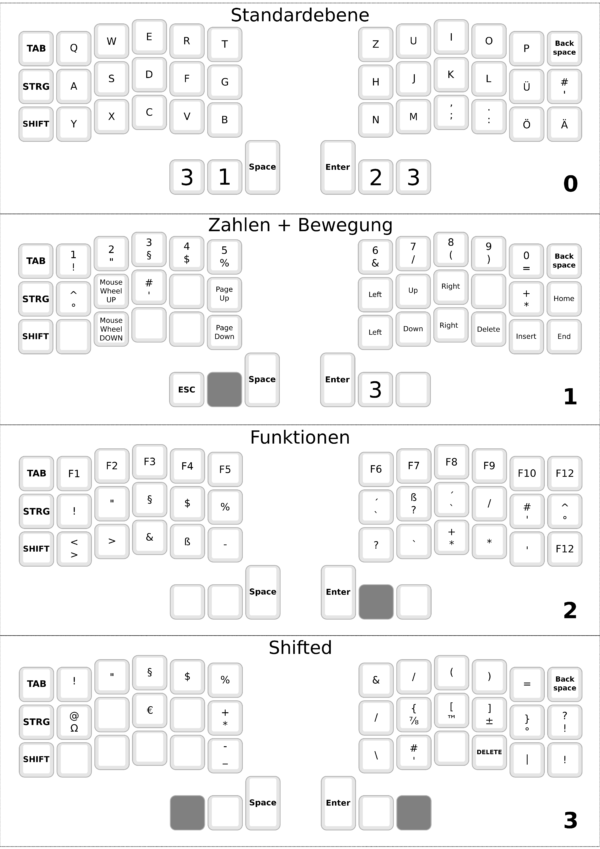

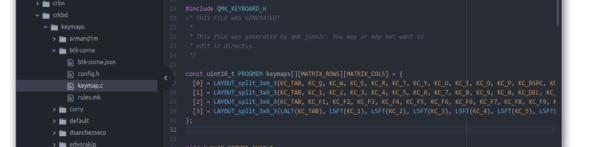
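The meter reports a cumulative reading, so a consumption graph needs the difference between consecutive readings. Here is a minimal sketch of that step; the function name and the sample values are made up for illustration, and the plausibility check (drop any reading lower than the last accepted one) is a deliberately simple assumption that only catches misreads which are too low.

```python
def consumption_per_interval(readings):
    """Turn cumulative meter readings into usage per interval.

    readings: list of (timestamp, cumulative_value), sorted by timestamp.
    Returns a list of (timestamp, usage_since_last_accepted_reading).
    """
    if not readings:
        return []
    usage = []
    _, last_value = readings[0]
    for timestamp, value in readings[1:]:
        if value < last_value:
            # A meter does not run backwards: treat this as a misread and skip it.
            continue
        usage.append((timestamp, round(value - last_value, 3)))
        last_value = value
    return usage


# Made-up sample: hour of day and cumulative cubic meters.
sample = [(0, 100.0), (1, 100.25), (2, 99.0), (3, 100.5)]
print(consumption_per_interval(sample))
```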

my 4 layer corne split keyboard layout (germany)

I’ve been using my corne split keyboard for about 3 weeks now and during that time I’ve made a couple of changes to the layout.

Right now I am quite happy with it for the kind of content I am typing these days, but I guess over time I am still going to optimize further.

Nevertheless I want to document my layout here, in a picture and with the json file that can be used with the QMK configurator.

building a corne split keyboard

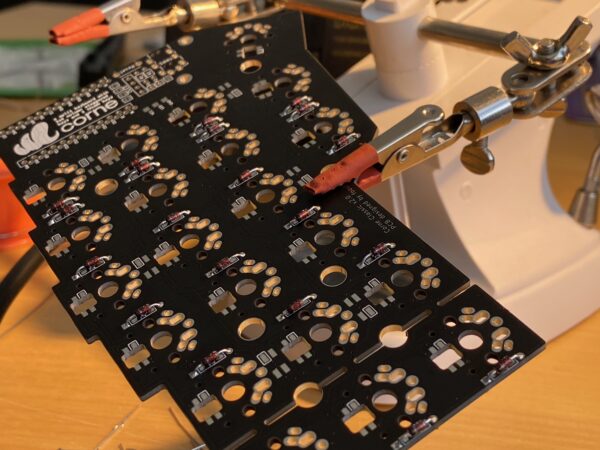

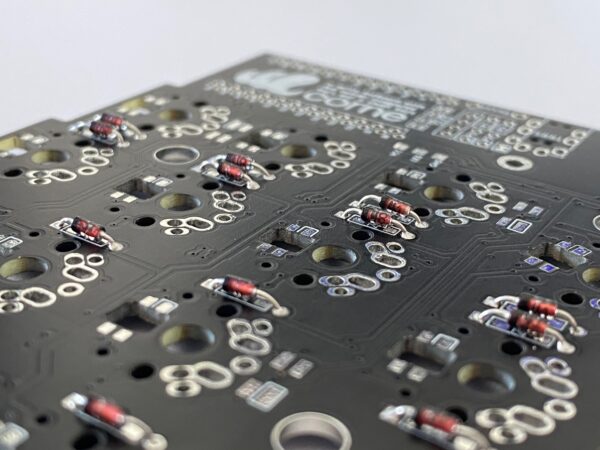

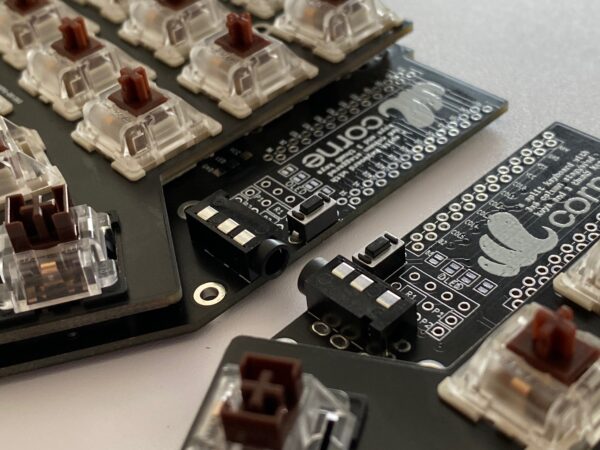

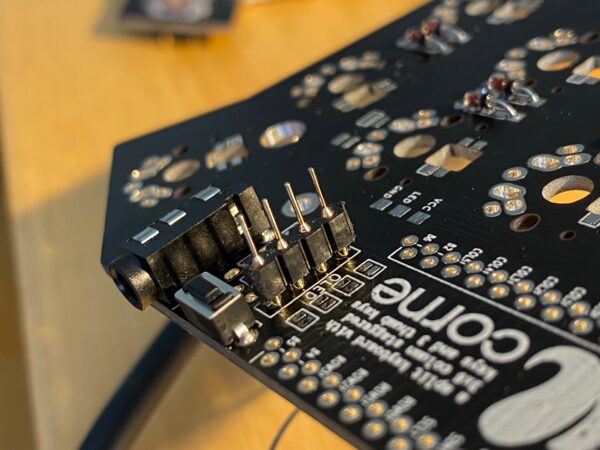

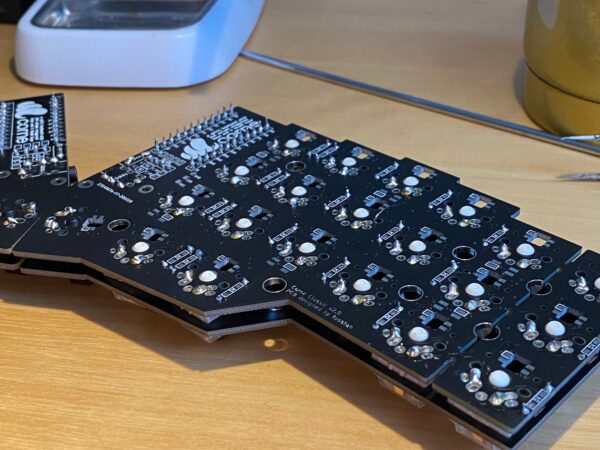

It’s been a while since during a Hack-the-Planet episode I was gifted two PCBs of a corne keyboard by PH_0x17 of Nerdbude and ClickClackHack fame.

Since a picture says more than a thousand words, I give you the result first:

This keyboard design is made from the ground up as open source and naturally is fully available as a Git repository containing everything you need to start: PCB schematics, drawing, documentation and firmware source code.

It took me a couple of months to get all the required parts ordered and delivered. Many small envelopes with parts that seemingly are only produced by a handful of manufacturers. But anyways: After everything had arrived and was checked for completeness my wife took the hardware parts into her hands and started soldering and assembling the keyboard.

And so this project naturally is split up between my wife and me in the most natural (to us) way: My wife did all the hardware parts – whilst I did the software and interfacing portion. (Admittedly, all there was to figure out was how to get the firmware compiled and altered to my specific needs.)

Hardware

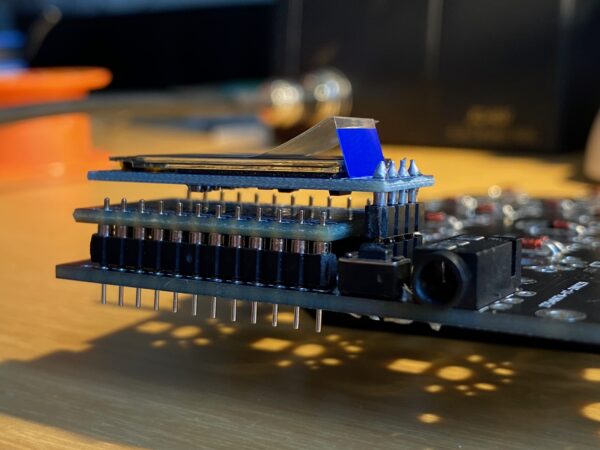

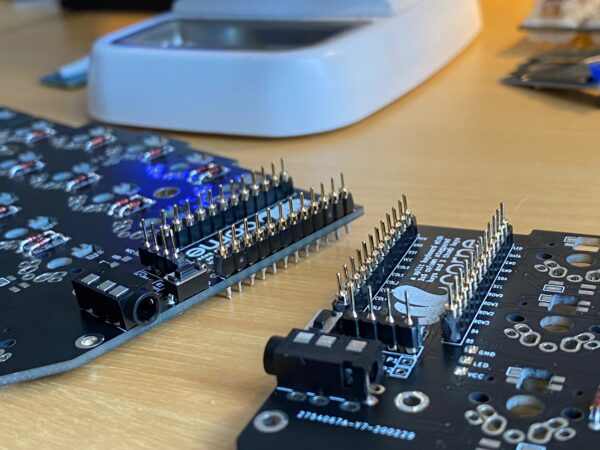

So make the jump over to the blog of my wife and enjoy the hardware portion over there. Come back for the software portion. I will only leave some pictures of the process here:

Software

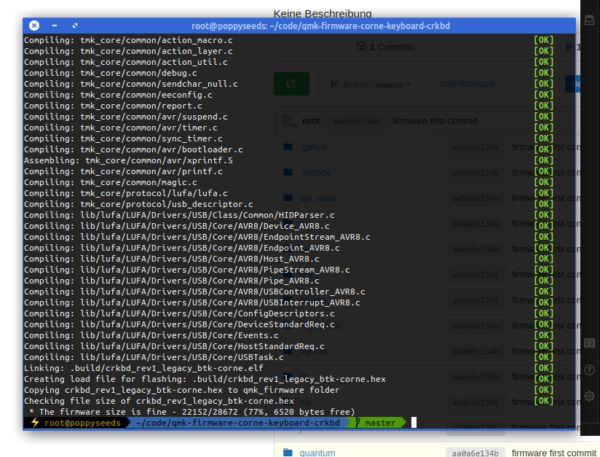

After putting the hardware together it was time to get the firmware sorted as well. This keyboard design is based upon the open source QMK (Quantum Mechanical Keyboard) firmware.

Conveniently QMK comes with its own build tools – so you will be up and running in no time. Since I had purchased Arduino ProMicro controllers I was good with the most basic setup you can imagine. As the base requirements for the toolchain were minimal I went with the machine that I had in front of me – a Raspberry Pi 4 with the standard Raspberry Pi OS.

These were the steps to get going:

- get Python 3 and the qmk tool installed – I’ve chosen not to use the tool setup procedure but instead go with a separate clone of the QMK firmware repository.

python3 -m pip install --user qmk

- clone the QMK firmware repository and get the QMK tool running (in the /bin folder of the firmware repository – it’s actually just a python script)

git clone https://github.com/qmk/qmk_firmware.git

cd qmk_firmware

git submodule sync --recursive

git submodule update --init --recursive --progress

make crkbd:default

- create your own keymap to work with. You gotta use the crkbd firmware options as a default for this keyboard. The command below will generate a subfolder with the name of your keymap in the keyboards/crkbd/keymaps folder with the default settings of the crkbd keyboard firmware.

qmk new-keymap -kb crkbd

- build your first firmware by running the command below (note: btk-corne is the name of my keymap)

qmk compile --clean -kb crkbd/rev1/legacy -km btk-corne

- now you can flash the firmware to both ProMicro controllers. The most straight forward way for me was using avrdude on the commandline. In my case the device is added as /dev/ttyACM0 and the compiled firmware named crkbd_rev1_legacy_btk-corne.hex.

When you got all this information you need to plug in the ProMicro and trigger a reset by bridging Ground and the Reset Pin. If you added, like we did, a button for reset you can use this. After hitting reset the ProMicro bootloader will enter the state where it’s possible to be flashed. Reset it and THEN run the avrdude commandline.

The full commandline is:

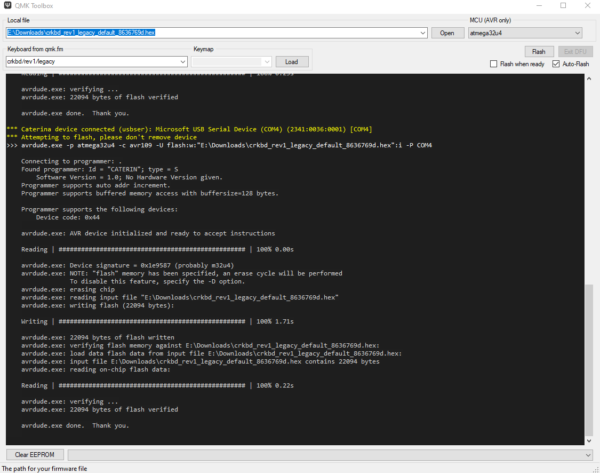

avrdude -p atmega32u4 -P /dev/ttyACM0 -c avr109 -e -U flash:w:crkbd_rev1_legacy_btk-corne.hex- (alternatively) you can also use QMK Toolbox to flash the firmware. Also works.
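Because the timing between hitting reset and starting avrdude matters, the wait can be scripted. The following is only a rough sketch in Python, not something I used for the build: the helper names (`build_avrdude_cmd`, `wait_for_port`, `flash`) are made up for illustration, and it assumes that the device node only shows up while the bootloader is active, which may not hold on every setup.

```python
import os
import subprocess
import time


def build_avrdude_cmd(port, hex_file):
    """Return the avrdude argument list from this post for a given port and hex file."""
    return [
        "avrdude",
        "-p", "atmega32u4",
        "-P", port,
        "-c", "avr109",
        "-e",
        "-U", f"flash:w:{hex_file}",
    ]


def wait_for_port(port, timeout=10.0, interval=0.1):
    """Poll until the device node exists or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if os.path.exists(port):
            return True
        time.sleep(interval)
    return False


def flash(port, hex_file):
    """Wait for the bootloader port (assumption: it appears after reset), then run avrdude."""
    print("Hit reset on the ProMicro now...")
    if not wait_for_port(port):
        raise RuntimeError(f"{port} did not show up in time")
    subprocess.run(build_avrdude_cmd(port, hex_file), check=True)
```

Calling `flash("/dev/ttyACM0", "crkbd_rev1_legacy_btk-corne.hex")` once per keyboard half would then mirror the manual procedure above.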

So now you know how to get the firmware compiled and running (if not, look here further). But most probably you are not happy with some aspects of your keymap or firmware.

By now you might ask yourself: Hey, I’ve got two ProMicros on one keyboard. Both are flashed with the same firmware. Into which of the two do I plug in the USB cable that then is plugged into the computer?

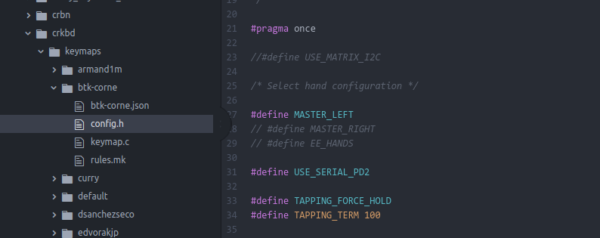

The answer is: by default QMK assumes that you are plugging into the left half of the keyboard making the left half the master. If you prefer to use the right half you can change this behaviour in the config.h file in the firmware:

You have to plug in both of them anyway at times when you want to flash a new firmware to them as you adjust and make changes to your keymap.

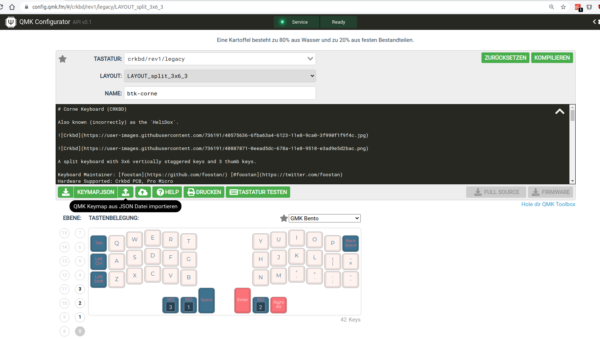

Thankfully QMK comes with loads of options and even a very useful configurator tool. I used this tool to adjust the keymap to my requirements. The process there is straightforward again. Open up the configurator and select the correct keyboard type. In my case that is crkbd/legacy. The basic difference between legacy and common is a different communication protocol between the two halves. This really only is important when features are used that require some sort of sync between the two halves – like some RGB LED effects. Since I did not add any LEDs to the build I go with legacy for now. Maybe I need some features later that require me to go with common.

The configurator allows you to set up the whole keymap and upload/download it as a .json file.

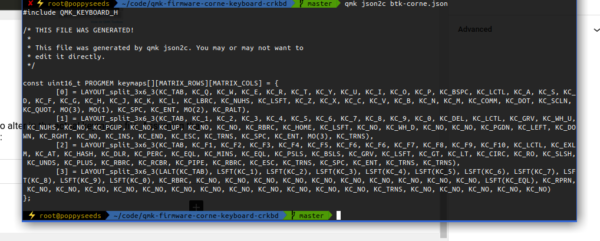

That .json file can easily be converted into the C code that you need to alter in the actual keymap.c file. Assuming that the .json file you got is named btk-corne.json the full commandline is:

qmk json2c btk-corne.json

Then simply take this output and replace the stuff in the keymap.c with it:

Now you compile and flash again. And if all went right you’ve got the new keymap and firmware on your keyboard and it’ll work just like that :)
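Before converting, it can be handy to sanity-check the exported .json file. Here is a minimal Python sketch that assumes the configurator export carries a `keyboard` field and a `layers` list of keycode lists; the example data is made up and much smaller than a real corne keymap, and `summarize_keymap` is a hypothetical helper, not part of the qmk tool.

```python
import json


def summarize_keymap(json_text):
    """Return (keyboard, number of layers, keys per layer) for a configurator export."""
    data = json.loads(json_text)
    layers = data.get("layers", [])
    return data.get("keyboard"), len(layers), [len(layer) for layer in layers]


# Tiny made-up export: two layers with three keys each.
example = json.dumps({
    "keyboard": "crkbd/rev1/legacy",
    "keymap": "btk-corne",
    "layers": [["KC_Q", "KC_W", "KC_E"], ["KC_1", "KC_2", "KC_3"]],
})

print(summarize_keymap(example))
```

If the per-layer key counts differ from each other, something probably went wrong during export or manual editing.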

a network sniffer – like wireshark – for the terminal

Termshark is a terminal based UI that you can use to debug network traffic when you do not have a GUI available to you.

So, we’re building something

For some weeks now I have been working on the design of something that is going to be built within the next couple of weeks out of wood and metal (and electronics).

It’s hopefully going to be as nice as I dream it up… What could it be?

I did this design based upon some pixel-material and pictures I’ve gathered around the internets – and took a lot of inspiration from them.

Although I had to create everything in vectors from those small pixel templates… But now everything above is going to be printed on vinyl in glorious vectors – no pixeljunk.

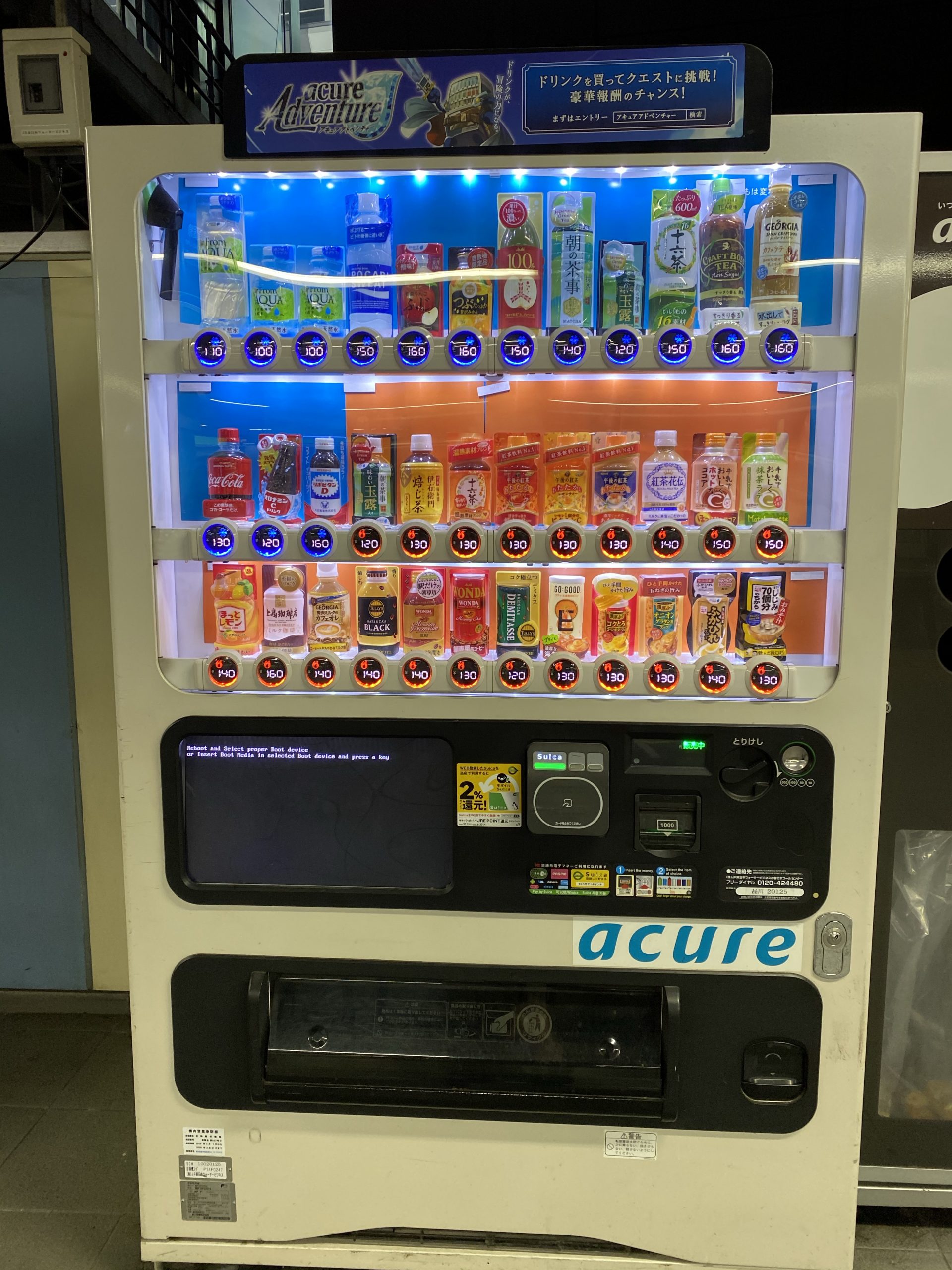

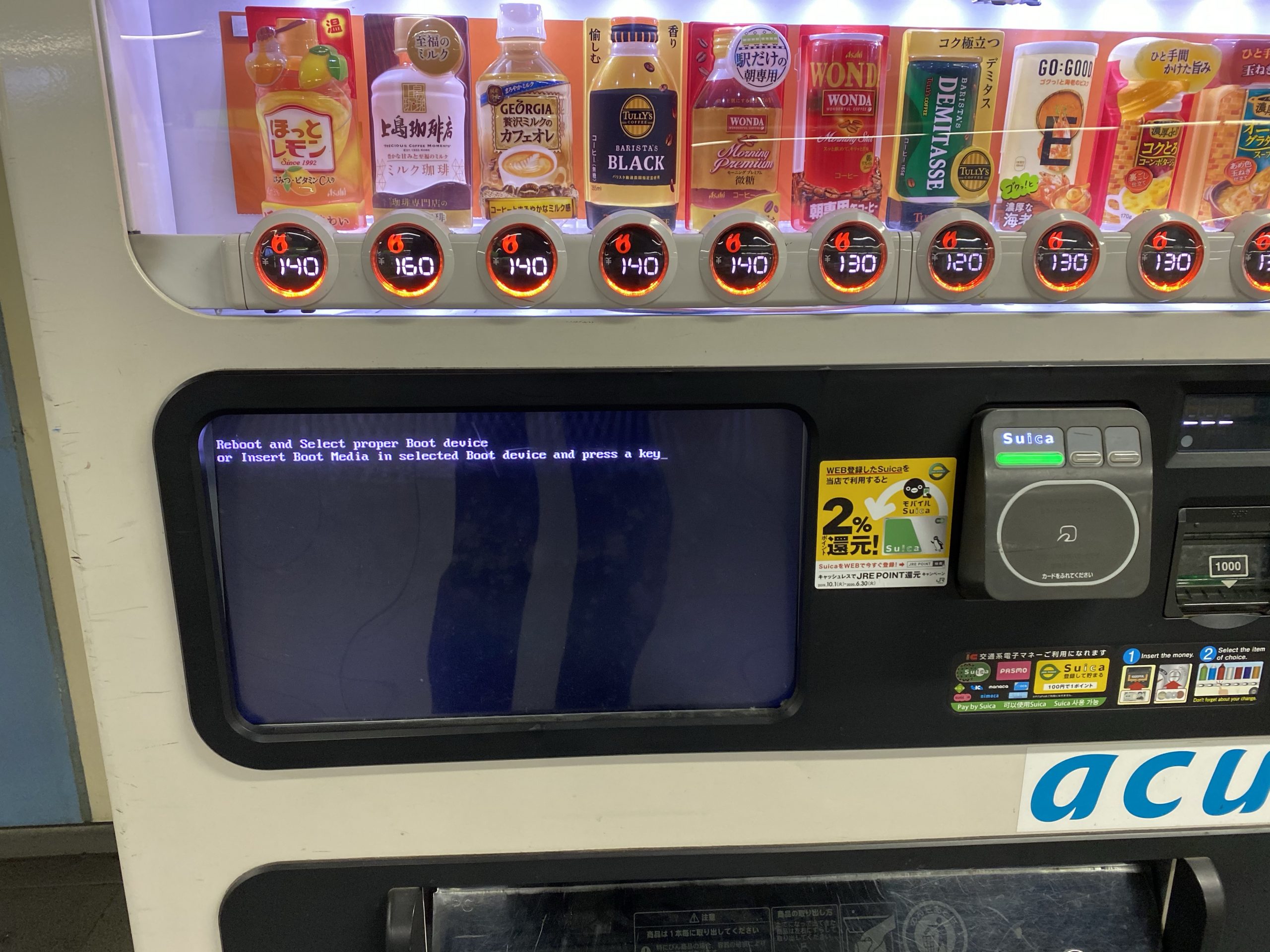

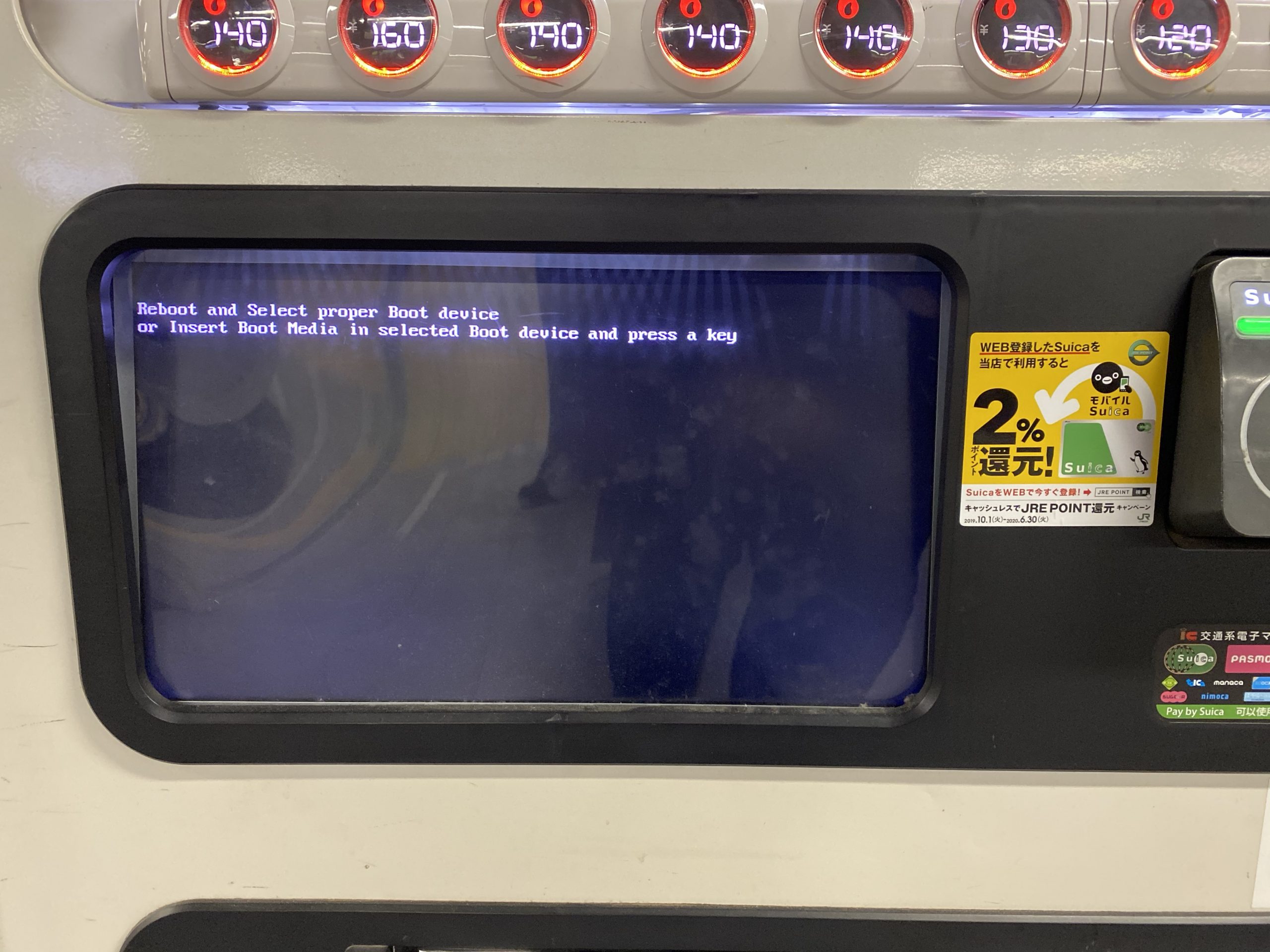

Please reboot the vending machine

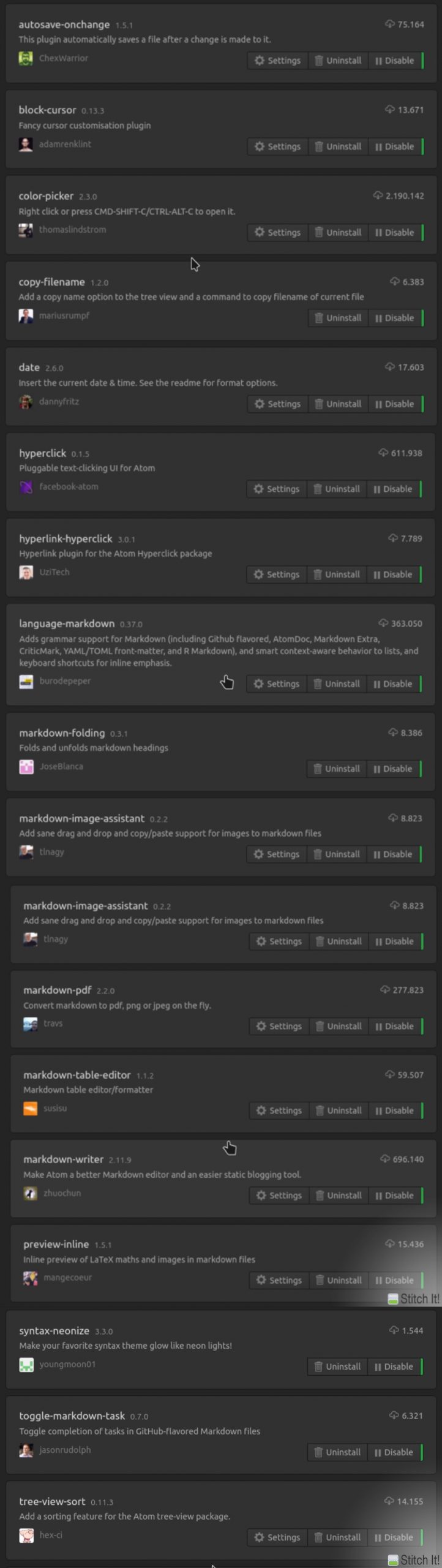

all ATOM editor plugins I am using

Odroid Go Advance

You might want to get one of these. It can be ordered as of today, with deliveries starting February 6th.

It runs emulators up to Playstation 1. Most importantly it will run SNES and NeoGeo flawlessly (so they say).

I will report back when mine has arrived.

DOS64

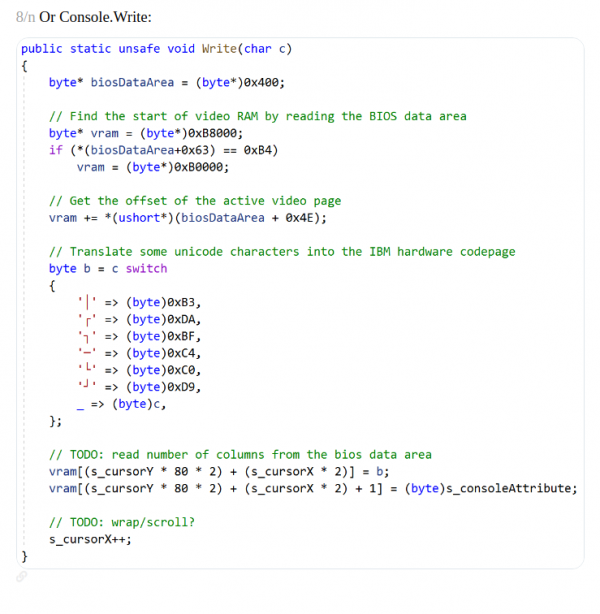

So this is interesting: Normally, if you try to run a Windows program (executable) anywhere else, it shows a message like “cannot be run here” and terminates.

Printing this message is actually done by a little program whose only task is to print out this very message. So it can be replaced.

Michal Strehovský did exactly this, very impressively. He documented what he did to get the game “snake”, written in C#, running on DOS instead of the “does not run here” stub, in a single executable file that runs both on standard 90s MS-DOS and on Windows with the .NET Framework installed.

He used a quite elaborate toolchain – namely DOS64-stub.

You can read all of this in the full thread. I recommend a deeper dive, as it’s a great start to better understand the inner workings of your computer…

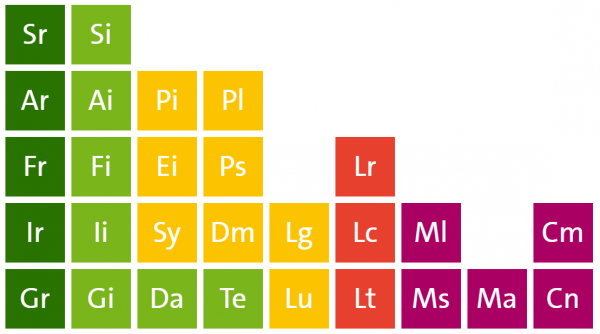

Periodic Table of AI

Everyone knows the “periodic table of the elements” from chemistry class. The periodic table is an intuitive and quick “Lego construction kit” that helps us to intellectually grasp complicated relationships between building blocks (atoms) and molecules (natural substances, stones or metals).

The American computer scientist Kristian Hammond has attempted to design a lingua franca for artificial intelligence. Borrowing from chemistry, he calls it the “Periodic Table of Artificial Intelligence”.

The Periodic Table of Artificial Intelligence helps to map the term AI onto business processes and to build an understanding of its elements, similar to the periodic table of the chemical elements. The approach helps with understanding and with assessing market maturity, effort, required machine training, and the knowledge and experience of employees.

when AI dreams…

Video incorporating image processing via python and the BigGAN adversarial artificial neural network to breed new images. There are papers about “high fidelity natural image synthesis”.

Anthony Baldino – Like Watching Ghosts from his recently released album Twelve Twenty Two

Anthony Baldino

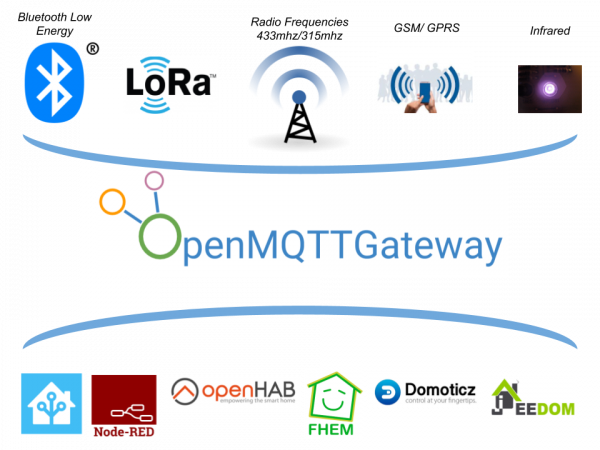

multi-Protocol to MQTT tool

When you are dealing with IoT protocols, especially at hobby-level, you probably came across the MQTT protocol and the challenge to have all those different devices that are supposed to be connected actually get connected – preferably using the MQTT protocol.

Recently this little project came to my attention:

OpenMQTTGateway project goal is to concentrate in one gateway different technologies, decreasing by the way the number of proprietary gateways needed, and hiding the different technologies singularity behind a simple & wide spread communication protocol: MQTT.

OpenMQTTGateway

OpenMQTTGateway support very mature technologies like basic 433mhz/315mhz protocols & infrared (IR) so as to make your old dumb devices “smart” and avoid you to throw then away. These devices have also the advantages of having a lower cost compared to Zwave or more sophisticated protocols. OMG support also up to date technologies like Bluetooth Low Energy (BLE) or LORA.

Of course, there is a compatible device list…
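Once everything speaks MQTT, a consumer usually picks out the interesting devices with topic filters. As a small illustration of how the `+` and `#` wildcards behave, here is a simplified Python matcher. It is a sketch only: the topic names are hypothetical and not taken from the OpenMQTTGateway documentation, it assumes a well-formed filter, and it ignores the special handling of topics starting with `$`.

```python
def topic_matches(topic_filter, topic):
    """Return True if an MQTT topic matches a filter using + and # wildcards."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: matches the parent level and everything below it.
            return True
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    # Without a trailing '#', both must have the same number of levels.
    return len(filter_levels) == len(topic_levels)


# Hypothetical topics, for illustration only.
print(topic_matches("home/+/433toMQTT", "home/gateway/433toMQTT"))
print(topic_matches("home/#", "home/gateway/BTtoMQTT/aabbcc"))
```

A real client library does this matching on the broker side; the sketch is only meant to show which subscriptions would catch which gateway messages.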

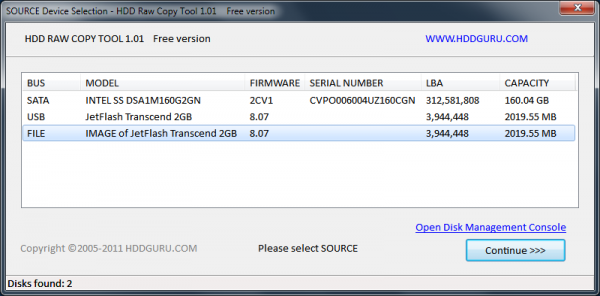

bootable disks and raw disk copies

Every once in a while I need to take an image or duplicate an SSD/SDCard/Harddisk. And it’s gotten quite complicated to get the proper formatting and alignment when you want to achieve certain things.

For example, creating an EFI-compatible bootable USB stick is not as straightforward as one would think.

In those cases, a tool called rufus helps:

For all other cases I am using the HDDGuru tool on Windows.

HDD Raw Copy Tool is a utility for low-level, sector-by-sector hard disk duplication and image creation.

- Supported interfaces: S-ATA (SATA), IDE (E-IDE), SCSI, SAS, USB, FIREWIRE.

- Big drives (LBA-48) are supported.

- Supported HDD/SSD Manufacturers: Intel, OCZ, Samsung, Kingston, Maxtor, Hitachi, Seagate, Samsung, Toshiba, Fujitsu, IBM, Quantum, Western Digital, and almost any other not listed here.

- The program also supports low-level duplication of FLASH cards (SD/MMC, MemoryStick, CompactFlash, SmartMedia, XD) using a card-reader.

HDD Raw Copy tool makes an exact duplicate of a SATA, IDE, SAS, SCSI or SSD hard disk drive. Will also work with any USB and FIREWIRE external drive enclosures as well as SD, MMC, MemoryStick and CompactFlash media.
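To make the idea of a block-by-block copy a bit more tangible, here is a minimal Python sketch. It is not how HDD Raw Copy Tool works internally; `raw_copy` is a made-up helper that copies any readable file (on Linux that could also be a device node, given sufficient permissions) in fixed-size chunks and returns a checksum for verification. It does no bad-sector handling at all.

```python
import hashlib


def raw_copy(src_path, dst_path, chunk_size=1024 * 1024):
    """Copy src to dst chunk by chunk; return (bytes copied, SHA-256 hex digest)."""
    digest = hashlib.sha256()
    copied = 0
    with open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        while True:
            chunk = src.read(chunk_size)
            if not chunk:
                break  # end of source reached
            dst.write(chunk)
            digest.update(chunk)
            copied += len(chunk)
    return copied, digest.hexdigest()
```

Comparing the returned digest with a checksum of the written image is a cheap way to confirm that the duplicate really is exact.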

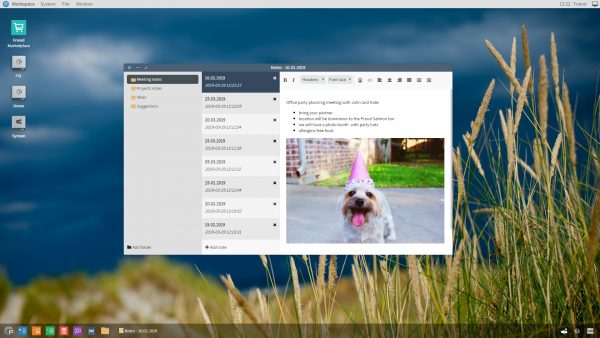

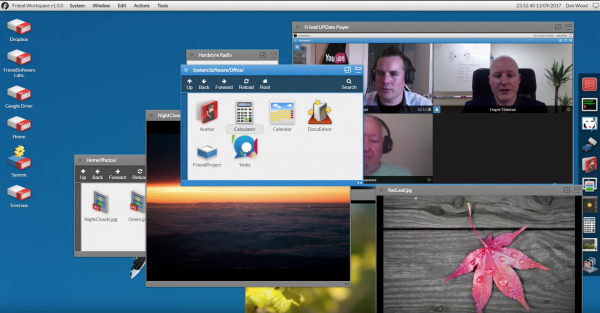

FriendOS – OS concept in your browser

brace for marketing:

Friend OS, a modular, fully-customizable operating system accessible via any device that can support a modern web browser, or Friend’s Android and iOS apps. Friend OS leverages Internet and blockchain technologies to offer all the features of a commercial operating system, but one that gives you access to a secure and private cloud-based virtual desktop anytime, anywhere, no matter what hardware or software you use.

So what does this all mean? It’s apparently a web application scaled up to behave and be used like an operating system. It encapsulates an application and directory/filesystem like concept and essentially lives in one of your browser windows.

As long as you’ve got a supported browser, all your apps and data will be accessible through this. They claim.

It’s interesting as there is a lot of open source in there and even some docker effort made to get it running. Seems abandoned / not updated at the time of writing, but it’s a nice concept to begin with anyways.

generative art: flowers

It started with this tweet about someone called Ayliean apparently drawing a plant based upon set rules and rolling a die.

And because generative art in itself is fascinating I am frequently pulled into such things. Like this dungeon generator or these city maps or generated audio or face generators or buildings and patterns…

On the topic of flowers there’s another actual implementation of the above mentioned concept available:

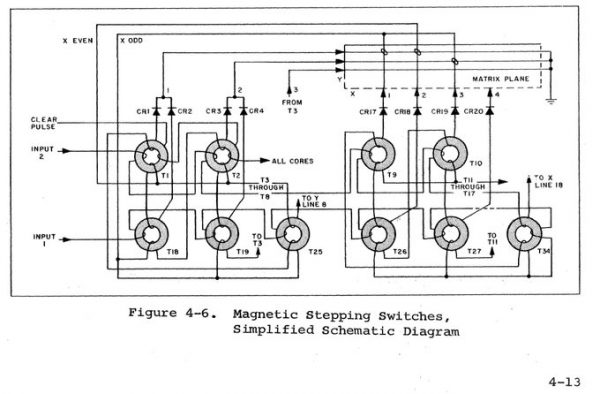

TubeTime and BitSavers

I was pointing to BitSavers before. And I will do it again as it’s a never ending source of joy.

Now some old schematics have been spilled into my feeds that show how logic gates were implemented with transformers only.

And not only BitSavers is on this path of sharing knowledge, TubeTime is also such a nice account to follow and read.

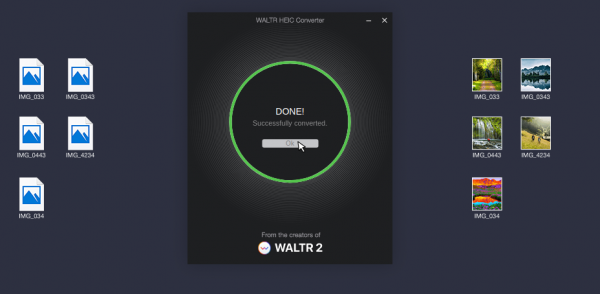

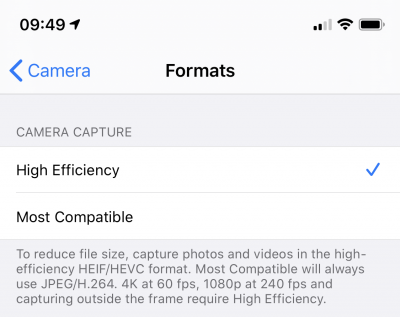

batch convert HEIF/HEIC pictures

When you own a recent iOS device (iOS 11 and up) you’ve got the choice between “High Efficiency” or “Most Compatible” as the format in which the camera app stores all pictures.

“Most Compatible” is the JPEG format that is widely used around the internet and by other cameras out there, while “High Efficiency” comes with the introduction of a new file format and new compression/reduction algorithms.

A pointer to more information about the format:

High Efficiency Image File Format (HEIF), also known as High Efficiency Image Coding (HEIC), is a file format for individual images and image sequences. It was developed by the Moving Picture Experts Group (MPEG) and is defined by MPEG-H Part 12 (ISO/IEC 23008-12). The MPEG group claims that twice as much information can be stored in a HEIF image as in a JPEG image of the same size, resulting in a better quality image. HEIF also supports animation, and is capable of storing more information than an animated GIF at a small fraction of the size.

Wikipedia: HEIF

As Apple is aware, this new format is not compatible with any existing tool chain to work with pictures from cameras. So you would either need new, upgraded tools (the Apple-way) or you would need to convert your images to the “older” – not-so-efficient JPEG format.

To my surprise it’s not trivial to find a conversion tool. For Linux I’ve already written about such a tool here.

For macOS and Windows, look no further. Waltr2 is an app catering to your conversion needs with a drag-and-drop interface.

It’s advertised as being free and offline. And it works a treat for me.

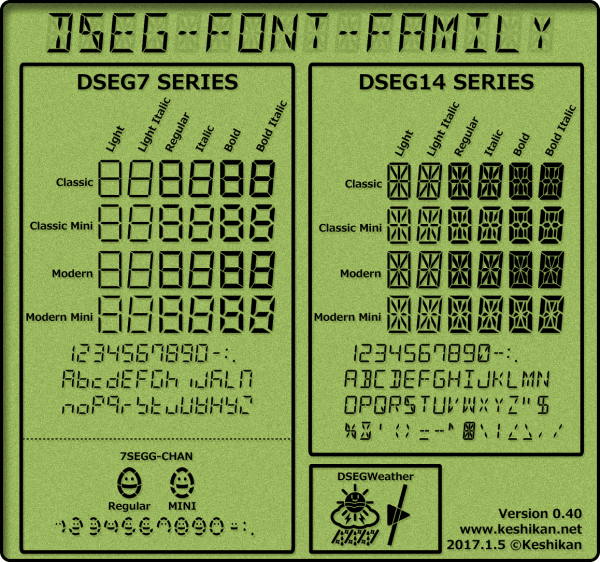

a proper 7-segment / 14-segment font

DSEG is a free font family, which imitate seven and fourteen segment display(7SEG,14SEG). DSEG have special features:

- DSEG includes the roman-alphabet and symbol glyphs.

- More than 50 types are available.

- True type font(*.ttf) and Web Open Type File Format (*.woff, *.woff2) are in a package.

- DSEG is licensed under the SIL Open Font License 1.1.

Get it here.

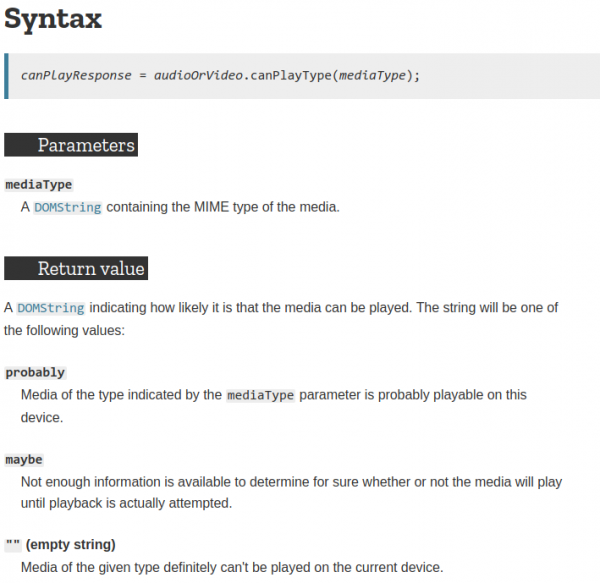

can it play? maybe? probably!?

Sometimes you come across things in documentation. You read them. And then you read them again.

And then you write a post about it. May I present HTMLMediaElement.canPlayType():

It almost feels like we’ve made a step forward into a more probabilistic approach of computing…

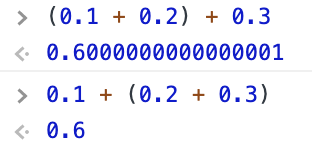

Reminder: addition of floating point numbers is NOT associative

Reminder: addition of floating point numbers is NOT associative… (0.1 + 0.2) + 0.3 ≠ 0.1 + (0.2 + 0.3) …and this is true in basically _any_ language that uses floating point numbers. Here it is in javascript in the browser console:

Mark Kriegsman on Twitter
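The same effect is easy to reproduce outside the browser. A minimal Python version of the example from the quote, with `math.isclose` as one common way to compare such results with a tolerance:

```python
import math

left = (0.1 + 0.2) + 0.3
right = 0.1 + (0.2 + 0.3)

print(left == right)              # False
print(left, right)                # 0.6000000000000001 0.6
print(math.isclose(left, right))  # True
```

The two results differ only in the last bit, which is why comparing floating point sums with a tolerance instead of `==` is usually the safer choice.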

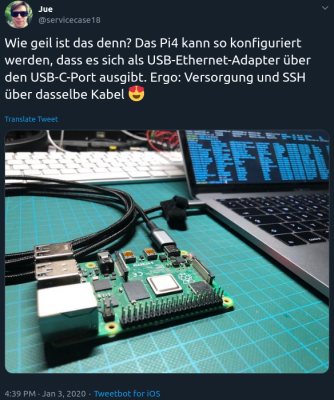

TIL: Pi Zero and Pi4 can be used as USB ethernet adapters

And if you want it too, there is the how-to available on the RaspberryPi forum.

dangerously curious bitcoins

Some things you find on GitHub are more interesting and frightening than others.

This one is both and some more. What is it you ask?

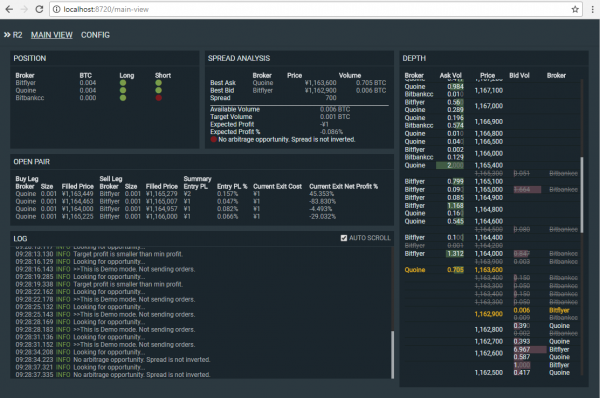

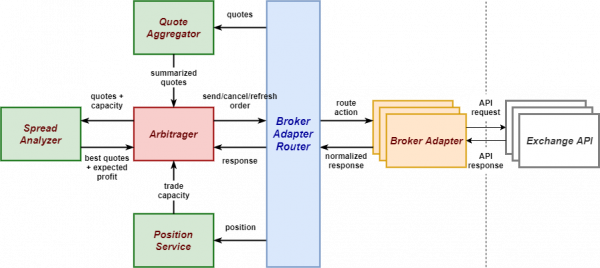

R2 Bitcoin Arbitrager is an automatic arbitrage trading application targeting Bitcoin exchanges.

So it’s buying and selling Bitcoins. And it’s doing this on different markets.

On the topic of arbitrage Wikipedia has something to say:

In economics and finance, arbitrage is the practice of taking advantage of a price difference between two or more markets: striking a combination of matching deals that capitalize upon the imbalance, the profit being the difference between the market prices at which the unit is traded.

https://en.wikipedia.org/wiki/Arbitrage

For example, an arbitrage opportunity is present when there is the opportunity to instantaneously buy something for a low price and sell it for a higher price.
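To put numbers on that definition, here is a tiny Python sketch. It is not taken from R2; the function name, the prices and the fee rate are made-up assumptions, and it ignores everything that makes this hard in reality (latency, order book depth, transfer times).

```python
def arbitrage_profit(buy_price, sell_price, volume, fee_rate=0.0):
    """Profit of buying `volume` on one market and selling it on another.

    fee_rate is a hypothetical proportional fee charged on both trades.
    """
    cost = buy_price * volume * (1 + fee_rate)
    proceeds = sell_price * volume * (1 - fee_rate)
    return proceeds - cost


# Made-up quotes: buy 0.5 BTC at 9000 on market A, sell at 9100 on market B.
print(arbitrage_profit(9000, 9100, 0.5))
print(arbitrage_profit(9000, 9100, 0.5, fee_rate=0.002))
```

Even a small fee eats a noticeable part of the spread, and with a narrow enough price difference the result turns negative.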

Now this already is the second version of the tool and already 2 years old. See it as some sort of interesting archeological specimen. Please refrain from actually doing something harmful with it.

I am writing this down here because apart from its obvious horrors this is a good starting point to understand how these computer-trading-systems do work in principle.

Given that an architectural drawing is also included it gives all sorts of starting points to thoughts.

Also. What could possibly go wrong if a tool to buy/sell on actual markets with actual bitcoins is confident enough to include the “maxTargetProfit” configuration option. Effectively setting the top-line of profit you’re going to make!!!111

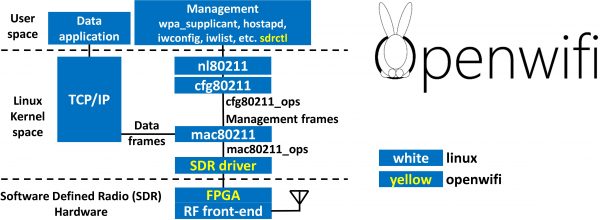

Linux mac80211 compatible full-stack Wi-Fi design based on SDR

In a tweet we were given an early Christmas present – open-sdr released an open source software Wi-Fi stack that utilizes software-defined-radio technology to implement actual working Wi-Fi.

Features:

- 802.11a/g; 802.11n MCS 0~7; 20MHz

- Mode tested: Ad-hoc; Station; AP

- DCF (CSMA/CA) low MAC layer in FPGA

- Configurable channel access priority parameters:

- duration of RTS/CTS, CTS-to-self

- SIFS/DIFS/xIFS/slot-time/CW/etc

- Time slicing based on MAC address

- Easy to change bandwidth and frequency:

- 2MHz for 802.11ah in sub-GHz

- 10MHz for 802.11p/vehicle in 5.9GHz

- On roadmap: 802.11ax

See this demonstration:

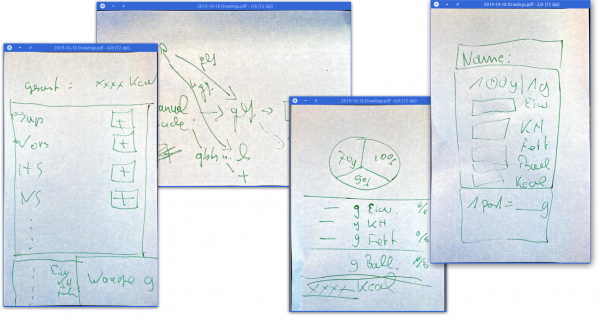

Tabemono – from a name to UX and UI…

As you might know by now I am re-implementing MyFitnessPal functionality into my own application to be deeper integrated with kitchen hardware and my own personal use-cases rather than to be an ad-infested subscription based 3rd party application.

So the development of this is ongoing, but I wanted to note down some progress and explanation.

Let’s start with explaining the name: Tabemono.

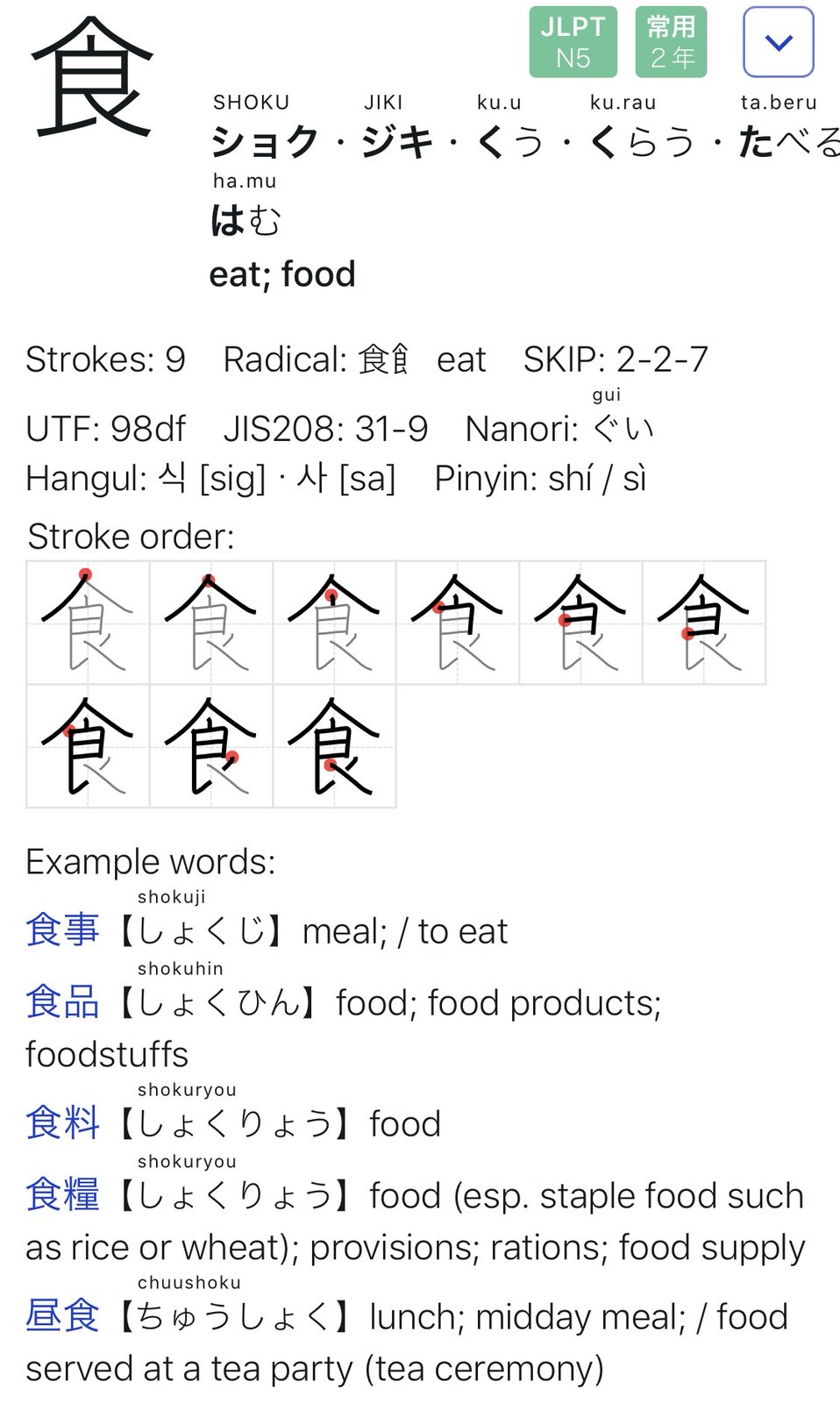

It does really mean something – and as some might have guessed – in Japanese:

Tabemono – 食べ物

Taking just the first Kanji:

Implementing the UI from the UX has proven to be as challenging as expected.

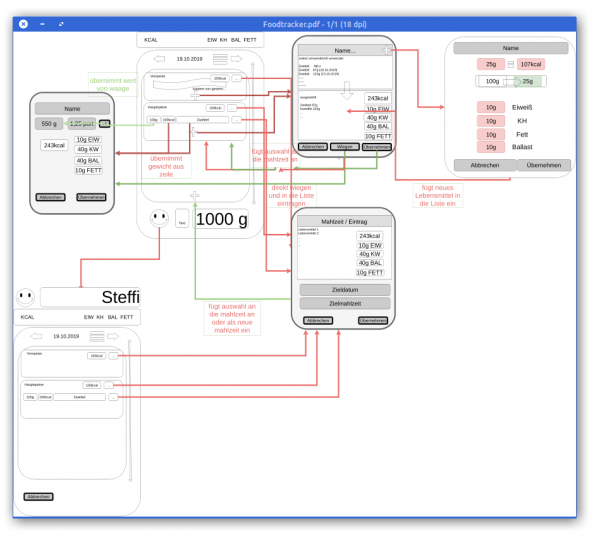

When we started to toss around the idea of re-implementing our food-tracking-needs we started with a simple scribble on post-it notes.

This quickly led to a digital version of this to better reflect what we wanted to happen during the different steps of use…

It wasn’t nice but it did act as a reminder of what we wanted to achieve.

The first thing we learned here was that this will all evolve while we are working on it.

So during a long international flight I’ve spent the better part of 11 hours on getting the above drawing into something resembling an iOS user interface mock-up. With the help of the (free for 1 private project) Adobe XD I clicked along and after 10 hours, this was the video I did of the click-dummy:

Since then I’ve spent maybe 1 more day and started the SwiftUI based implementation of the actual iOS application.

And this brought the first revelation: There are so many ideas that might make sense on paper and in a click-dummy. But only because those are just tools and not reality. It’s absolutely crucial to really DO the things rather than imagine them.

And so the second revelation came: If I had one piece of advice for any product manager or developer out there: Go on and pick a project and try to go full-circle.

You ain’t full stack if you’re missing out on the understanding of the work and skill that your team members have and need.