In times when mobile phone cameras produce pictures of 2 MBytes each and decent DSLR cameras produce pictures in the range of more than 20 Mbytes each – not speaking of the various sensors around the house the question of how all of this is going to be stored is an interesting one.

Prices for mass storage is dropping for years and sized of hard disks are getting bigger and bigger. 3 Tbyte drives are fairly cheap now. Cheap enough to consider serious redundancy even for home use.

Having that home automation hobby and having very specific needs when it comes to home entertainment or even watching TV (we don’t watch live-tv…) we have a relatively huge demand for storage space. That way we are already storing over 10 Tbyte of data, fully encrypted, redundant and backed-up.

Our file server infrastructure grew with the needs over the years.

It started way back in 2003 when I set-up the first fileserver for my apartment back then. It was a fairly huge 19 inch case with 5 hard disks (100 Gbyte each). This machine was filled in 2005 and needed replacement.

We’re in IDE land back then. Because the system hardware died on me due to a power surge all the disks and a new mainboard were seated in a new case with room for a lot of disks.

One interesting detail might be that I consistently used Windows Server for that purpose.

The machine always wasn’t just a fileserver. It was smtp, imap, nntp and media server all the time. That lead to a growing demand of CPU and memory resources. It started with an 800 Mhz AMD Athlon (which died quickly) and for the next years to come I used a 2.8 Ghz Intel Pentium 4. Everything started with Windows Server 2003 – bought in the Microsoft Store when I was a Microsoft employee.

Diskspace demand kept growing and in 2009 a new case, new mainboard + memory and new disks where due.

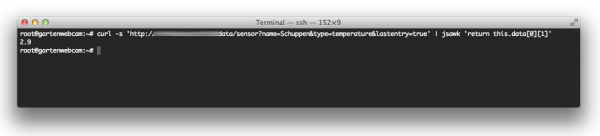

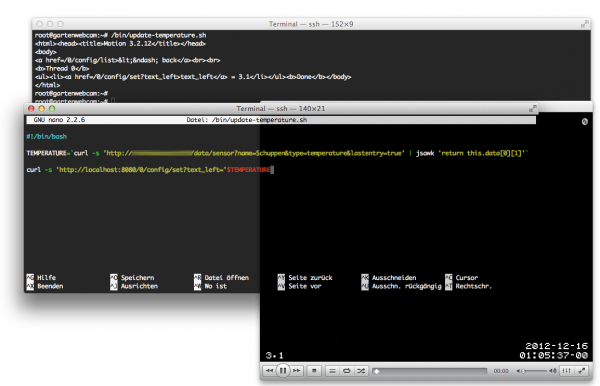

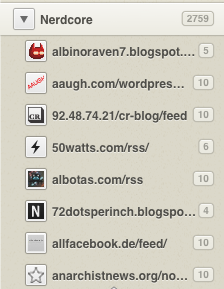

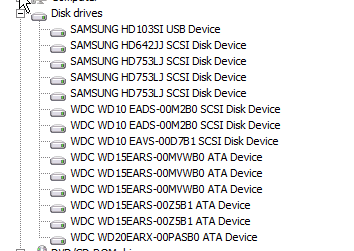

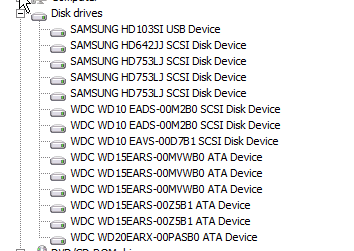

Since 2009 a Core4Quad Q9550 with 2.8 Ghz and 16 Gbyte of Memory is the heart of our fileserver. Since we’re frequently live-transcoding video streams to feed iPads and iPhones around the house that machine has plenty of grunt to feed the demand. We can have 2 iPhones and 2 iPads playing 720p content without getting stutters. Back in 2009 we also switched to a mixed IDE and SATA setup as you can see in the picture:

Plenty of room when the new case arrived – it was getting crowded just 2 years later in 2011. Every seat was taken – which means 13 disks are in that case and 1 attached through USB.

That adds up to more than 16 Tbyte of raw storage. In 2011 we also upgraded to Windows Server 2008. We never lost a bit with that operating system, not under the heaviest load and even through serious hardware malfunctions. A lot of disks of those 13 died throughout the years: Almost 1 every 2 months was replaced – most of them through extended waranties – of course we have a spare always ready to take the place. Only one time I had to rush to a store to get a replacement drive when two disks failed short after each other. That’s why there’s that 2 Tbyte drive in the 1.5 Tbyte compound…

So it’s getting full again. Since that case isn’t really holding more disks and replacing them is getting harder because of the tight fit the idea was born to now add a bigger case but to just add a NAS/SAN which holds between 6 to 8 disks at once, comes with it’s own redundancy management and exports one big iSCSI volume.

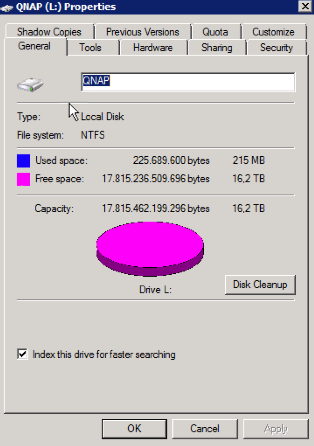

That said a network card was added to the fileserver and a QNAP TS-859 Pro+ 8-bay appliance was bought. This one is a shiny black device which uses less power then an aditional case with extra cpu and memory would have use and after calculating through a number of combinations it’s even the cheapest solution for an 8 drive set-up.

After some intensive testing it seems that the iSCSI approach is the most robust one. Since I am just done with testing the appliance the next step is to buy drives. So stay tuned!

Source 1: http://www.qnap.com/de/index.php?lang=de&sn=375&c=292&sc=528&t=532&n=3486