Cascading Style Sheets or CSS in short are a very powerful tool to control how content is being displayed.

CSS is designed to enable the separation of presentation and content, including layout, colors, and fonts. This separation can improve content accessibility, provide more flexibility and control in the specification of presentation characteristics, enable multiple web pages to share formatting by specifying the relevant CSS in a separate .css file, and reduce complexity and repetition in the structural content.

Wikipedia

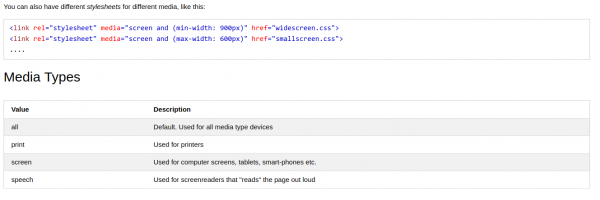

Separation of formatting and content also makes it feasible to present the same markup page in different styles for different rendering methods, such as on-screen, in print, by voice (via speech-based browser or screen reader), and on Braille-based tactile devices. CSS also has rules for alternate formatting if the content is accessed on a mobile device.

So with CSS you can differentiate between target audiences. It gives you control over the output being rendered for specific render targets.

I frequently come across content I want to read. And almost as frequently I do not have time for a longer read when I come across interesting content.

My workflow for this is: keeping some to-be-read backlog of PDF files I have printed from websites. These PDF files are automatically synced to various devices and I can read them at a later stage.

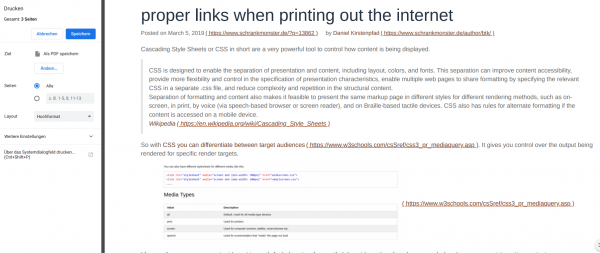

What often is frustrating to see: bad the print results of website layouts as these websites have not even thought of the remote option of being printed.

With this blog I want to support any workflow and first and foremost my own. Therefore printing this blog adds some print-audience specifics.

For example the links I am using in the articles are usually inline when you are using a browser. When you’re printing the article those links get converted and are being written out with the text. So you can have them in your print-outs without loosing information.

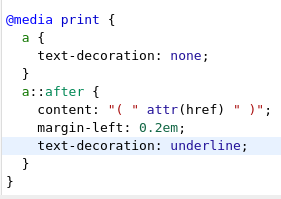

And the changes you need to apply to any webpage to instantly enable this are very simple as well! Just add this to your page stylesheet:

@media print {

a {

text-decoration: none;

}

a::after {

content: "( " attr(href) " )";

margin-left: 0.2em;

text-decoration: underline;

}

}