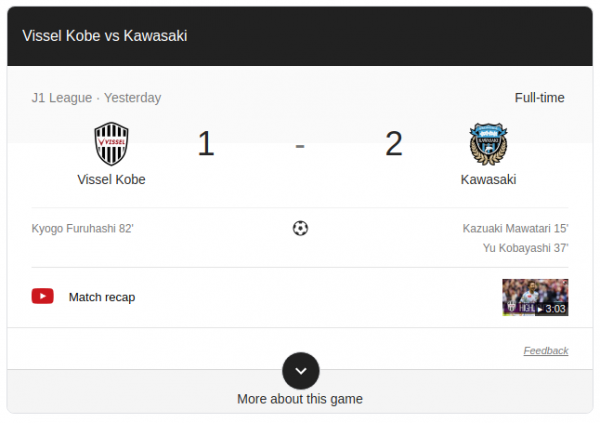

Kawasaki Frontale vs. Vissel Kobe

First: I am not at all interested or knowledgeable in football / soccer. But…

Several times a year I spend multiple weeks in the area of Musashikosugi (武蔵小杉), which is part of the greater Tokyo area in Japan.

Because of these stays (added up, I’ve probably spent about a year there) I’ve grown attached to this area and its community over time.

This includes everything the community shares in various places on the internet, so I can stay informed to a small degree.

For example: There is a fantastic blog (as there is for many other communities in Tokyo) that specifically shares community-related information about Musashikosugi.

To understand the context you need to know that I’ve worked for Rakuten, the number one eCommerce company in Japan. This surely kick-started my interest in Japan overall.

I know from my time at Rakuten that the company engaged in a couple of sports sponsorships. One of them was the J1 League football club “Vissel Kobe”.

Kawasaki, the city Musashikosugi is part of, also has a football team of its own, called “Kawasaki Frontale”.

Through said blog it came to my attention that there was a game between those two football teams and…

Kawasaki won the game!

So despite me not being particularly interested in sports, this news was quite exciting to see. It almost feels like a bit of local patriotism coming up. And with the direct connection to my past employment it gets even more exciting.

Go Kawasaki, go!

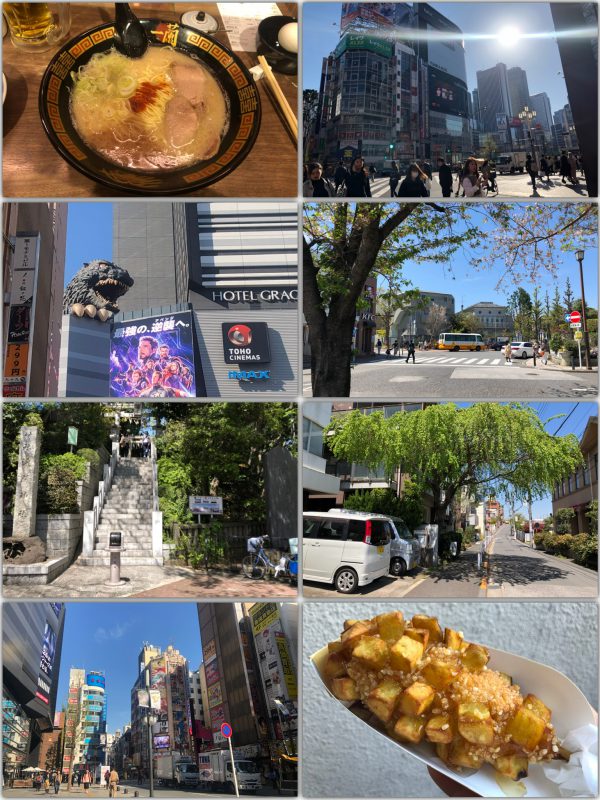

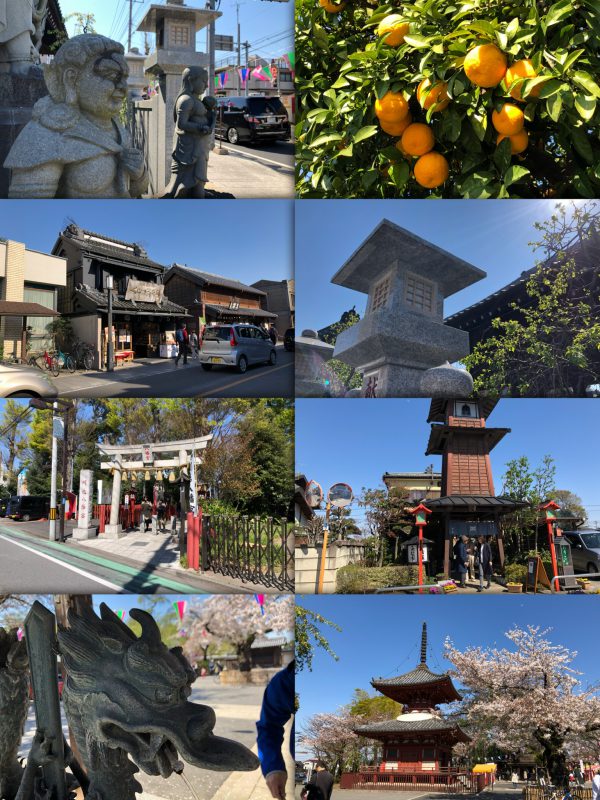

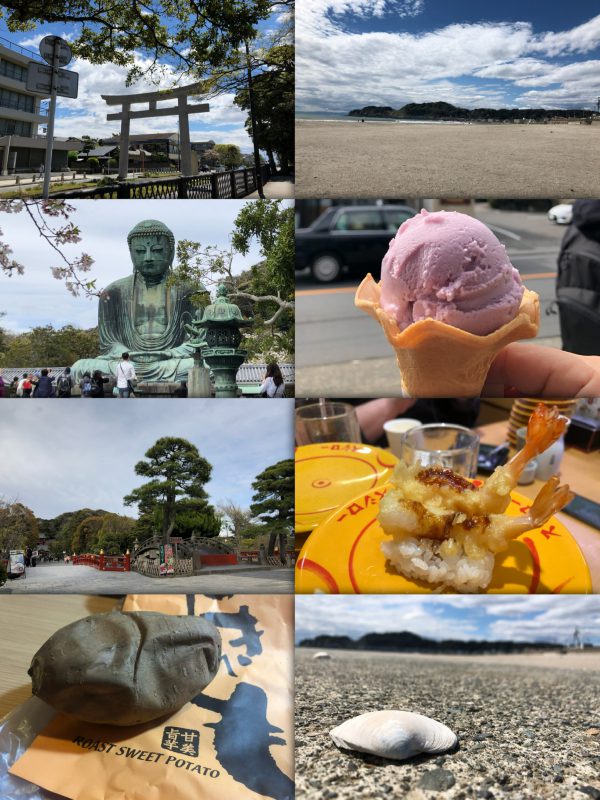

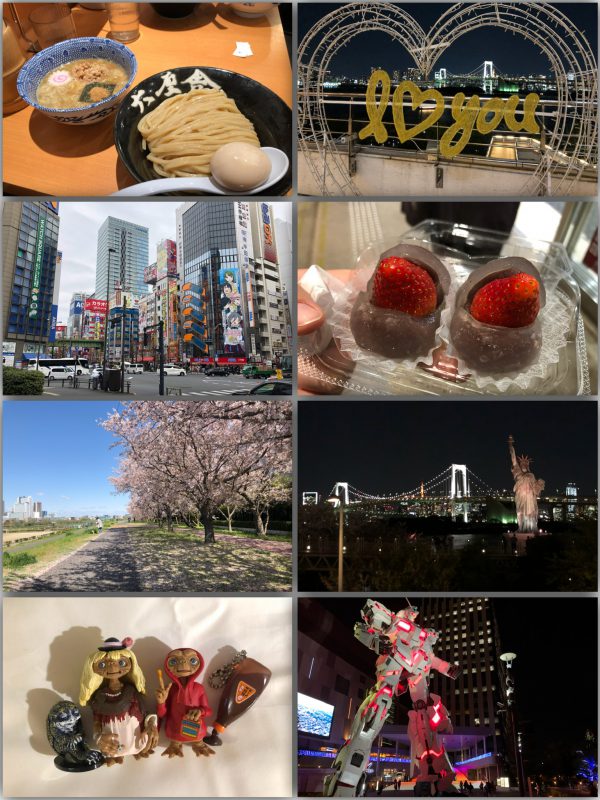

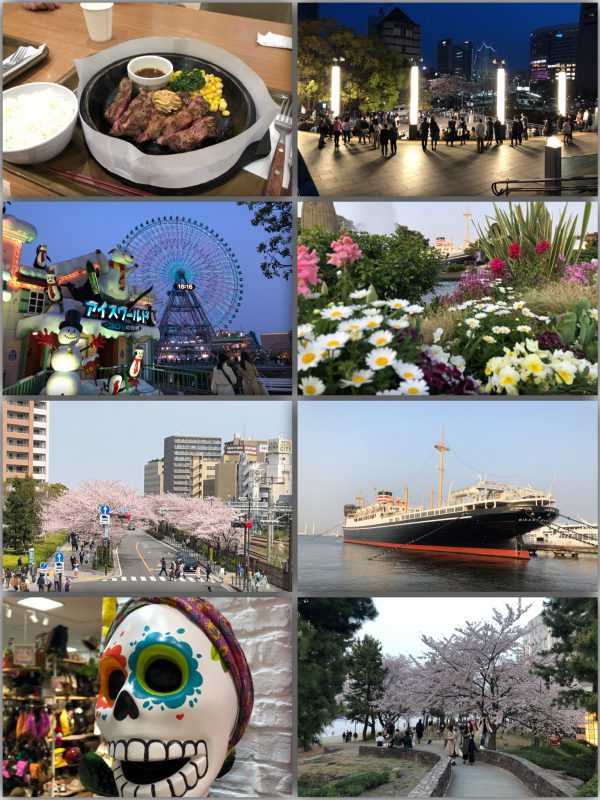

Japan family trip April 2019 (part 9)

“Kombinose”

Kombinose [kɔmbi̯noːzə], die (German, feminine noun)

Compound noun formed from the words “Kombination” (combination) and “Symbiose” (symbiosis).

Intensified interaction of several factors in combination that reinforce one another in many ways.

I love the small train stations in 東京…

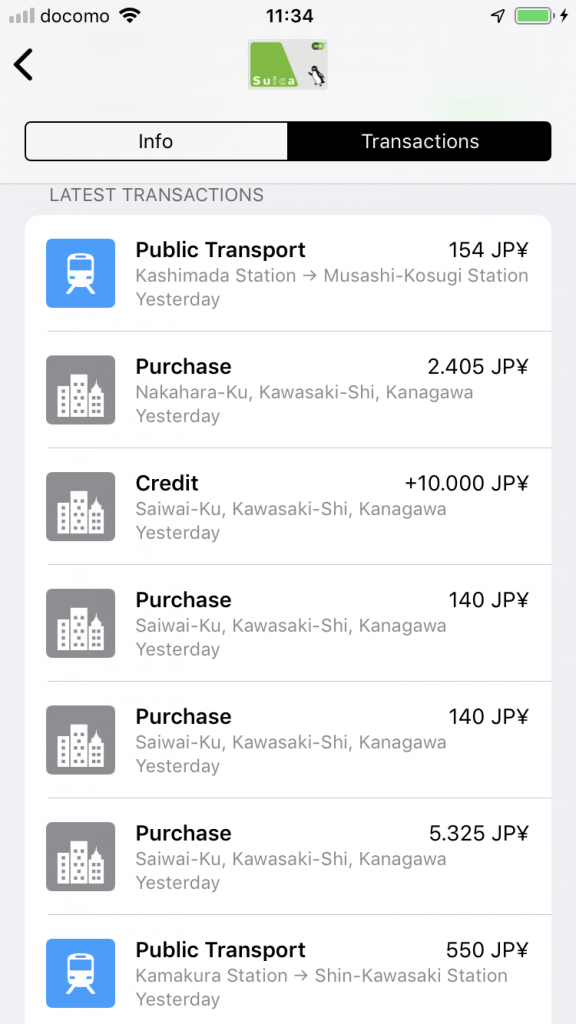

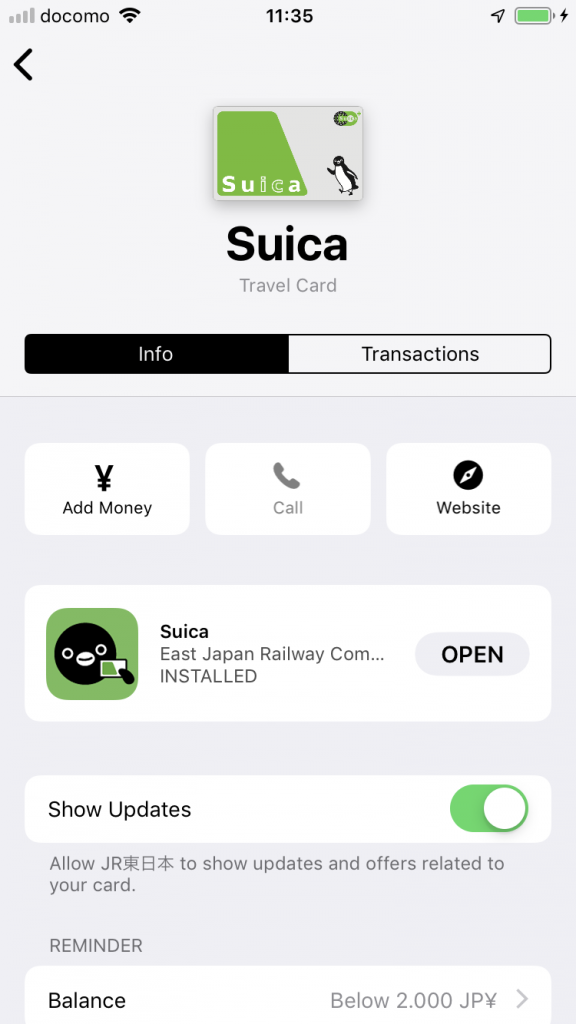

Super Urban Intelligent CArd

Suica (スイカ Suika) is a rechargeable contactless smart card, electronic money used as a fare card on train lines in Japan, launched on November 18, 2001. The card can be used interchangeably with JR West’s ICOCA in the Kansai region and San’yō region in Okayama, Hiroshima, and Yamaguchi Prefectures, and also with JR Central’s TOICA starting from spring of 2008, JR Kyushu’s SUGOCA, Nishitetsu’s Nimoca, and Fukuoka City Subway’s Hayakaken area in Fukuoka City and its suburb areas, starting from spring of 2010. The card is also increasingly being accepted as a form of electronic money for purchases at stores and kiosks, especially within train stations. As of October 2009, 30.01 million Suica are in circulation.

https://en.wikipedia.org/wiki/Suica

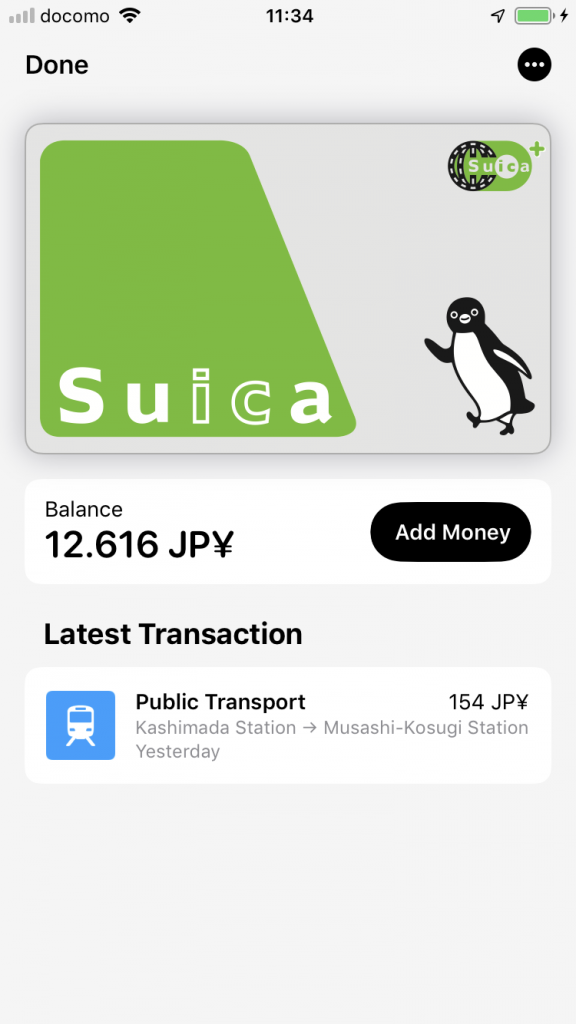

This time around we really made use of electronic payment and avoided cash whenever possible.

There were only a few occasions when we needed the physical credit card. Of course, at a number of tourist spots further away from central Tokyo cash was still king.

Between my first trip to Japan and today a lot has changed, and electronic payment has been adopted very quickly. Compared to Germany: Lightning fast adoption in Japan!

The single best thing that has happened recently in this regard was that Apple Pay became available in Germany earlier this year. With the iPhone and Watch already supporting SUICA (you can get a card on the phone/watch), the availability of Apple Pay bridged the gap and made it possible to add money to the SUICA card on the go. As a visitor to Japan you would otherwise top up the SUICA card in convenience stores and train stations, and mostly with cash. With the Apple Pay method you simply transfer money in the app from your credit card to the SUICA in an instant.

This whole electronic money concept works end-to-end in Japan. Almost every shop takes it. You swipe your SUICA and you are done. And not only for small amounts: everything up to 20,000 JPY will work (about 150 Euro).

And when you pass through a train station gate to pay for your trip, you simply hold your phone/watch up to the gate while walking past. This is what it looks like, screen-recorded in real time:

I wish Germany would adopt this faster.

Oh, important fact: This whole SUICA thing is 100% anonymous. You get a card without giving out any information. You can top it up with cash without any link to you.

Phonebooks… Why do they still exist?

Japan family trip April 2019 (part 8)

Japan family trip April 2019 (part 7)

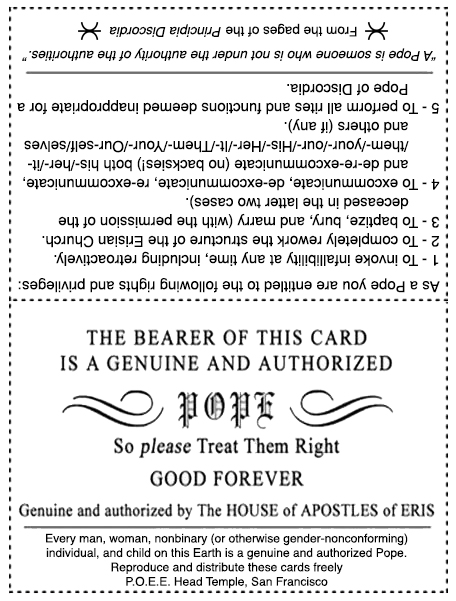

Celebrate Universal Ordination Day

Commemorating the Ordination of the Universe by passing out as many Authorized and Authentic All-Purpose Discordian Society Ordination Certificates as possible.

Discordian Wiki

Upon completing 52 years and 11 days of studying the universe, Omar Khayyam Ravenhurst (under his alias of Kerry Wendell Thornley) became an ordained Minister of the Universal Life Church — on Sweetmorn 43 Discord, 3156 (April 26th 1990).

A subtle Buddhist teaching that nobody without the Buddha Mind understands is that when the Buddha was enlightened, the whole universe — with all its sentient beings, inanimate objects and blunt instruments — attained Satori with him.

On April 26th of 1990 the entire cosmos — people, stars, space rubbish and all — became an ordained minister and so anyone or anything is now legally qualified in most states to get drunk at weddings and giggle at funerals, spit holy water, christen puppies and preach salvation by fire and brimstone.

Only an ordained minister, however, can see how this is possible.

So, on Universal Ordination Day we commemorate the Ordination of the Universe by passing out as many Authorized and Authentic All-Purpose Discordian Society Ordination Certificates as possible.

Whoever distributes the most of these becomes Pastor Present of the Permanent Universal Tax Strike Universal Life Church of the Permanent Universal Rent Strike and may fly anywhere in the world, for a whole year, free — if they can figure out how to fly and providing they always first say “Up, up and away!”

“Every Man, Woman and Child is a Pope!”
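
The date in the quote above, Sweetmorn 43 Discord, 3156 for April 26th 1990, can be checked with a little arithmetic. What follows is only a minimal sketch, assuming the commonly described Discordian calendar rules: five seasons of 73 days each, a five-day week that starts on January 1st, a year offset of 1166, and February 29th counted as St. Tib's Day outside the week. The function name `to_discordian` is made up for this illustration.

```python
import calendar
from datetime import date

SEASONS = ["Chaos", "Discord", "Confusion", "Bureaucracy", "The Aftermath"]
WEEKDAYS = ["Sweetmorn", "Boomtime", "Pungenday", "Prickle-Prickle", "Setting Orange"]


def to_discordian(d):
    """Convert a Gregorian date to a Discordian date string (assumed rules, see text)."""
    year = d.year + 1166  # assumed offset between Gregorian year and Discordian year
    if d.month == 2 and d.day == 29:
        return f"St. Tib's Day, {year}"

    # Zero-based day of the year; in leap years skip over February 29th
    # so that every year has exactly 5 * 73 = 365 countable days.
    day_of_year = d.timetuple().tm_yday - 1
    if calendar.isleap(d.year) and day_of_year >= 60:
        day_of_year -= 1

    season, day_in_season = divmod(day_of_year, 73)
    weekday = WEEKDAYS[day_of_year % 5]
    return f"{weekday} {day_in_season + 1} {SEASONS[season]}, {year}"


print(to_discordian(date(1990, 4, 26)))
```

April 26th is day 116 of a non-leap year, so it is the 43rd day of the second season, and 115 is divisible by 5, which makes it a Sweetmorn.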

undefined parameters

bliss is: waking up to this

This is how my bedroom sounds at 5 am. There’s barely any light here in Germany yet, but spring can’t hold it back. There are so many birds right around the house and in the garden that it’s really really really impressive to hear.

Yes. I am not living in a city. And still you can faintly hear the hum of cars/trucks in the background. Sadly.

I made this recording by holding my iPhone up in the air and using the voice recorder app included in the OS.

Nevertheless this is quite a contrast to the last 2+ weeks in Tokyo.

Japan family trip April 2019 (part 6)

Japan family trip April 2019 (part 5)

Japan family trip April 2019 (part 4)

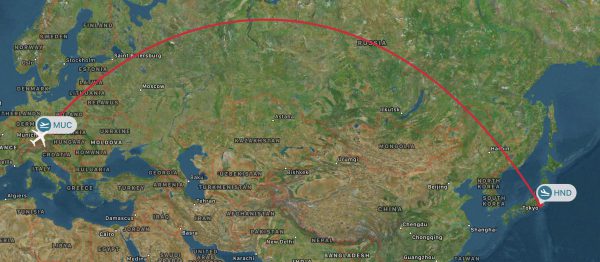

gone fishing and back

This blog has probably been a bit more silent than usual in the past days. This is because we were away and out of the country.

Japan family trip April 2019 (part 3)

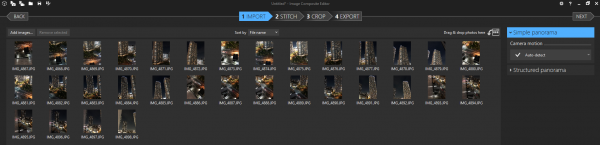

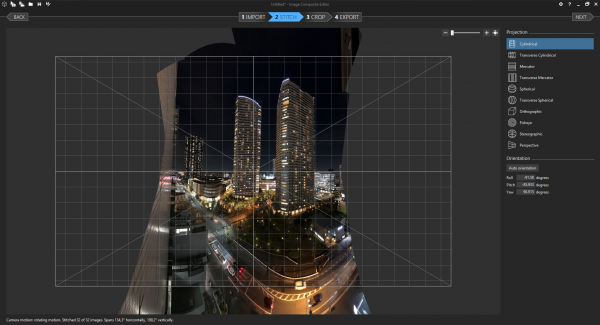

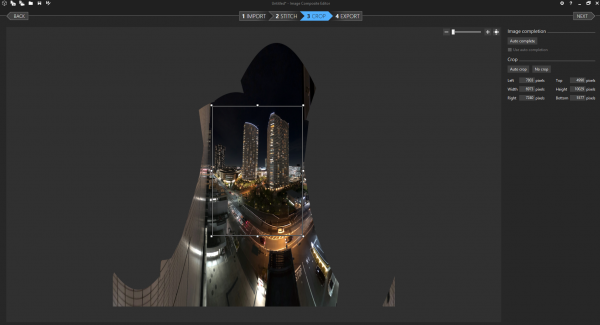

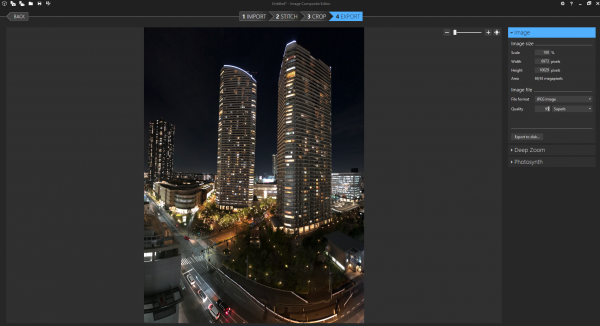

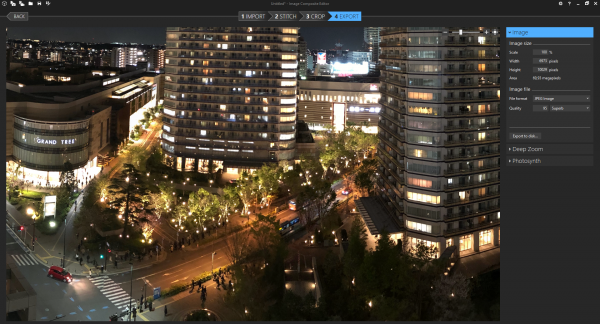

Panoramic Images free (-hand)

I really like taking panoramic images whenever I can. They convey a much better impression of the situation I’ve experienced than a single image. At least for me. And because of the way they are made – stitched together from multiple images – they are very big most of the time. A lot of pixels to zoom into.

The process to take such a panoramic image is very straightforward:

- Take overlapping pictures of the scenery in multiple layers if possible. If necessary freehand.

- Make sure the pictures overlap enough but there’s not a lot of questionable movement in them (like the same person appearing in multiple pictures…)

- Copy them to a PC.

- Run the free Microsoft Image Composite Editor.

- Pre-/Post process for color.

The tools used are all free. My recommendation is the Microsoft Image Composite Editor, which itself started out as a Microsoft Research project.

Image Composite Editor (ICE) is an advanced panoramic image stitcher created by the Microsoft Research Computational Photography Group. Given a set of overlapping photographs of a scene shot from a single camera location, the app creates high-resolution panoramas that seamlessly combine original images. ICE can also create panoramas from a panning video, including stop-motion action overlaid on the background. Finished panoramas can be saved in a wide variety of image formats,

Image Composite Editor

Here’s what the stitching process of the Musashi-kosugi Park City towers night image looked like:

Japan family trip April 2019 (part 2)

Eleven

Today I have been married to my beloved Stephanie for 11 years.

We rocked 11 years. Let’s rock a lot more!

グランツリー武蔵小杉 and Park City Forest Towers

Japan family trip April 2019 (part 1)

How to solve the city traffic problem

Constructive Feedback in Difficult Situations

This post is in good part just a “repost” of something I’ve read on Volker’s blog: “How to Deliver Constructive Feedback in Difficult Situations”. I want to have it noted down here as well.

“We are dangerous when we are not conscious of our responsibility for how we behave, think, and feel.“

Marshall B. Rosenberg, Nonviolent Communication

There’s a good article about the book. Volker’s article sparked my interest and I am now reading it.

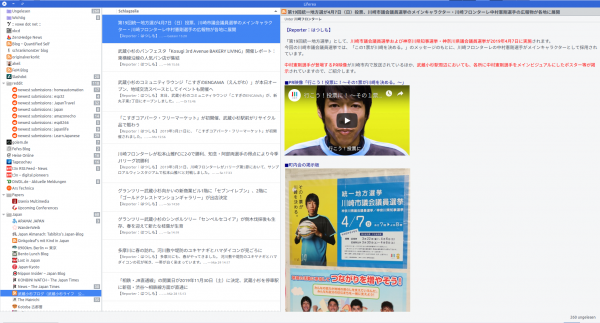

RSS is here. Use it!

RSS aka RDF Site Summary aka Rich Site Summary aka Really Simple Syndication is a standardized web format that works for you.

At least it would work for you if you used a tool that allows you to “subscribe” to RSS feeds from all sorts of websites. These tools are called feed readers.

The website you are reading this on offers such a feed link. By subscribing to a site’s feed you will be able to see all of its content without having to actually visit each of your subscriptions one by one. That is done by the feed reader. This process of aggregation is why feed readers are also called aggregators.
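
To make the aggregation idea a bit more concrete, here is a minimal sketch of what a feed reader does at its core: parse several RSS 2.0 documents and merge their items into one timeline, newest first. The two feeds are tiny hand-written samples for hypothetical sites, and `parse_feed` and `aggregate` are names made up for this illustration. A real reader would download the feeds over HTTP and also cope with Atom and with missing fields.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime

# Hand-written RSS 2.0 samples standing in for real, downloaded feeds.
FEED_A = """<rss version="2.0"><channel>
  <title>Blog A</title>
  <item><title>Older post</title><link>https://a.example/1</link>
        <pubDate>Mon, 01 Apr 2019 08:00:00 +0000</pubDate></item>
  <item><title>Newest post</title><link>https://a.example/2</link>
        <pubDate>Wed, 03 Apr 2019 08:00:00 +0000</pubDate></item>
</channel></rss>"""

FEED_B = """<rss version="2.0"><channel>
  <title>Blog B</title>
  <item><title>Middle post</title><link>https://b.example/1</link>
        <pubDate>Tue, 02 Apr 2019 08:00:00 +0000</pubDate></item>
</channel></rss>"""


def parse_feed(xml_text):
    """Return the items of one RSS 2.0 document as a list of dicts."""
    channel = ET.fromstring(xml_text).find("channel")
    source = channel.findtext("title")
    return [
        {
            "source": source,
            "title": item.findtext("title"),
            "link": item.findtext("link"),
            # RSS dates use the RFC 822 format, which the email module can parse.
            "published": parsedate_to_datetime(item.findtext("pubDate")),
        }
        for item in channel.findall("item")
    ]


def aggregate(feeds):
    """Merge the items of all feeds into one timeline, newest first."""
    entries = [entry for feed in feeds for entry in parse_feed(feed)]
    return sorted(entries, key=lambda entry: entry["published"], reverse=True)


timeline = aggregate([FEED_A, FEED_B])
for entry in timeline:
    print(entry["published"].date(), entry["source"], entry["title"])
```

The point of the sketch is that nothing here is filtered or ranked by anyone else: the timeline contains exactly the items of the feeds you chose, in chronological order.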

Invented exactly 20 years ago this month on the back-end of a feverish dot-com boom, RSS (Real Simple Syndication) has persisted as a technology despite Google’s infamous abandonment with the death of Google Reader and Silicon Valley social media companies trying and succeeding to supplant it. In the six years since Google shut down Reader, there have been a million words written about the technology’s rise and apparent fall.

RSS is Better Than Twitter

Here’s what’s important: RSS is very much still here. Better yet, RSS can be a healthy alternative…

I am using Liferea as my feed reader on desktop and Reeder on all that is iOS/macOS.

I’ve found that by using RSS feeds and not following a pre-filtered timeline I would not “follow” 1000 sources of information but choose more carefully whom to follow.

Some do not offer any feeds – so my decision in these cases is whether or not I would invest the time to create a custom parser to pull in their content.

Once you see RSS as just another XML format, you quickly realize that HTML is a close relative (strictly speaking only XHTML is valid XML). There are simple ways to convert between the two on the fly, like fetchrss.com or your command line.
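
As a rough idea of what such a custom parser can look like, here is a minimal sketch that pulls the links out of an HTML page and writes them out as RSS 2.0 items, using only the Python standard library. The page content, the URLs and the names `LinkCollector` and `html_to_rss` are made up for this illustration. A real site would need more specific rules than "every link is a post".

```python
from html.parser import HTMLParser
import xml.etree.ElementTree as ET


class LinkCollector(HTMLParser):
    """Collects (href, text) pairs for every <a href="..."> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def html_to_rss(html, feed_title, site_url):
    """Build an RSS 2.0 document with one item per link found in the HTML."""
    collector = LinkCollector()
    collector.feed(html)

    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = feed_title
    ET.SubElement(channel, "link").text = site_url
    ET.SubElement(channel, "description").text = "Generated from HTML"
    for href, text in collector.links:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = text
        ET.SubElement(item, "link").text = href
    return ET.tostring(rss, encoding="unicode")


# Hypothetical page of a site that offers no feed of its own.
PAGE = """<html><body><h1>News</h1>
<ul>
  <li><a href="https://site.example/post-1">First post</a></li>
  <li><a href="https://site.example/post-2">Second post</a></li>
</ul></body></html>"""

feed_xml = html_to_rss(PAGE, "Site without a feed", "https://site.example/")
print(feed_xml)
```

The HTML side goes through `html.parser`, which tolerates markup that is not well-formed XML, while the RSS side is built with an XML library so the output is always well-formed.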

Of course RSS is not the only feed format: Atom would be another one worth mentioning.

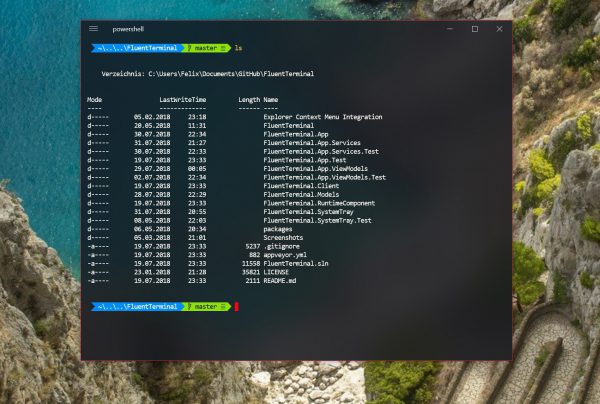

a terminal for Windows

Lately Windows seems to make an effort to stay out of the way as an operating system and user experience, and it appears to be regaining attention from developers.

For me this is all quite strange, as I personally would prefer switching from macOS to Linux rather than to Windows.

But for those occasions when you need to go with Windows, there’s now a terminal application that gives you, well, a good terminal. Try FluentTerminal.

not the only pope writing code

Recently news broke…

…Code.org CEO Hadi Partovi noted in late 2016 that he was “still working on Pope Francis.” GeekWire reports that Partovi was able to cross that one off his bucket list Thursday, as he helped Pope Francis become ‘the first Pope to write a line of code’ at a ‘Programming for Peace’ event…

slashdot

At first I was confused about which pope it could be that had not written any code before. But I quickly realized that the news is about the 266th pope of the Catholic Church.

Of course a lot more popes had already coded before him. Evidently a lot earlier. And to complete the circle, make yourself comfortable: In Discordianism you are a pope!

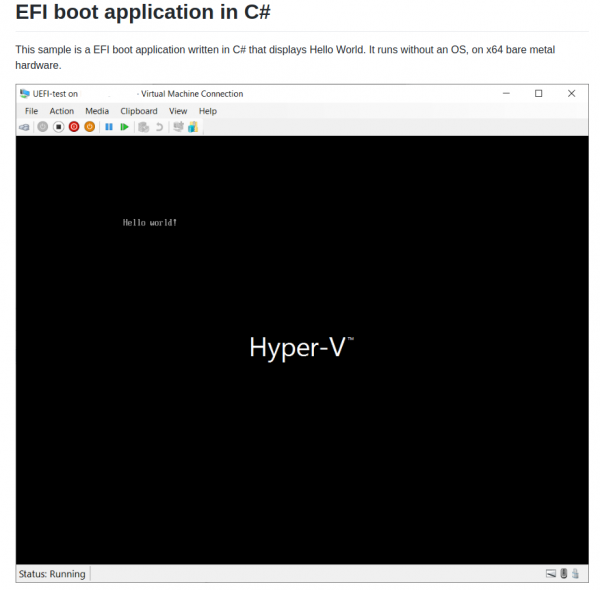

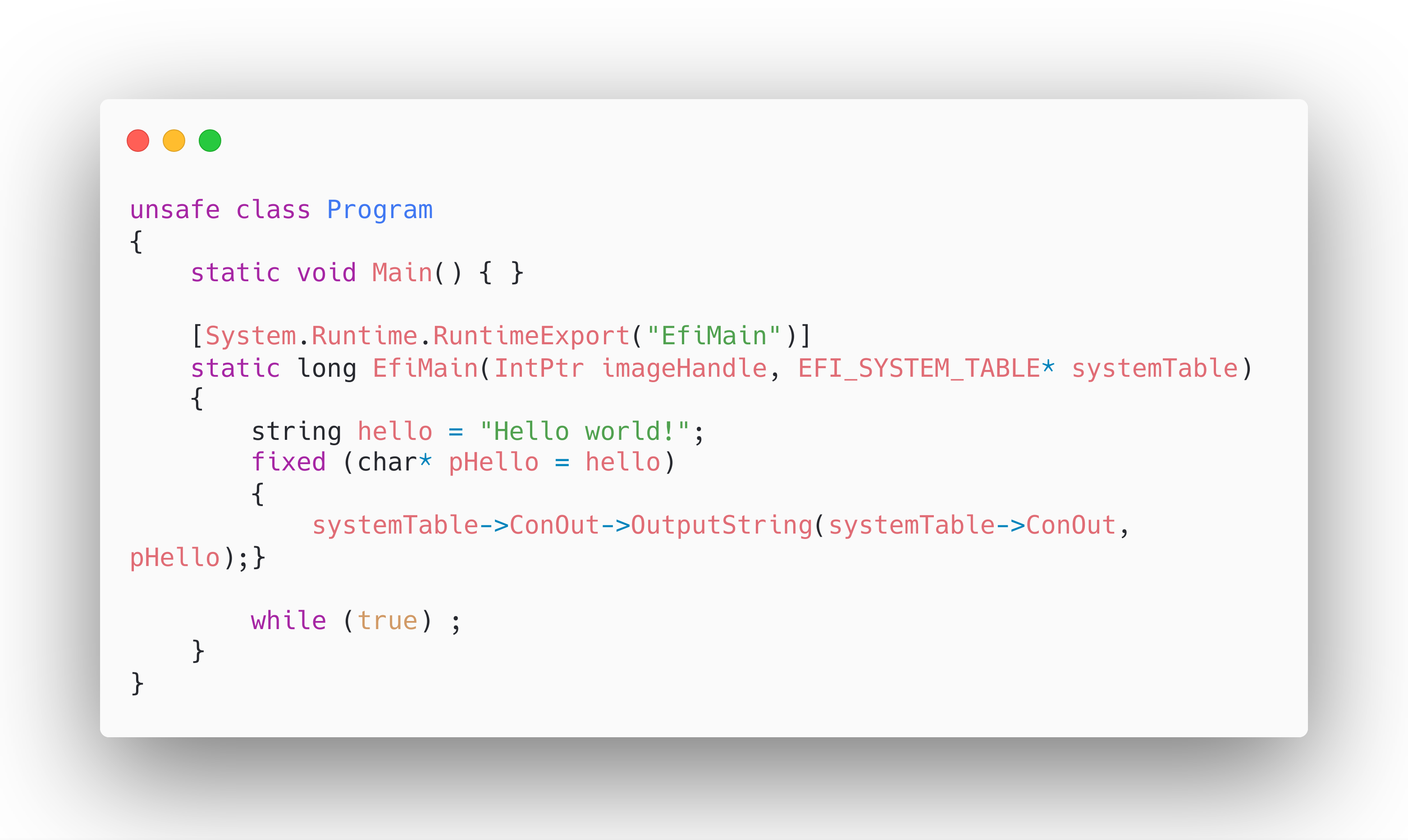

EFI boot app in C#

Zero-Sharp is using the CoreRT runtime to very impressively demonstrate how to get down to bare-metal application operation using C#. It compiles programs into native code…

Everything you wanted to know about making C# apps that run on bare metal, but were afraid to ask:

A complete EFI boot application in a single .cs file.

Michal Strehovský on Twitter

This is seriously impressive and the screenshot says it all:

archive your slice of the web

Many use and love archive.org, a service that roams the public internet and archives whatever it finds. It even creates timelines of websites so you can dive right into history.

Have a piece of history right here:

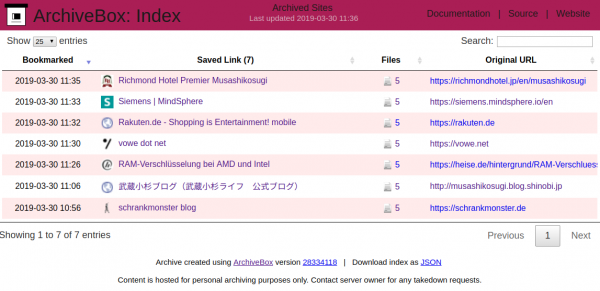

You can have something similar hosted in your own environment. There are numerous open source projects dedicated to this archival purpose. One of them is ArchiveBox.

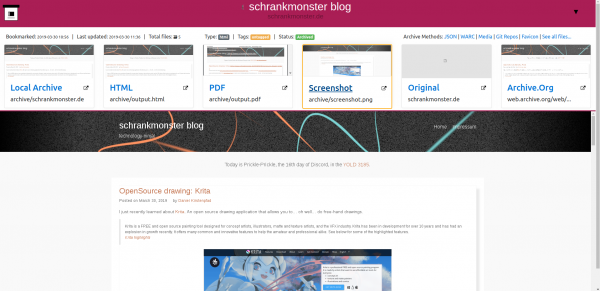

ArchiveBox takes a list of website URLs you want to archive, and creates a local, static, browsable HTML clone of the content from those websites (it saves HTML, JS, media files, PDFs, images and more).

I’ve done my set-up of ArchiveBox with the provided Dockerfile. Every once in a while it will start the Docker container and check my Pocket feed for any new bookmarks. If it finds any, it will archive them.

As the HTML as well as a PDF and a screenshot are saved, this is extremely useful for later look-ups and even full-text search indexing.

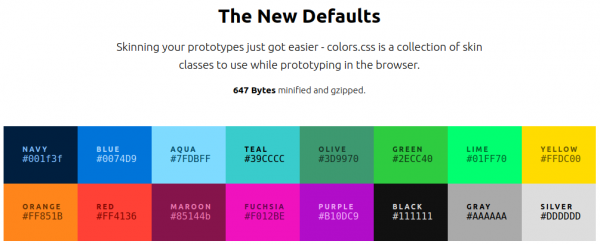

nicer color palette for the web

ポカヨケ

Poka-yoke (ポカヨケ, [poka yoke]) is a Japanese term that means “mistake-proofing” or “inadvertent error prevention“. A poka-yoke is any mechanism in any process that helps an equipment operator avoid (yokeru) mistakes (poka). Its purpose is to eliminate product defects by preventing, correcting, or drawing attention to human errors as they occur.[1] The concept was formalised, and the term adopted, by Shigeo Shingo as part of the Toyota Production System.[2][3] It was originally described as baka-yoke, but as this means “fool-proofing” (or “idiot-proofing“) the name was changed to the milder poka-yoke.

https://en.m.wikipedia.org/wiki/Poka-yoke

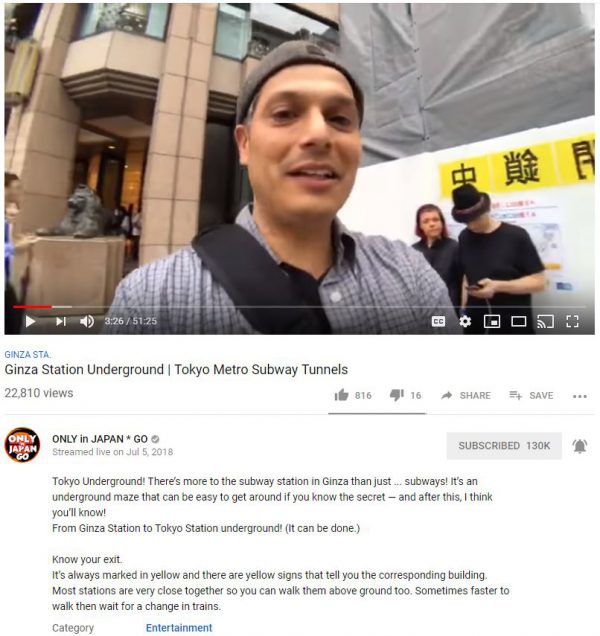

Only in Japan

When you are searching the internet for more information and things to learn about Japan you will inevitably also find John Daub and his “Only in Japan” productions. And that is a good thing!

ONLY in JAPAN is a series produced in Tokyo by one-man band John Daub.

Only in Japan Patreon page

Back in 2018 we even were around when John announced that he was going to live-stream.

And so we met up with him and eventually even said “Hi”.

Of course it wasn’t just us who got a good picture. We were part of the live stream as well – involuntarily as we had tried very hard to not be in frame.

Video Game History

Have you ever asked yourself what those generations coming after us will know about what was part of our culture when we grew up? As much as computers are a part of my story a bit of gaming also is.

From games on tape to games on floppy disks to CDs to no-media game streaming it has been quite a couple of decades. And with the demise of physical media access to the actual games will become harder for those games never delivered outside of online platforms. Those platforms will die. None of them will remain forever.

Hardware platforms follow the same logic: Today it’s the new hype. Tomorrow the software from yesterday won’t be supported by hardware and/or operating systems. Everything is in constant flux.

Emulation is a great tool for many use-cases. But it probably won’t solve all challenges. Preserving access to software and the knowledge around the required dependencies is the mission of the Video Game History Foundation.

Video game preservation matters because video games matter. Games are deeply ingrained in our culture, and they’re here to stay. They generated an unprecedented $91 billion dollars in revenue in 2016. They’re being collected by the Smithsonian, the Museum of Modern Art, and the Library of Congress. They’ve inspired dozens of feature films and even more books. They’re used as a medium of personal expression, as the means for raising money for charity, as educational tools, and in therapy.

Video Game History Foundation

And yet, despite all this, video game history is disappearing. The majority of games that have been created throughout history are no longer easily accessible to study and play. And even when we can play games, that playable code is only a part of the story.