When you want things to happen on a schedule, or want to log when they took place, a calendar is a good option. Even more so if you are looking for an intuitive way to interact with your home automation system.

Calendars can be shared and your whole family can have them on their phones, tablets and computers to control the house.

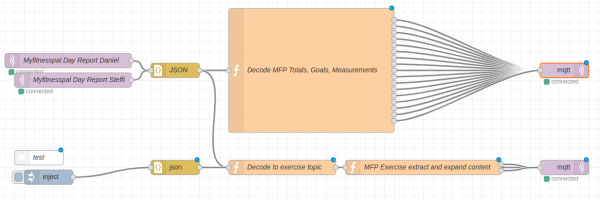

In general I use the Google Calendar nodes for Node-Red to send and receive events between Node-Red and Google. They come with the node-red-node-google package, which offers a lot of different options.

Of course, when you use those nodes you need to configure the credentials.

Part 1: Control

So you've got those light switches scattered around. You've got lots of things that can be switched on and off and controlled in all sorts of interesting ways.

And now you want to program a timer for when things should happen.

For example, you want to control when a light is switched on and when it is switched off again.

I created a separate calendar in Google Calendar, to which I add events in a notation I came up with. Those events have a start date and time and, of course, an end date and time.

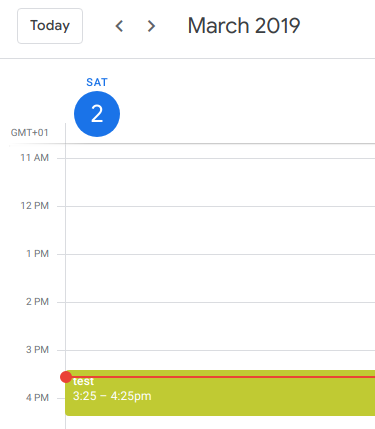

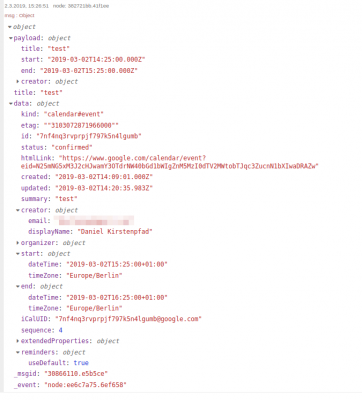

When I now create an event with the name “test” in the calendar…

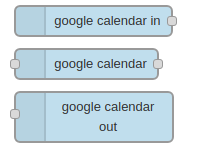

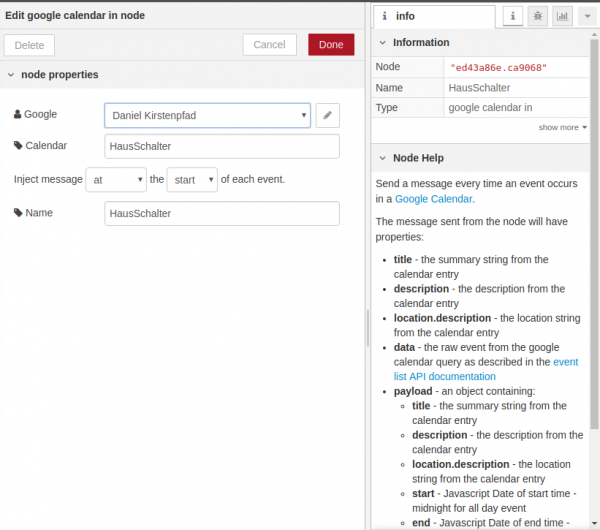

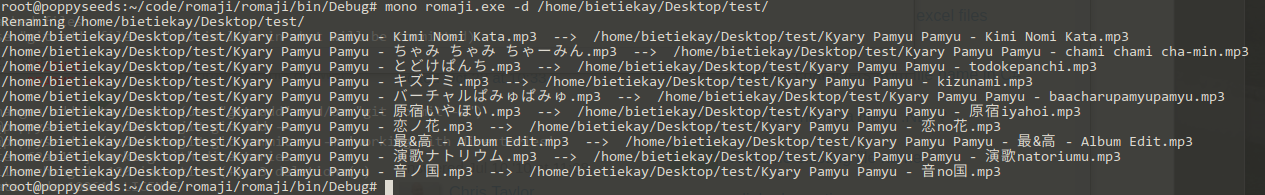

And in Node-Red you would configure the “google calendar in” node like so:

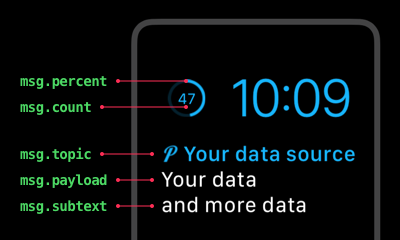

Once this is wired up correctly, every time an event in this calendar starts you will get a message with all the details of the event.

With this you can now go crazy on the actions, like using the event name to identify which switch to toggle, or the description to pass extra information to your flow and the actions to be taken. This is fully flexible. And of course you can control it from your phone if you want.
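As a rough idea of how the event name could pick the switch, here is a minimal sketch. It assumes the event name arrives as `msg.title` (or `msg.payload.title`) and the description as `msg.description`, so check the actual message in a debug node first. The helper name `eventToSwitchCommand` and the `home/switch/...` topic scheme are made up for illustration.

```javascript
// Hypothetical helper: turn a calendar event message into a switch command.
// Assumption: the event name is in msg.title or msg.payload.title and the
// event description in msg.description or msg.payload.description.
function eventToSwitchCommand(msg, state) {
    const payload = (msg.payload && typeof msg.payload === "object") ? msg.payload : {};
    const title = String(msg.title || payload.title || "").trim().toLowerCase();
    if (!title) {
        return null; // no event name, so there is nothing to switch
    }
    return {
        topic: "home/switch/" + title, // hypothetical topic scheme
        payload: state,                // e.g. "on" when the event starts
        note: msg.description || payload.description || ""
    };
}

// Inside a Node-Red function node the body would simply be:
//     return eventToSwitchCommand(msg, "on");
const example = eventToSwitchCommand({ title: "Test", description: "demo" }, "on");
console.log(example);
```

Returning `null` from a function node drops the message, so events without a name simply do nothing.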

Part 2: Information

You may also want to have events that happened logged in the calendar rather than in a plain logfile. This comes in very handy, as you can easily see, for example, when people arrived home or left home, or when certain long-running jobs started and ended.

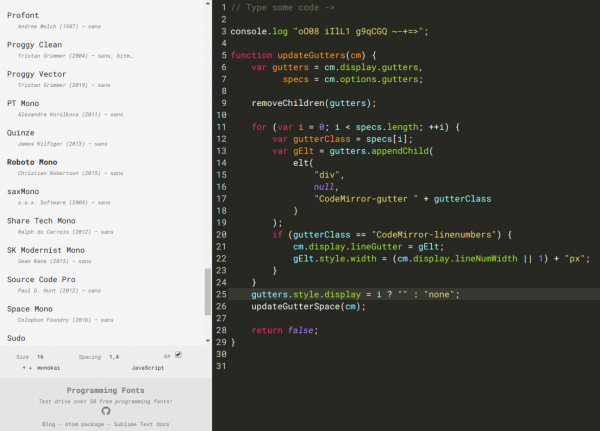

To achieve this you can use the calendar out nodes for Node-Red and prepare a message using a function node like this:

    var event = {
        'summary': msg.payload,
        'location': msg.location,
        'description': msg.payload,
        'start': {
            'dateTime': msg.starttime, // e.g. '2015-05-28T09:00:00-07:00'
            'timeZone': 'Europe/Berlin'
        },
        'end': {
            'dateTime': msg.endtime, // e.g. '2015-05-28T17:00:00-07:00'
            'timeZone': 'Europe/Berlin'
        },
        'recurrence': [
            //'RRULE:FREQ=DAILY;COUNT=2'
        ],
        'attendees': [
            //{'email': 'lpage@example.com'},
            //{'email': 'sbrin@example.com'}
        ],
        'reminders': {
            'useDefault': true,
            'overrides': [
                //{'method': 'email', 'minutes': 24 * 60},
                //{'method': 'popup', 'minutes': 10}
            ]
        }
    };

    msg.payload = event;

    return msg;
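The function node above reads `msg.payload`, `msg.location`, `msg.starttime` and `msg.endtime`, so something upstream has to fill those in. A minimal sketch of how that could look, assuming a fixed duration per log entry; the helper name `buildLogMessage` and the example values are made up for illustration.

```javascript
// Hypothetical helper: build the fields the event-building function node reads.
// Assumption: every log entry gets a fixed duration in minutes.
function buildLogMessage(text, location, start, durationMinutes) {
    const end = new Date(start.getTime() + durationMinutes * 60 * 1000);
    return {
        payload: text,                  // becomes summary and description
        location: location,
        starttime: start.toISOString(), // UTC timestamp, e.g. 2019-03-22T13:00:00.000Z
        endtime: end.toISOString()
    };
}

const logMsg = buildLogMessage(
    "Washing machine finished",
    "Home",
    new Date("2019-03-22T13:00:00Z"),
    5
);
console.log(logMsg.starttime); // 2019-03-22T13:00:00.000Z
console.log(logMsg.endtime);   // 2019-03-22T13:05:00.000Z
```

`toISOString()` always produces a UTC timestamp ending in `Z`, so the moment in time is unambiguous regardless of the `timeZone` set on the event.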

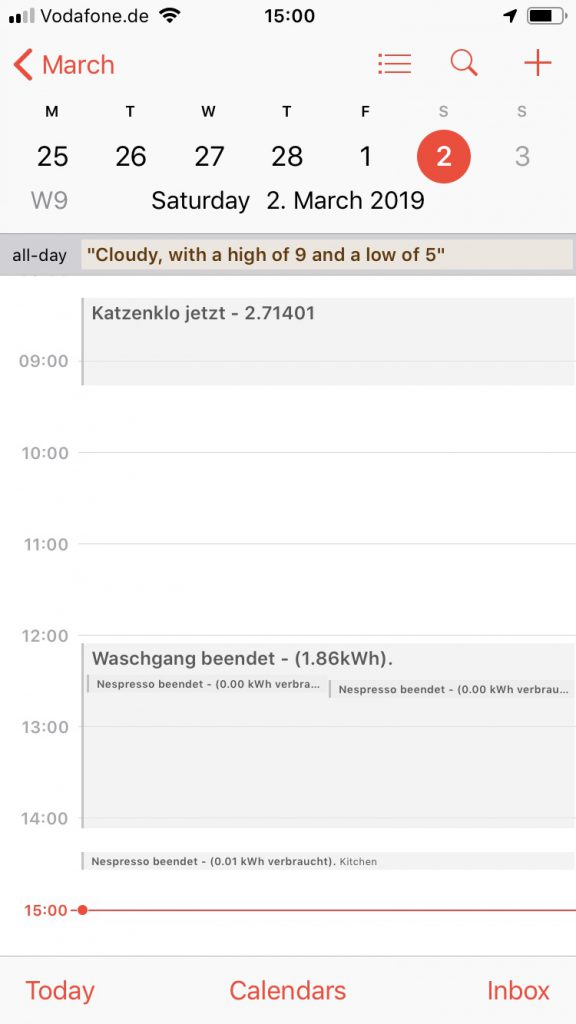

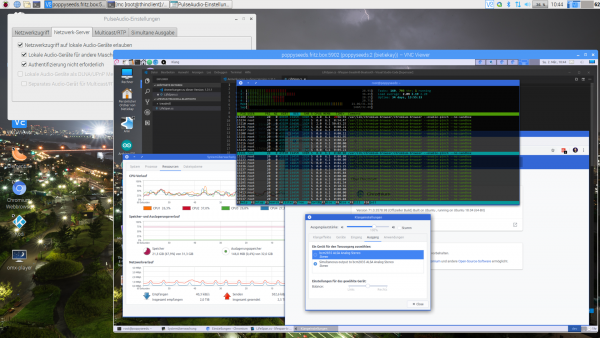

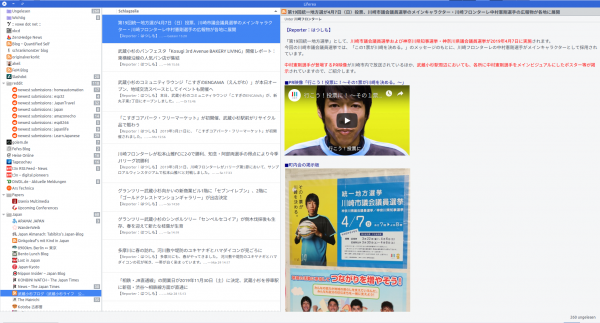

And as said, we are using it for all sorts of things: when the cat uses her litter box, or when the washing machine, dryer or dishwasher starts and finishes. Or simply to count how many Nespresso coffees we’ve made. Things like when members of the household arrive at and leave places like work or home. When movement is detected, or when anything out of order or notable needs to be written down.

And of course it’s as convenient as can be. Here’s the view of a recent Saturday:

![image 12](https://www.schrankmonster.de/wp-content/uploads/2019/03/Bildschirmfoto-zu-2019-03-22-14-13-41.png)